These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

Teeny-Tiny Castle

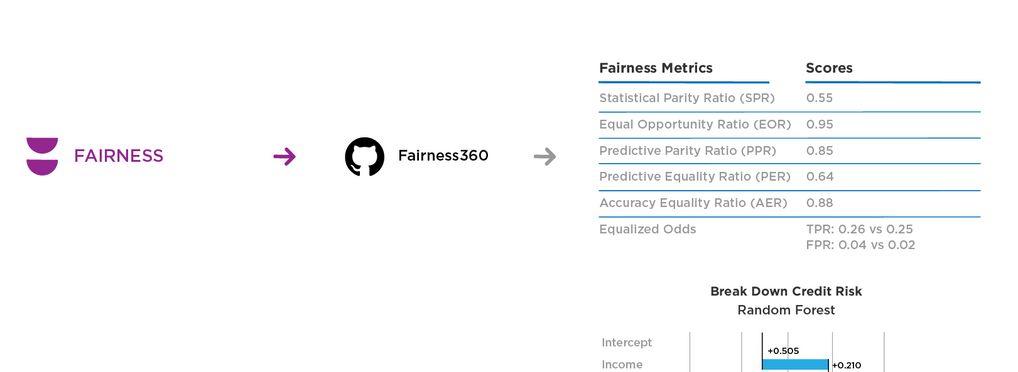

AI ethics and safety are relatively new fields, and most of the development community is still unfamiliar with their tools and how to use them. To address this problem, we created the Teeny-Tiny Castle, an open-source repository containing "Educational tools for AI ethics and safety research."

There, developers can find many examples of how to use programming tools to address problems raised in the literature (e.g., algorithmic discrimination and model opacity).

Currently, Teeny-Tiny Castle has several examples of how to work ethically and safely with AI. We focus on issues related to Accountability & Sustainability, Interpretability, Robustness/Adversarial, and Fairness. Each is worked through examples drawn from some of the most common contemporary AI applications (e.g., Computer Vision, Natural Language Processing, and Classification & Forecasting).
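
To give a sense of the kind of check a fairness example might involve, here is a minimal sketch of one widely used metric, the demographic parity difference (the gap in positive-prediction rates between groups). It is not taken from the Teeny-Tiny Castle repository; the function name `demographic_parity_difference` and the toy data are hypothetical and serve only as illustration.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the largest gap in positive-prediction rate
    between any two groups, and the per-group rates themselves.

    Hypothetical helper for illustration only.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    # Share of positive predictions within each group
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Toy, made-up data: binary predictions and a group label per individual
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates, gap)
```

A gap near zero means the model gives positive predictions to each group at similar rates. A large gap is a signal worth investigating, though it is not by itself proof of discrimination.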

About the tool

Tags:

- ai reliability

- fairness

- accountability

- explainability

- carbon reporting

Partnership on AI