AI Safety Institutes: Can countries meet the challenge?

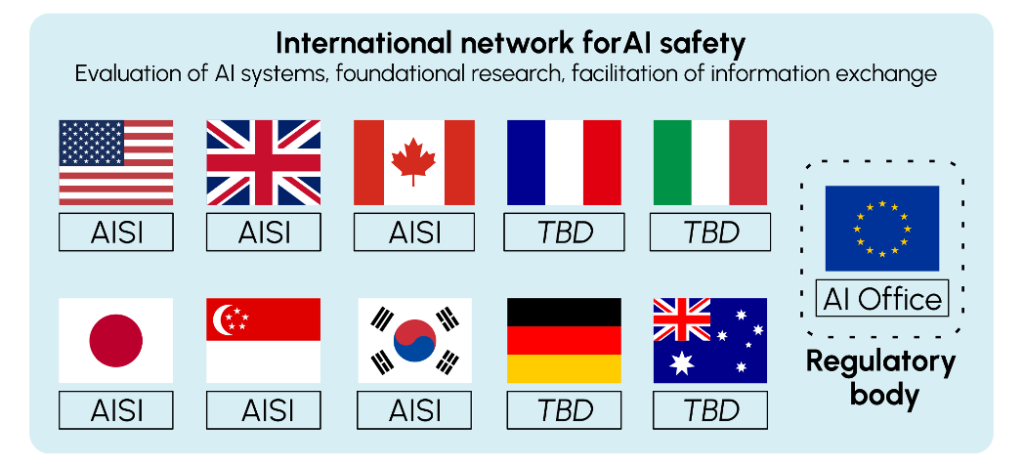

Governments need to understand what advanced AI models can do in order to manage the risks and seize the benefits of AI. In recent months, recognising the need to keep up with the unprecedented pace of AI development, the U.S., U.K., Japan, Canada and Singapore have established specialised bodies known as AI Safety Institutes (AISIs). They are tasked with evaluating AI systems’ capabilities and risks, conducting fundamental safety research, disseminating their results and facilitating information exchange among stakeholders. Through its AI Safety Unit, the European Union’s AI Office will carry out similar responsibilities in addition to its primary mandate as a regulator. The creation of these bodies marks a significant step towards a coordinated global strategy for ensuring safe and beneficial AI.

What roles for AI safety institutes?

Tackle the unpredictability of AI systems. AI models are inherently complex and often unpredictable. The current state of AI technology makes it difficult to predict how a model will respond to new, unseen inputs or to assess the upper limits of its capabilities. This poses significant challenges for safety and reliability. For instance, the possibility of extending an AI system’s functions by connecting it to external tools or other add-ons complicates safe deployment and use. Given the vast uncertainty about the capabilities and potential risks of the next generations of AI models, evaluations and safeguards that work today may not work tomorrow.

Evaluation standards and information gathering. While AI developers conduct safety research and evaluations, there are no standard approaches to ensure their quality and consistency. Moreover, certain evaluations, particularly those involving national security, can only be conducted by government bodies authorised to access and handle sensitive information. By establishing AISIs, governments can develop a robust body of knowledge on AI’s capabilities, its impact on society, and its development trajectory, which is essential for creating informed policies and standards, including at the international level.

Build consensus for better AI governance. AI safety institutes bridge theoretical AI research and practical governance, ensuring policymakers are well-informed and prepared to make good decisions. They could also help foster global scientific consensus on the capabilities and risks of AI models and how to carry out robust tests and evaluations.

Variations in AI safety institutes across countries. Countries differ on the specific role they assign to their AISI. In the U.K., for example, the mission of the AI Safety Institute is explicitly to “minimise surprise to the UK and humanity from rapid and unexpected advances in AI.” The institutes in the U.S., Canada, Japan and Singapore have similar but distinct mandates. The U.S. AI Safety Institute, for instance, will coordinate diverse actors nationally and internationally to develop robust AI safety and testing standards. Other bodies are more than technical centres of expertise. The European Union’s new AI Office, for example, has regulatory powers, can request information from model providers, and can apply sanctions to enforce the AI Act.

On the whole, AI safety institutes could fulfil three primary functions:

- Evaluate AI systems: One crucial role these institutes could play is to improve our evaluation tools. AISIs can help assess the safety-relevant capabilities of advanced AI systems, the security of those systems, and their potential societal impacts. Despite the need for these evaluations, there is not yet an established, standardised way to conduct them.

- Conduct foundational research: AISIs may be pivotal in initiating and supporting foundational research in AI safety. This includes sponsoring exploratory projects and bringing together researchers from various disciplines with academic and industry stakeholders. This is key, as AI safety and AI evaluation are nascent interdisciplinary fields that require further scientific advances.

- Facilitate information exchange: AISIs could help disseminate crucial knowledge and insights about AI technologies by establishing national and international information-sharing channels. This is vital for fostering collaboration and coordination among stakeholders, including policymakers, private companies, academia, and the public.

The promise of international coordination

From their inception, AI safety institutes have been envisioned as crucial players in the international arena. The United Kingdom’s initial announcement emphasised that its institute would make its findings globally accessible to enable coordinated responses to the opportunities and risks posed by AI. A rapid succession of international partnerships followed this announcement. The U.K. and the U.S. committed to collaborative efforts, including developing comprehensive evaluation suites for AI systems and carrying out joint testing exercises on publicly accessible AI models. These initiatives extend to personnel exchanges and reciprocal information sharing on AI risks and capabilities. The U.K. and Canada launched a similar partnership, which also focuses on sharing the computing resources crucial for AI safety research and evaluations.

The culmination of these bilateral efforts is the recent establishment of an international network for AI safety involving ten countries and the European Union, announced at the May 2024 Seoul AI Summit. This network aims to facilitate extensive sharing of insights on AI models’ limitations, capabilities and associated risks, and to monitor AI-related safety incidents globally. This framework should help distribute knowledge more evenly across international boundaries.

Effective communication and information sharing are fundamental to international coordination. They enable countries to build trust, exchange expertise, and develop a shared understanding of the most pressing AI risks, paving the way for deeper cooperation. In the future, nations might consider a division of labour along geographical or thematic lines, which could streamline evaluation processes and allow each institute to build deeper expertise in particular areas of AI testing.

AI safety institutes can enrich international coordination in several ways:

- Capacity building: Countries can collaborate to assist each other in developing regulatory frameworks and technical capacities to evaluate and manage AI technologies effectively.

- Science-based thresholds for regulation: Governments use various means to determine when regulatory requirements apply to AI development and deployment. AISIs could work together to define compatible thresholds for triggering evaluations or other responses, such as the compute-based thresholds often used in current and planned standards and policies. Similarly, AISIs could help determine the thresholds beyond which risks from an AI model are considered intolerable, as governments agreed to do at the Seoul AI Summit.

- Global reports: AI safety institutes could play a significant role in periodic updates of the IPCC-inspired International Report on AI Safety. The report synthesises existing research on AI systems’ capabilities and risks, and its initial findings were presented at the Seoul AI Summit. AISIs’ contributions could help ensure the report reflects the latest research and evaluations.

- Global governance: These institutes are uniquely positioned to contribute to their governments’ efforts in international bodies such as the EU-U.S. Trade and Technology Council (TTC), the G7 and the G20, promoting technically grounded governance strategies. They could also help set international standards.

Sharing data, competition, and remit differences pose challenges

International collaboration among AI safety institutes promises substantial benefits, mainly through the exchange of technical tools and scientific findings between countries. However, some areas, particularly those concerning information sharing, present challenges. These include concerns about the confidentiality and security of sensitive information, legal incompatibilities between national mandates, and countries’ varying technical capacities to assess and understand advanced AI models. Addressing these issues will be crucial to realising the full potential of international coordination.

Sharing sensitive data at the international level is fraught with difficulties. Information about AI models’ capabilities is often commercially sensitive and can also affect national security. Institutes should therefore share findings from their evaluations and research in appropriate ways, through robust and secure communication channels. Nations will need assurance that shared data will be kept confidential and handled securely.

Differences in legal mandates between AI safety institutes in different jurisdictions pose another significant challenge. For instance, the EU’s AI Office has legal powers that allow comprehensive access to AI models, a level of access not always available in other jurisdictions. These differences can hinder the ability of institutes to share data freely and collaborate effectively, particularly when institutes’ mandates focus more on national security.

Disparities in technical capacity among nations also pose a considerable challenge. As government bodies financed with public money, AISIs must compete with generously funded AI companies to attract top-level AI engineers and scientists. The U.K. AI Safety Institute, for instance, recently opened a San Francisco office to be closer to a world-leading talent pool, after successfully hiring top researchers from large AI companies. But not all countries have the financial resources and technical expertise to evaluate the latest AI systems effectively. This discrepancy, particularly significant for developing countries, may call for capacity-building initiatives such as expert secondments or the sharing of technical tools.

Moreover, the type of access that AI safety institutes have to models is a crucial factor. While voluntary commitments from companies to provide API access are helpful, more comprehensive access may be required for thorough evaluations. The distinctions between pre-tuning and post-tuning access, and between white-box and black-box access, are pivotal and will affect how effective safety evaluations can be. White-box access, for example, lets evaluators inspect a model’s weights and internal workings, whereas black-box access limits them to querying the model and observing its outputs.

Addressing the challenges head-on

The birth of AI safety institutes represents a crucial step in managing advanced AI’s complexities. They are critical to assessing risks, conducting foundational research, and facilitating international collaboration. The challenges they face, ranging from ensuring secure and appropriate information sharing to overcoming legal and functional disparities, are significant, but with concerted effort and international cooperation they are surmountable. By addressing these challenges head-on, AI safety institutes can form the cornerstone of technically informed and globally coordinated action on AI.