DeepMind: Exploring institutions for global AI governance

In recent months, policy discussions have cited the need for some form of global governance for AI: from US Senate Judiciary Committee hearings to press articles, we have seen repeated calls to create an IPCC, CERN or IAEA equivalent for AI. Without adequate safeguards, some of AI’s capabilities, such as automated software development, chemistry and synthetic biology research, and text and video generation, could be misused to cause harm. An inclusive, multilateral solution is needed to mitigate these risks while addressing, and not exacerbating, the global digital divide.

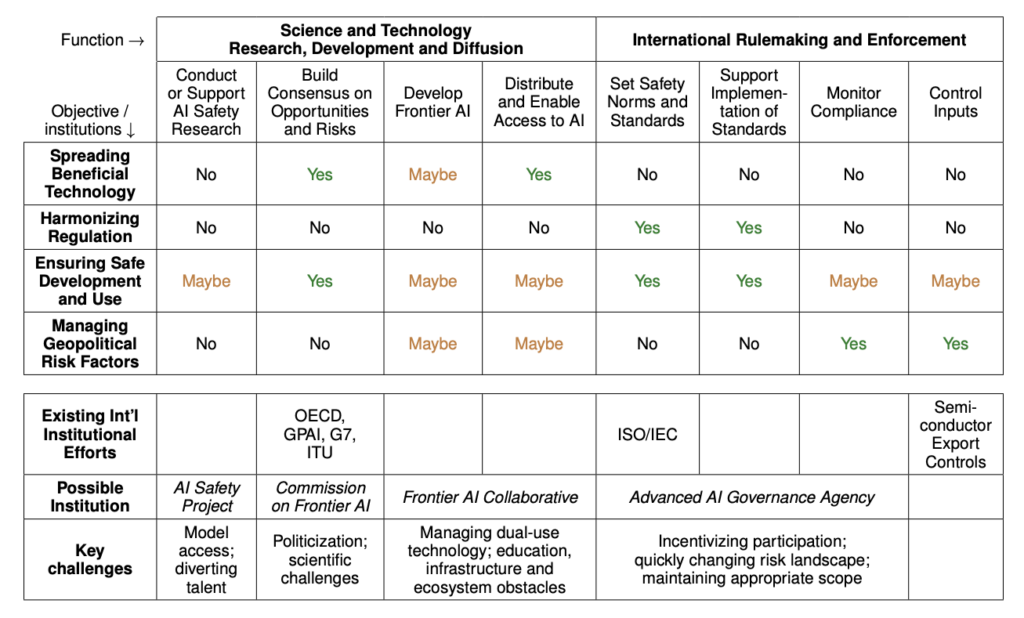

In a new paper, DeepMind explores models and functions of international institutions that could help manage the global impact of frontier AI development and ensure AI’s benefits reach all communities. The paper examines four complementary institutional models to support global coordination and governance functions:

- An intergovernmental Commission on Frontier AI could build international consensus on opportunities and risks from advanced AI and how to manage them. This would increase public awareness and understanding of AI prospects and issues, contribute to a scientifically informed account of AI use and risk mitigation, and be a source of expertise for policymakers.

- An intergovernmental or multi-stakeholder Advanced AI Governance Organisation could help to internationalise and align efforts to address global risks from advanced AI systems. It would set governance norms and standards and assist in their implementation. It may also perform compliance monitoring functions for a theoretical international governance regime.

- A Frontier AI Collaborative could promote access to advanced AI as an international public-private partnership. In doing so, it would help underserved societies benefit from cutting-edge AI technology and promote international access to AI technology for safety and governance objectives.

- An AI Safety Project could bring together leading researchers and engineers and provide them with access to computational resources and advanced AI models for research into technical mitigations of AI risks. This would promote AI safety research and development by increasing scale, resourcing, and coordination.

Policy discussions on frontier AI models are in the early stages. Still, there is increasing acknowledgement that we must seriously consider establishing a global governance framework to manage AI safety risks. Given the immense global opportunities and challenges presented by AI systems on the horizon, governments and other stakeholders need to engage in more discussions about the role of international institutions and how they can further AI governance and coordination.