Name it to tame it: Defining AI incidents and hazards

This work was made possible by the guidance of Marko Grobelnik, Mark Latonero, Irina Orssich, and Elham Tabassi, Co-Chairs of the OECD Expert Group on AI Incidents.

As artificial intelligence (AI) becomes more integral to our daily lives, understanding and mitigating the risks associated with its deployment is paramount. To observe, document, report and learn from problems and failures of AI systems, we need common terminology to describe them. Today, these events are broadly referred to as AI incidents. Recent global initiatives aim to standardise how AI incidents and hazards are reported and monitored, paving the way for better governance and safer AI implementations.

Defining AI incidents and related terminology

The first step is to standardise what constitutes an AI incident or an AI hazard. The OECD and the OECD.AI Network of Experts are leading the charge by proposing initial definitions for AI-related incidents and hazards, which are set out in the newly released paper Defining AI incidents and related terms.

The definitions are the result of more than 18 months of deliberations among AI experts from all stakeholder groups in the OECD.AI Expert Group on AI Incidents and policymakers in the OECD Working Party on AI Governance (AIGO). They were informed by Stocktaking for the development of an AI incident definition, which analysed incident-related terminology across industries to build the foundational knowledge base for developing an AI incident definition.

These definitions are crucial because they give regulators across jurisdictions a shared understanding and common points of reference. With these in place, regulators can work together to mitigate risks and hazards. This is known as interoperability. At the same time, the definitions are drafted to give jurisdictions the flexibility to determine the scope of AI incidents and hazards they wish to address.

AI incidents start with harm

Harm is a central concept in technical standards and regulations that address incidents, and definitions and taxonomies of harm are often context-specific. Incident definitions may focus on potential harms, actual harms or both. Defining harm and assessing its types, severity levels and other relevant dimensions (e.g., scope, geographic scale, quantifiability) is critical to identifying the incidents that lead, or might lead, to that harm. It is also essential for building an effective framework to address them.

Defining the types, severity and other dimensions of AI harms is a prerequisite for developing a definition of AI incidents. There are different types of harm (e.g., physical, psychological, economic) and different levels of severity (e.g., inconsequential hazards, damage to property, harm to health, impact on critical infrastructure, human deaths).

Some aspects of harm may be quantifiable, such as financial loss or the number of people affected, while others, such as reputational harm, are harder to quantify. Harm can be tangible, such as physical injury to a person or damage to property or the environment. Other harms, such as psychological harms, may be less tangible and harder to quantify. The scope and scale of harm are also important. This includes whether harm affects individuals, organisations, societies or the environment, and whether it occurs at local, national or international level. Other dimensions of harm include its potential to recur and its reversibility.

Complete and accurate definitions of AI incidents and hazards should capture all aspects of harm.

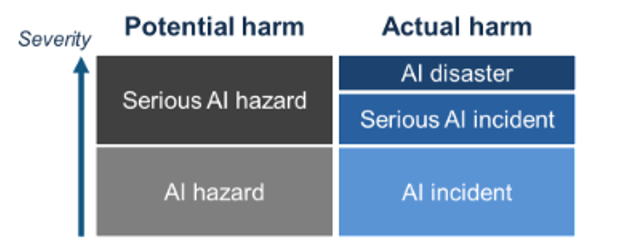

Actual vs potential harm

Potential harm is the risk or likelihood that harm or damage will occur. Risk is a function of the probability of an event occurring and the severity of its consequences. For example, the risk posed by a possible explosion at a chemical plant is greater if the plant is in a densely populated area, where the consequences of an explosion would be more severe. Risk management and AI incident reporting frameworks therefore need to identify and address the risks and hazards that can arise from developing and using AI systems. Potential harm is commonly associated with the concept of hazard.
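The paragraph above describes risk as a function of probability and severity. A common simplification, which is an assumption here and not a formula taken from the OECD paper, scores risk as the product of the two. The minimal sketch below applies that simplification to the chemical-plant example. It uses a hypothetical probability between 0 and 1 and a hypothetical 1-to-5 severity scale. The class and field names are illustrative only. With the same likelihood of an explosion, the densely populated site receives a higher score because the consequences would be more severe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A hypothetical risk scenario; names and scales are illustrative assumptions."""
    name: str
    probability: float  # assumed likelihood of the event, between 0 and 1
    severity: int       # assumed severity on a hypothetical 1 (minor) to 5 (catastrophic) scale

    def risk_score(self) -> float:
        # Simplifying assumption: risk = probability x severity.
        if not 0.0 <= self.probability <= 1.0:
            raise ValueError("probability must be between 0 and 1")
        if not 1 <= self.severity <= 5:
            raise ValueError("severity must be between 1 and 5")
        return self.probability * self.severity


# Same assumed likelihood of explosion, different assumed severity of consequences.
rural = Scenario("plant in sparsely populated area", probability=0.01, severity=2)
urban = Scenario("plant in densely populated area", probability=0.01, severity=5)

for s in (rural, urban):
    print(f"{s.name}: {s.risk_score():.2f}")
```

Real risk frameworks often use non-linear matrices or weight severity more heavily. The product is used here only because it is the simplest function that reflects both factors.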

Actual harm is a risk that has materialised into harm. Definitions of actual harm in standards and regulations depend significantly on context, but they generally focus on physical injury or damage to health, property or the environment. Actual harm is often associated with the concept of an incident.

Core definitions

An event where the development or use of an AI system results in actual harm is termed an “AI incident”, while an event where the development or use of an AI system is potentially harmful is termed an “AI hazard”. The OECD’s proposed definitions are as follows:

“An AI incident is an event, circumstance or series of events where the development, use or malfunction of one or more AI systems directly or indirectly leads to any of the following harms:

(a) injury or harm to the health of a person or groups of people;

(b) disruption of the management and operation of critical infrastructure;

(c) violations of human rights or a breach of obligations under the applicable law intended to protect fundamental, labour and intellectual property rights;

(d) harm to property, communities or the environment.”

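“An AI hazard is an event, circumstance or series of events where the development, use or malfunction of one or more AI systems could plausibly lead to an AI incident, i.e., any of the following harms:

(a) injury or harm to the health of a person or groups of people;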

(b) disruption of the management and operation of critical infrastructure;

(c) violations to human rights or a breach of obligations under the applicable law intended to protect fundamental, labour and intellectual property rights;

(d) harm to property, communities or the environment.”
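The distinction between the two definitions can be expressed as a simple classification rule. An event is an AI incident when one of harms (a) to (d) has actually occurred. It is an AI hazard when the event could only plausibly lead to one of those harms. The minimal sketch below shows this rule as a hypothetical data model, not an OECD reporting schema. The class names, the field names and the shortened harm labels are all assumptions. In practice, deciding whether a harm has occurred or is plausible requires human judgement.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Harm(Enum):
    # Shortened, hypothetical labels for harm categories (a) to (d).
    HEALTH = "(a) injury or harm to health"
    CRITICAL_INFRASTRUCTURE = "(b) disruption of critical infrastructure"
    RIGHTS = "(c) violations of rights or breach of legal obligations"
    PROPERTY_COMMUNITIES_ENVIRONMENT = "(d) harm to property, communities or environment"


class EventType(Enum):
    AI_INCIDENT = "AI incident"
    AI_HAZARD = "AI hazard"


@dataclass
class AIEvent:
    """Hypothetical record of an event involving one or more AI systems."""
    description: str
    actual_harms: set[Harm] = field(default_factory=set)     # harms that have occurred
    plausible_harms: set[Harm] = field(default_factory=set)  # harms that could plausibly occur


def classify(event: AIEvent) -> Optional[EventType]:
    # Any materialised harm from the list makes the event an incident.
    if event.actual_harms:
        return EventType.AI_INCIDENT
    # Otherwise, a plausible path to a listed harm makes it a hazard.
    if event.plausible_harms:
        return EventType.AI_HAZARD
    # Neither applies: outside the scope of both definitions.
    return None


# Hypothetical usage examples.
injury = AIEvent("automated system contributes to a workplace injury", actual_harms={Harm.HEALTH})
near_miss = AIEvent("model flaw found before deployment in a power grid",
                    plausible_harms={Harm.CRITICAL_INFRASTRUCTURE})
print(classify(injury), classify(near_miss))
```

Checking actual harms first means that an event is classified as an incident, not a hazard, once harm has materialised, even if it could also lead to further harms.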

The report also includes proposed definitions for terminology associated with incidents and hazards, such as serious AI incident, AI disaster and serious AI hazard. These proposed definitions aim to clarify what constitutes an AI incident, hazard, and associated terminology without being overly prescriptive.

The drive for a common reporting framework

These definitions will enable a unified system that allows consistent and comprehensive reporting and monitoring of AI incidents across jurisdictions and sectors. This is a foundation for tracking and analysing incidents and building an environment to anticipate and prevent them proactively. By understanding the types and frequencies of incidents, policymakers can craft more effective regulations and guidelines to prevent repeat and future occurrences.

The movement towards a common AI incident reporting framework is a significant step in global efforts to ensure the safety and reliability of AI systems. By standardising definitions and reporting mechanisms, the OECD is helping to better manage AI’s societal impacts.

Tracking real AI incidents can provide an evidence base to inform AI policy

The OECD developed a global AI Incidents Monitor (AIM) for two purposes. The first is to collect and structure AI incidents from publicly available sources in real time. The second is to inform the more conceptual work on developing an AI incident definition and reporting framework. AIM will help identify AI applications that may need regulatory attention, their impacts and the underlying causes of their failures. It will enable interactive comparison and analysis of AI risks that have materialised, or might materialise if no corrective action is taken. This can also help prevent incidents from recurring and provide appropriate evidence for policymaking and regulatory purposes.