How smart can education get? Very smart.

Machine learning and artificial intelligence (AI) have been put to use in scientific laboratories, out on farms, in radiology clinics, and in many other workplaces. But school classrooms have been slower to jump onto the smart bandwagon.

Before the pandemic, the OECD’s 2018 Teaching and Learning International Survey (TALIS) found that, on average across the OECD, only slightly more than half of lower secondary teachers “frequently” or “always” let students use ICT for projects or class work. The COVID-19 pandemic has changed all that: with remote schooling skills now under our belt, AI cannot be far behind. And like agriculture, medicine, policing, finance, law and so many other sectors, education will be upended by the data revolution. AI-powered education technology (EdTech) can turn the factory-modelled classrooms we inherited from the Industrial Revolution into places where learning is active, flexible, collaborative and individualised.

Learning analytics tracks each student’s progress by drawing on data about how they perform on homework assignments, pop quizzes, formal assessments and individual problems; the pace at which they learn and master new concepts; and how they conduct their self-regulated learning.

At the very minimum, these data give teachers insight into their students and help them plan their classes. But, like AI systems in self-driving cars, learning analytics can be employed with different levels of autonomy. For example, if a teacher is introducing the class to quadratic equations but a couple of students are already somewhat familiar with them, she/he could let those students work ahead with programmes like Mathia or Snappet. The teacher may allow the programme to select the next problem each student should tackle based on how they did on the last one. The programme can also give the student feedback, and it can reintroduce a question or problem the student learned earlier at the optimum interval to refresh their memory. Letting the brain “forget” something it has learned and then reminding it is called spacing, which cognitive science has shown to be a highly effective learning strategy.
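To make the idea of spacing concrete, here is a minimal sketch of an expanding-interval review scheduler. It is a hypothetical illustration, not how Mathia, Snappet or any other product schedules reviews: the one-day starting gap, the doubling rule and all names in the code are assumptions chosen for simplicity.

```python
from dataclasses import dataclass

# Hypothetical parameters (assumptions, not any product's settings):
# the review gap starts at 1 day and doubles after each correct answer.
FIRST_INTERVAL_DAYS = 1
GROWTH_FACTOR = 2


@dataclass
class ReviewItem:
    problem_id: str
    interval_days: int = FIRST_INTERVAL_DAYS
    next_review_day: int = 0


def record_attempt(item: ReviewItem, today: int, correct: bool) -> ReviewItem:
    """Schedule the next review of a problem after a student attempts it."""
    if correct:
        # Remembered: wait longer before the next reminder, letting the
        # student "forget" a little so that the review is more effortful.
        item.interval_days *= GROWTH_FACTOR
    else:
        # Forgotten: restart the spacing with a short gap.
        item.interval_days = FIRST_INTERVAL_DAYS
    item.next_review_day = today + item.interval_days
    return item


def due_for_review(items: list[ReviewItem], today: int) -> list[str]:
    """Return the problems whose scheduled review day has arrived."""
    return [i.problem_id for i in items if i.next_review_day <= today]


if __name__ == "__main__":
    item = ReviewItem("factor x^2 + 5x + 6")
    record_attempt(item, today=0, correct=True)   # next review on day 2
    record_attempt(item, today=2, correct=True)   # next review on day 6
    print(item.next_review_day)                   # 6
    print(due_for_review([item], today=5))        # []
    print(due_for_review([item], today=6))        # ['factor x^2 + 5x + 6']
```

Each successful recall pushes the next reminder further out, while a miss brings the problem back quickly; real systems typically tune these intervals from data rather than using a fixed doubling rule.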

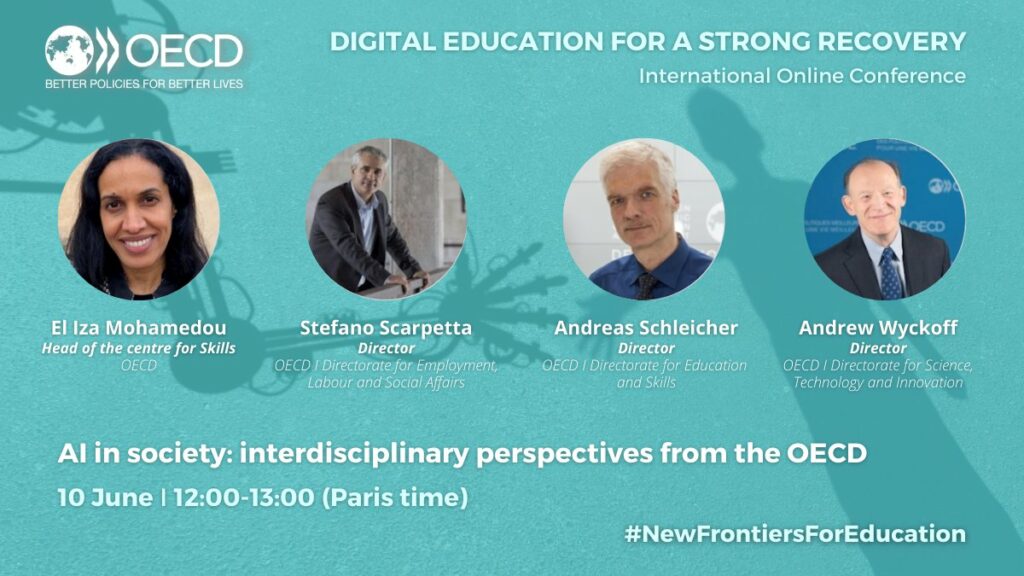


Homework software helps students to learn more

In Maine, in the United States, a randomised controlled trial compared schools that used the mathematics software ASSISTments for homework with schools that did not. The software sets homework assignments, gives students immediate feedback and prepares reports for teachers on how students do on daily assignments. Students in the schools that used ASSISTments learned significantly more than their peers in the control schools, especially those who had been weaker in mathematics.

AI to determine student needs, plan learning trajectories and detect learning anomalies

Analytics software can even plot a student’s learning trajectory, based on what the data indicate the student needs. It may determine, for instance, that a student is ready for polynomials now that she/he has digested quadratic equations. This is a radical departure from one-size-fits-all teaching and learning, where everyone is expected to progress at the same pace, leaving some students bored and others behind.
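One simple way to decide that a student is “ready for polynomials” is to check mastery estimates against a map of prerequisites. The sketch below is hypothetical: the topic map, the 80% mastery cut-off and the function name are assumptions for illustration, and real analytics software may model readiness quite differently.

```python
# Hypothetical prerequisite map: each topic lists the topics that must be
# mastered first. The ordering follows the article's example only.
PREREQUISITES: dict[str, list[str]] = {
    "linear equations": [],
    "quadratic equations": ["linear equations"],
    "polynomials": ["quadratic equations"],
    "systems of equations": ["linear equations"],
}

# Assumed cut-off: a topic counts as mastered at an estimated 0.8 or more.
MASTERY_THRESHOLD = 0.8


def ready_topics(mastery: dict[str, float]) -> list[str]:
    """List topics not yet mastered whose prerequisites are all mastered."""
    def mastered(topic: str) -> bool:
        # Topics with no estimate are treated as not mastered.
        return mastery.get(topic, 0.0) >= MASTERY_THRESHOLD

    return [
        topic
        for topic, prereqs in PREREQUISITES.items()
        if not mastered(topic) and all(mastered(p) for p in prereqs)
    ]


if __name__ == "__main__":
    # Illustrative mastery estimates; in practice these would come from the
    # student's homework, quiz and assessment data.
    student = {"linear equations": 0.95, "quadratic equations": 0.85}
    print(ready_topics(student))  # ['polynomials', 'systems of equations']
```

Because each student’s estimates differ, the same map yields a different next step for each learner, which is what lets the trajectory depart from a single class-wide pace.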

Most education systems, unfortunately, have a legacy of left-behind students, some of them held back by developmental challenges such as dyslexia, dysgraphia and dyscalculia that have long gone unnoticed. Through data analysis, AI technology can detect early on whether a child may have learning anomalies. It can flag these for teachers and parents and help them diagnose what might be causing them.

Besides enabling earlier detection and diagnosis of developmental disabilities, current technologies have great potential for students with special needs in the classroom. ECHOES, for example, is an intelligent software programme for children with autism. Studies have shown promising results for children’s social interaction when they use it together with a person who knows the child well, such as a teacher or teaching assistant. Using technology in tandem with humans is a crucial point here, not just for students with special needs but for all students. We will come back to this.

Publication: The OECD Digital Education Outlook 2021

The potential of social robots in education

To get back to quadratic equations: for students who haven’t quite grasped, for example, how to move all the unknown terms in a quadratic equation to the left side, the teacher can focus on that step while the rest of the class moves on with some AI direction. Alternatively, the teacher could call on social robots for tutoring support. Robots have proven to be effective individual tutors because students seem to develop a rapport with them that spurs learning. Robots also work well as fun “buddy” learners who learn alongside students. Children in particular profit from teaching robots something the robot “doesn’t understand”, which cements their own mastery of a new concept. Robot tutors are also well suited to language classes because they can hold conversations with students and may be able to provide a more authentic accent than a teacher, who is often not a native speaker. And, again, at the very minimum, robots can take over the administrative drudgery that teachers have to do, freeing teachers to do what best accelerates and deepens learning: coach, mentor, inspire.

“Classware” to measure engagement

Supported but not supplanted by AI, teachers have the tools to reinvent the way we teach and learn. With AI marking students’ homework and giving feedback, teachers will already know how everyone is doing when they arrive for class, which saves time. They may also have an easy-to-read data dashboard that helps them orchestrate class activities in a well-paced and dynamic way. This is called “classware”. This smart technology draws on data from log files and from sensors that capture things like posture, eye movements, heart rate, body temperature and facial expressions to infer who is interested and engaged and who is lost or dozing off. Such real-time data can tell the teacher it is time to change pace or perhaps to break the class up into smaller groups. Classware can also help with pacing and timing by signalling to the teacher when enough of the class has grasped a lesson to move on, or when more work is needed. It can give the teacher important spatial information too, for example whether she/he spends more time on one side of the room than the other. By making visible what is otherwise invisible, especially to novice teachers with little classroom experience, classware helps teachers design and orchestrate compelling learning experiences.
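In its simplest form, the “enough of the class has grasped a lesson” signal could be a threshold rule. The sketch below is hypothetical: it uses only whether each student answered a lesson’s check questions correctly (not the sensor data classware may also draw on), and the 80% cut-off and all names are assumptions for illustration.

```python
from enum import Enum


class PacingSignal(Enum):
    MOVE_ON = "move on"
    MORE_PRACTICE = "more practice needed"


# Assumed rule of thumb, not a published standard: move on once at least
# 80% of the class has answered the lesson's check questions correctly.
CLASS_MASTERY_SHARE = 0.8


def pacing_signal(student_correct: dict[str, bool]) -> PacingSignal:
    """Suggest whether the class is ready to move on."""
    if not student_correct:
        return PacingSignal.MORE_PRACTICE  # no evidence yet
    # True counts as 1, so the sum is the number of students who got it right.
    share = sum(student_correct.values()) / len(student_correct)
    if share >= CLASS_MASTERY_SHARE:
        return PacingSignal.MOVE_ON
    return PacingSignal.MORE_PRACTICE


def students_needing_help(student_correct: dict[str, bool]) -> list[str]:
    """Names to group together for extra work while others move ahead."""
    return sorted(name for name, ok in student_correct.items() if not ok)


if __name__ == "__main__":
    results = {"Ana": True, "Ben": True, "Chloe": True, "Dev": True, "Eli": False}
    print(pacing_signal(results).value)     # move on
    print(students_needing_help(results))   # ['Eli']
```

Returning the list of students who still need help alongside the signal reflects the option of breaking the class into smaller groups rather than holding everyone back.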

Pervasive measurement of engagement, feeding into intelligent strategies that enhance it, could become an essential feature of digital learning technologies. This would, of course, require these technologies to fully respect students’ privacy, for example through data anonymisation or deletion, and consent.

Game assessments

AI can also open the way to evaluating hard-to-measure skills like creativity and curiosity. While the technology is clearly useful in traditional assessments, specially designed game assessments are worth keeping an eye on. Whether they take the form of traditional video games or fully simulated environments in virtual or mixed reality, game assessments can be designed to capture not just “correct” responses but also how students think their way through the decisions they make. Although they are expensive and therefore difficult to scale, game assessments could advance “out-of-the-box” thinking.

EdTech promises an abundance of gains for education, but it comes with some important caveats. The first, already alluded to, is that technology works best for learning when used hand-in-hand with humans. For now, humans are still better than machines at interpreting what a smile, a slump or idle drumming on the desk says about how a student is feeling. This is especially true for students with developmental issues. Frustration, for example, is a tricky emotion for a machine to decipher. When a student is frowning, face scrunched up, is it because they are struggling to understand the lesson, or because the lesson is just slightly beyond their grasp for the moment and they are concentrating hard, in other words fully engaged? It is a difficult call for AI. Too much frustration without the pay-off of finally “getting” something can be an obstacle; just enough frustration followed by “getting” it, on the other hand, represents a hard-won accomplishment. Humans may be better at telling the difference.

Inclusive design and accessibility

Human involvement matters in design, too: teachers, students and parents, and especially people with special needs and those who support them, need to work together with designers to build education technology that is useful, intuitive and affordable. The technology should be usable on as many commercial platforms and devices as possible, and open-source whenever possible. Governments can foster a healthy ecosystem for such public-private partnerships and help scale up and disseminate the fruits of their labour. One more point: no matter how good it is, technology doesn’t just insert itself into teaching practices. Teachers and school principals need to be trained in how to use it in their different learning scenarios. This is an investment education ministries need to make.

The ever-present risk of algorithmic bias

Last but not least come some very sensitive considerations. Although most OECD countries have strong data protection safeguards in place, they are still grappling with how to regulate algorithms. Algorithms can be biased, though technical workarounds for this are increasingly available. What is really tricky is making automated decision-making in areas such as high-stakes exam results transparent and explainable, with recourse to human oversight when needed. The crisis in summer 2020 over the grades that England’s Office of Qualifications and Examinations Regulation (Ofqual) standardised by algorithm, in lieu of results from the A-level exams cancelled due to COVID-19, shows what is at stake. Fundamentally, algorithms affect people’s lives, so they should be designed in negotiation with stakeholders. What is striking about the Ofqual case is that the accuracy of the algorithm was not disputed; what drew criticism was its design parameters for predicting or adjusting grades and, above all, the opacity of the process.

Whether AI is used to determine which post-secondary institutions students should be admitted to or to predict whether a student is close to dropping out, the people concerned, or their representatives, should have a say in how these algorithms are designed. And when things go wrong, people should be able to understand how the machine made its decision and, when necessary, overrule it. Like everything worthwhile in society, this takes time, consultation and negotiation.