The argument for holistic AI audits

Algorithm auditing is a crucial cornerstone of AI risk management and an emerging discipline that focuses on assessing, mitigating, and ensuring algorithms’ safety, legality, and ethics. In essence, algorithm audits assess systems for risks, ensuring they meet legal requirements and adhere to best practices to minimise harm. Audits aim to provide high confidence in an AI system’s performance through independent and impartial evaluations of inherent risk factors. Auditors, free from conflicts of interest, examine AI systems for reliability, uncover hidden errors and discrepancies, identify system weaknesses, and offer recommendations.

When can audits be carried out?

Algorithm audits can be carried out throughout a system’s lifecycle, covering parts or the entire system. For example, audits can investigate the data associated with the model, including the inputs, outputs, and training data. They can also examine the model, including its objectives, parameters, and hyperparameters, or scrutinise the system’s development, including design decisions, documentation, and library usage. With multiple components of AI systems eligible for audits, it is never too early to audit a system. Auditors can even give recommendations during the design and development phases before deployment.

The four key stages of algorithm audits

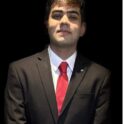

Generally, four key stages are involved in algorithm auditing: triage, assessment, mitigation, and assurance, as shown in the figure above.

First, systems must be triaged (Triage Stage) to identify their risks, establish the context in which they are used, and evaluate the quality of their documentation. With a comprehensive understanding of the system, auditors can then assess risks (Assessment Stage). Combining qualitative and quantitative assessments, audits can examine one or more types of risk, such as bias, robustness, privacy, explainability, and efficacy.

For example, when investigating bias, auditors can use various quantitative assessments depending on how much access they have to the system. These assessments may use appropriate metrics to compare outcomes among different subgroups with respect to protected characteristics. Additionally, they can look at whether a model’s predictors are correlated with subgroup membership, evaluate the representativeness of the training data, or scrutinise the presence of bias within the training data. Complementing these, qualitative assessments can gather information about the processes used to collect training data and to determine relevant predictors, and about efforts made to increase the system’s accessibility. Together, this information can determine whether the system is, or is likely to be, biased against particular groups in terms of unequal treatment or outcomes.
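As a minimal sketch of two of the quantitative checks mentioned above, the snippet below compares favourable-outcome rates across subgroups and measures how strongly a single predictor is associated with subgroup membership. The records, group labels, and function names are hypothetical and chosen only for illustration; a real audit would select metrics suited to the system and its legal context.

```python
from collections import defaultdict
from statistics import mean

def outcome_rates(records):
    """Share of favourable outcomes (outcome == 1) per subgroup."""
    by_group = defaultdict(list)
    for group, outcome, _predictor in records:
        by_group[group].append(outcome)
    return {group: mean(outcomes) for group, outcomes in by_group.items()}

def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

# Hypothetical audit sample: (subgroup, outcome, binary predictor value).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 1),
    ("B", 1, 0), ("B", 0, 0), ("B", 0, 0), ("B", 0, 1),
]

rates = outcome_rates(records)
rate_gap = max(rates.values()) - min(rates.values())

# Association between the predictor and membership of subgroup "A".
predictor = [p for _, _, p in records]
in_group_a = [1 if g == "A" else 0 for g, _, _ in records]
proxy_strength = pearson(predictor, in_group_a)

print(rates, rate_gap, proxy_strength)
```

A large gap between subgroup rates, or a predictor that tracks subgroup membership closely, would not prove bias on its own, but it would tell the auditor where to look next.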

After risks have been identified in the assessment stage, the audit moves into the Mitigation Stage, where a series of technical and procedural interventions can be made to prevent such risks or reduce their prevalence. Revisiting the bias example, when the model produces unequal outcomes for different groups, the underlying root causes need to be investigated thoroughly. Depending on the level of system access and the audit’s depth, an examination of the predictors may reveal associations with particular protected characteristics, which are likely to have contributed to the biased outcomes.

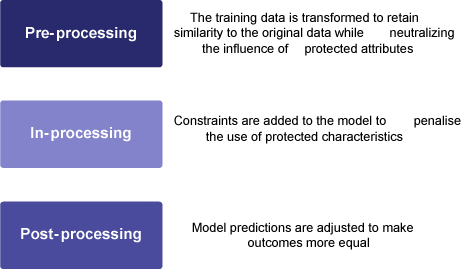

In such cases, actors can implement corrective steps, such as removing or reducing the influence of these predictors. They can also introduce additional parameters and constraints into the model to alter interactions. If the training data is unrepresentative, the model could be retrained on a more balanced sample. If the training data exhibits bias, several classic computer science approaches can transform the training data to reduce the likelihood of bias in the model (see Figure 2). Non-technical steps may also be required, including comprehensive documentation of how training data was collected, model selection criteria, and feature and parameter decisions, as well as robust system monitoring mechanisms.
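The text does not say which data transformations it has in mind. One commonly cited pre-processing option is reweighing (Kamiran and Calders), which weights each training example so that group membership and label become statistically independent in the weighted data. The sketch below assumes a single protected attribute and a binary label; the data and function names are hypothetical.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight for each (group, label) pair: P(group) * P(label) / P(group, label)."""
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for (g, y) in pair_counts
    }

def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(weights[(g, y)] for g, y in zip(groups, labels) if g == group)
    positive = sum(
        weights[(g, y)] for g, y in zip(groups, labels) if g == group and y == 1
    )
    return positive / total

# Hypothetical training labels: group A receives positive labels far more often.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "A")
rate_b = weighted_positive_rate(groups, labels, weights, "B")
print(weights, rate_a, rate_b)
```

In this toy data the unweighted positive rates are 0.75 and 0.25, while the weighted rates are equal. The weights would then be passed to a learner that supports per-sample weights; whether this is appropriate depends on the system and the applicable law.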

After risk mitigation comes the Assurance phase, where the system is declared to conform to predetermined standards, practices, and regulations. Auditors establish expectations for ongoing monitoring, accountability, and liability mechanisms.

More laws are requiring algorithm audits

AI is increasingly being adopted across industries. Fuelled by high-profile harms and recent lawsuits, policymakers worldwide are proposing laws to regulate AI use and make it safer and fairer. Each law has its own approach, but two essential laws, New York City Local Law 144 and the European Union’s (EU) Digital Services Act, require independent audits.

New York City Local Law 144

Local Law 144 targets Automated Employment Decision Tools (AEDTs) used to screen candidates for employment or employees for promotion in New York City, with tools such as AI-driven video interviews and image-based assessments. Such tools are under the scope of the law if they use machine learning, statistical modelling, data analytics, or AI to produce a “simplified output to substantially assist or replace human decision-making”. In addition to notification and transparency requirements, these tools must undergo annual independent bias audits. Here, bias must be examined using metrics defined by the Department of Consumer and Worker Protection that compare success rates based on sex/gender and race/ethnicity, at a minimum, for both standalone and intersectional groups. This audit can, therefore, be said to be an outcome audit, a type of technical audit that focuses on the outputs of a system and their implications for users.
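A rough sketch of this kind of calculation is shown below. It assumes the metric is an impact ratio, computed as a category's selection rate divided by the selection rate of the most selected category; that is our reading of the Department's rules and should be checked against the current text. The candidate counts and category labels are hypothetical.

```python
from collections import defaultdict

def selection_rates(candidates, key):
    """Selection rate per category, where `key` maps a candidate to a category."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for candidate in candidates:
        category = key(candidate)
        totals[category] += 1
        selected[category] += candidate["selected"]
    return {category: selected[category] / totals[category] for category in totals}

def impact_ratios(rates):
    """Each category's rate divided by the highest category rate."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any category")
    return {category: rate / best for category, rate in rates.items()}

def make_candidates(sex, ethnicity, total, n_selected):
    """Build hypothetical candidate records for one intersectional category."""
    return [
        {"sex": sex, "ethnicity": ethnicity, "selected": 1 if i < n_selected else 0}
        for i in range(total)
    ]

# Hypothetical applicant pool; "X" and "Y" are placeholder ethnicity labels.
candidates = (
    make_candidates("F", "X", 10, 4)
    + make_candidates("F", "Y", 10, 5)
    + make_candidates("M", "X", 10, 5)
    + make_candidates("M", "Y", 10, 8)
)

by_sex = impact_ratios(selection_rates(candidates, lambda c: c["sex"]))
intersectional = impact_ratios(
    selection_rates(candidates, lambda c: (c["sex"], c["ethnicity"]))
)
print(by_sex)
print(intersectional)
```

Note that the intersectional ratios can reveal a disparity that the standalone categories understate, which is why the law asks for both.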

Digital Services Act

The European Union’s Digital Services Act (DSA) came into effect in November 2022 and will be fully applicable starting February 2024. Taking a different approach, it targets hosting services, marketplaces, and online platforms offering services in the EU. It aims to foster safe online environments for users, with a particular focus on platforms designated as Very Large Online Platforms (VLOPs) and Very Large Online Search Engines (VLOSEs), meaning those that have over 45 million average monthly users in the EU. They include the likes of Meta, Twitter (now X), and Google. Among requirements mandating risk management of a platform’s content moderation systems, user appeals mechanisms, and recommendation systems, the DSA requires all VLOPs and VLOSEs to undergo annual independent audits of the systemic risks prevalent on their platforms.

Audits under the DSA must be carried out to validate due diligence obligations set out in Chapter III of the text, which involve assessing a platform’s transparency, risk assessment and accountability efforts, as well as their commitments to voluntary codes of conduct and implementation of crisis protocols.

Rules for conducting independent audits under the DSA were first proposed on 6 May 2023, when the European Commission, pursuant to Article 37 of the legislation, issued a draft delegated regulation. After an extensive public consultation process, which brought to light gaps in the level of assurance and the need for uniform criteria for conducting such audits, the final iteration of the regulation was published on 20 October 2023. Addressing these gaps, the Rules are the first to provide a standardised framework to guide VLOPs, VLOSEs, and auditors on some of the modalities and reporting templates that could be adopted for these audits. In contrast to the audits required by Local Law 144, DSA audits can be considered governance audits because they evaluate internal governance practices and risk frameworks.

Assessing technical and governance audit approaches

The auditing approaches of NYC Local Law 144 and the DSA are distinct, with the former taking a technical approach and the latter a governance approach focused on procedures. Although both types of audit fulfil specific objectives and can certainly help ensure algorithmic systems’ safety, fairness, and legal compliance, it is crucial to realise that both approaches have limitations.

Outcome audits in the context of the NYC Local Law, while helpful in ensuring compliance with equal opportunity laws, fall short when it comes to examining the processes used to generate these outcomes. For example, an employer may deploy an algorithm that initially yields unequal recommendations for various sub-groups but then adjust the outcomes to make them appear more balanced.

If this post hoc adjustment proves effective, an outcome audit will not raise concerns. However, changing scores based on protected characteristics is illegal under the federal Civil Rights Act of 1991. Unless the auditor takes additional steps to understand how these outcomes were generated, or performs a governance audit, the audit may not reveal that such illegal activity took place, because the outcomes would appear fair at face value.
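This blind spot can be illustrated with a small hypothetical: a scoring tool whose raw scores differ by group, followed by a post hoc step that applies group-specific cut-offs. All scores, cut-offs, and names below are invented for illustration, and the process check shown is only one simple signal an auditor might look for.

```python
def selection_rate(scores, cutoff):
    """Share of candidates whose score meets the cut-off."""
    return sum(score >= cutoff for score in scores) / len(scores)

def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Hypothetical raw scores produced by the tool for two groups.
raw_scores = {
    "group_a": [82, 75, 68, 60, 55, 40],
    "group_b": [70, 62, 51, 45, 38, 30],
}

# Process 1: one cut-off applied to everyone.
single_cutoff = {"group_a": 60, "group_b": 60}
# Process 2: cut-offs adjusted per group after the fact to balance outcomes.
adjusted_cutoffs = {"group_a": 60, "group_b": 45}

def audit(cutoffs):
    rates = {g: selection_rate(raw_scores[g], cutoffs[g]) for g in raw_scores}
    return {
        # What an outcome audit sees.
        "impact_ratio": impact_ratio(rates),
        # What only a look at the process reveals.
        "group_specific_rule": len(set(cutoffs.values())) > 1,
    }

before = audit(single_cutoff)
after = audit(adjusted_cutoffs)
print(before)
print(after)
```

The adjusted process yields a perfect outcome metric, yet the only evidence of the group-dependent rule sits in the process itself, which an outcome audit never inspects.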

Audits under the DSA, on the other hand, focus more on the controls, frameworks, and procedures in place to minimise risks and prevent harms. However, they are not required to examine the system’s outputs in the same way as Local Law 144, making it difficult to determine the relative efficacy of these procedures in reducing and mitigating platform risks without conducting a technical audit. As such, the lack of an outcome audit here could fail to flag well-intentioned but ultimately ineffective safeguards. Further, while the nature and quantum of systemic risks vary across time periods and platforms, the lack of fit-for-purpose baselines that could be enabled through such audits makes it challenging to judge relative improvements in a platform’s safety operations objectively.

Comprehensive audits offer the best protection

More laws in the US propose similar requirements to Local Law 144, so it is essential to recognise that while outcome audits are certainly a step in the right direction towards fair, safe, and accountable algorithmic systems, they alone are not adequate to minimise the risks of AI fully. Similarly, as algorithm audits gain prominence in online safety governance, especially under regulations like the DSA, they will need to evolve to assess incremental enhancements in a platform’s safety efforts thoroughly.

Therefore, the most effective audits would combine governance and technical audits to examine both the process and the outcomes of an algorithmic system. This would involve evaluating the adequacy of implemented controls and procedures, scrutinising relative improvements in system results, and assessing the system’s compliance with broader legal frameworks. By adopting holistic approaches, deployers of AI systems can gain a more thorough understanding of risks and of the efficacy of corrective measures. The value of these audits and the associated feedback would be maximised for systems with white- or glass-box access levels, where auditors can obtain documentation and information about model selection, validation, development, and security evaluation relevant to the type of audit being carried out.

Comprehensive audits establish precise accountability mechanisms if harm occurs, which becomes particularly crucial in cases of ambiguous liability, such as when a system’s deployer is not its developer. This would also be essential for compliance with regulations like the EU’s proposed AI Liability Directive, which is intended to make it easier to claim damages caused by algorithmic technologies by updating civil liability rules to hold enterprises accountable for the tools they develop and deploy.