Leveraging AI, big data analytics and people to fight untruths online

Lies, misleading information, conspiracy theories, and propaganda have existed since the serpent tempted Adam and Eve to take that fateful bite of the apple. What has changed the dynamic is the Internet, which makes producing and disseminating the collection of untruths that exist today much easier and faster.

Stopping the creators and spreaders of untruths online will play an important role in reducing political polarisation, building back trust in democratic institutions, promoting public health, and improving the well-being of people and society more generally. To do so, we must leverage the power of AI, big data analytics, and people in smart and new ways.

The Internet’s rise as a key conduit for the spread of untruths

The global free flow of information was one of the early Internet’s main drivers. The pioneers of the Internet’s architecture viewed an open, interconnected, and decentralised Internet as a vehicle to bridge knowledge gaps worldwide and level the information playing field. But despite these idealistic beginnings, societies across the world are now confronted with a dystopian reality: the Internet has reshaped and amplified the ability to create and disseminate false and misleading information in ways that we are only just beginning to understand.

Inaccurate and misleading information online became a problem at the same time that the Internet became a major news source. Data show that the share of people in the European Union who read online newspapers and news magazines nearly doubled between 2010 and 2020, with the percentage of individuals aged 16 to 75 consuming news online rising to 65%. Likewise, research suggests that 86% of adult Americans access news on a digital device, and that digital devices are the preferred medium for half of all Americans.

Researchers from MIT Sloan found that untrue tweets were 70% more likely to be retweeted than those stating facts, and that false and misleading content reached its first 1,500 viewers faster than true content.

Echo chambers and filter bubbles contribute to the wide-scale circulation of untruths online. While these phenomena exist in the analogue world – for example, newspapers with a particular political inclination – it is easier and faster to spread information online. User-specific cookies that log individuals’ preferences, memberships in social networks and other links to people or groups help reinforce the type of content that people see. When users consistently interact with or share the specific types of content that reinforce existing biases, the echo chambers that confirm them emerge and grow.

Untruths come in many different shapes and sizes

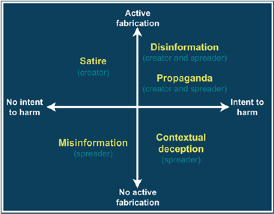

Often, terms like “misinformation” (false or misleading but without the intent to harm) and “disinformation” (deliberately fabricated untrue content designed to deceive) are used loosely in policy circles and the mainstream media. This creates confusion as to what untruths really are and what needs to be done to stop them. It all depends on the context, source, intent and purpose.

Establishing clear and shared definitions for different types of untruths can help policy makers design well-targeted policies and facilitate efforts to measure untruths and improve the evidence base. Our new paper provides definitions for the range of untruths that circulate online: 1) Disinformation, 2) Misinformation, 3) Contextual deception, 4) Propaganda, and 5) Satire.

These definitions support a typology of false and misleading content that can be differentiated along two axes: 1) the information spreader’s intent to cause harm and 2) the degree of fabrication by the information creator – altering photos, writing untrue texts and articles, creating synthetic videos, etc. (Figure 1). These distinctions are important because some types of false and misleading content are not deliberately created to deceive (e.g. satire) or they are not spread to intentionally inflict harm (e.g. misinformation).

From a policy perspective, it is important to differentiate the creators from the spreaders. There may be policies better suited to addressing false content creators, particularly considering that some spreaders disseminate falsehoods unknowingly (misinformation) or as part of societal or political commentary (satire). Before devising policy options, however, policy makers need to consider where and how false information spreads online.

AI, big data analytics and people are part of the problem and the solution

A particular characteristic of the digital age is that false information spreads through digital technologies that were created for entirely different purposes. For example, AI-based tools built to increase user engagement, monitor user interaction and deliver curated content now help false and inaccurate information to circulate widely. The use of algorithms and AI-based approaches to curate content makes it difficult to track the sources of untruths online, and even more complicated to monitor their flow or stop their spread. Sophisticated disinformation attacks, for instance, use bots, trolls, and cyborgs that are specifically aimed at the rapid dissemination of untruths. Efforts to stop these harmful practices require transparency about how these technologies work.

Existing approaches to reducing untruths online often depend on manual fact-checking, content moderation and takedown, and quick responses to attacks. All of this involves human intervention and allows for a finer-grained assessment of the degree of accuracy of content. Collaborations between independent, domestic fact-checking entities and platforms can also help identify untruths (e.g. DIGI in Australia and FactCheck Initiative Japan) and can be useful to ensure that cultural and linguistic considerations are taken into account.

While human understanding is essential to interpreting specific content in the context of cultural sensitivities and belief or value systems, monitoring online content in real time is a mammoth task that may not be entirely feasible without AI and big data analytics. The automation of certain content moderation functions and the development of technologies that embed them “by design” could considerably enhance the efficacy of techniques used to prevent the spread of untruths online. However, such approaches often provide less nuance on the degree of accuracy of the content (i.e. content is usually identified as either “true” or “false”).

Such approaches would also benefit from partnerships between local fact-checking entities and online platforms to ensure cultural and linguistic biases are addressed. Advanced technologies such as automated fact-checking or natural language processing and data mining can be leveraged to detect creators of inaccurate information and prevent sophisticated disinformation attacks. However, the spreaders of untruths have found ways to circumvent such approaches (e.g. by using images rather than words).

Innovative tools that use AI and big data analytics

In this regard, the transparent use of digital technologies by online platforms to identify and remove untrue content can improve the dissemination of accurate information. For example, Chequeabot is a bot that uses natural language processing and machine learning to identify claims made in the media and match them with existing fact checks. Chequeabot is notable in that it works in Spanish and was developed by professional fact-checkers.

Another example is Google’s collaboration with Full Fact to build AI-based tools to help fact-checkers verify claims made by key politicians. Full Fact groups the content by topic and matches it with similar claims from across the press, social networks and even radio with the help of speech-to-text technology. These tools help Full Fact process 1,000 times more content, detecting and clustering over 100,000 claims per day. Importantly, these tools give Full Fact’s fact-checkers more time to verify facts rather than identify which facts to check. Using a machine learning BERT-based model, the technology now works in four languages (English, French, Portuguese and Spanish).
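To make the idea of matching a new claim against existing fact checks more concrete, here is a minimal sketch in Python. It is an illustration only and not how Chequeabot or Full Fact work: it swaps their machine learning models for simple word overlap (Jaccard similarity), and the function names, the `threshold` value and the sample fact checks are all hypothetical.

```python
import re

def tokens(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"\w+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words the two sets have in common (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)

def match_claim(claim: str, fact_checks: list[dict], threshold: float = 0.5):
    """Return the most similar existing fact check, or None if none is close enough.

    The threshold is an assumed value chosen for illustration.
    """
    claim_tokens = tokens(claim)
    best, best_score = None, 0.0
    for fact_check in fact_checks:
        score = jaccard(claim_tokens, tokens(fact_check["claim"]))
        if score > best_score:
            best, best_score = fact_check, score
    return best if best_score >= threshold else None

# Hypothetical, made-up fact checks used only to exercise the function.
FACT_CHECKS = [
    {"claim": "Unemployment fell by half last year", "verdict": "false"},
    {"claim": "The city planted ten thousand trees", "verdict": "true"},
]

matched = match_claim("Unemployment fell by half in the last year", FACT_CHECKS)
unmatched = match_claim("Ministers announced a new rail line", FACT_CHECKS)
```

Word overlap is easy to evade by rephrasing a claim, which is one reason the tools described above rely on language models rather than on exact wording.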

With better understanding, AI and big data analytics can more effectively combat untruths

AI solutions can accurately detect many types of false information and recognise disinformation tactics deployed through bots and deepfakes. They are also more cost-effective because they reduce the time and human resources required for detecting and removing false content. However, the effective use of AI for countering untruths online depends on large volumes of data as well as supervised learning. Without these, AI tools run the risk of producing false positives and reinforcing or even creating biases.

At the same time, the technical limitations of AI and other technologies point toward the need to adopt a hybrid approach that integrates both human intervention and technological tools in fighting untruths online. Approaches that marry technology-oriented solutions and human judgement may be best suited to ensure both efficient identification of problematic content and potential takedown after careful human deliberation, taking into account all relevant principles, rights and laws such as those regarding fundamental human rights.
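One way to picture such a hybrid approach is a triage step in which software only prioritises content and people make every takedown decision. The sketch below is a simplified illustration under stated assumptions: the `Item` class, the `score` field (a hypothetical model estimate that the content is false) and the `threshold` value are invented for this example and do not describe any platform's actual system.

```python
from dataclasses import dataclass

@dataclass
class Item:
    item_id: str
    score: float  # hypothetical model estimate in [0, 1] that the content is false

def build_review_queue(items: list[Item], threshold: float = 0.6) -> list[Item]:
    """Return the items to send to human reviewers, most suspicious first.

    Nothing is removed automatically: the model only decides what
    people look at, and in which order.
    """
    flagged = [item for item in items if item.score >= threshold]
    return sorted(flagged, key=lambda item: item.score, reverse=True)

# Made-up items and scores used only to exercise the function.
items = [Item("a", 0.2), Item("b", 0.9), Item("c", 0.7)]
queue = build_review_queue(items)
queue_ids = [item.item_id for item in queue]
```

Lowering the threshold sends more content to reviewers and reduces the chance of missing false content, at the cost of a heavier workload; raising it does the opposite.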

A better understanding of a range of complex and intertwined issues about untruths online is urgently needed to develop best-practice policies for addressing this important problem. In the meantime, concrete steps can be taken to begin the fight.

Tackling untrue content online requires a multistakeholder approach in which people, firms, and governments all play active roles in identifying and removing inaccurate content. Everyone should be encouraged to exercise good judgment before sharing information online. While technology can take us a long way, people are still an important part of the solution.

This blog is based on the OECD Going Digital Toolkit note “Disentangling untruths online: Creators, spreaders and how to stop them” by Molly Lesher, Hanna Pawelec and Arpitha Desai.

Find out more about the state of digital development in OECD and partner economies on the OECD Going Digital Toolkit.