Soft law 2.0: Incorporating incentives and implementation mechanisms into the governance of artificial intelligence

AI raises numerous social, ethical, and safety concerns, and there are several approaches to managing the risks that accompany them. From a governance perspective, stakeholders can address these concerns through legislation and regulations, what we call “hard law.” Alternatively, they can turn to non-regulatory means known as “soft law”: programs that set substantive expectations but are not directly enforceable by governments.

Given the role that soft law plays in managing the risks related to AI, it is important to understand the trends in how society deploys these programs and to provide stakeholders with a readily available source of information to facilitate their development. To that effect, we undertook a global review of soft law dedicated to AI. Among our results, we find that for soft law to increase its credibility and effectiveness, it is fundamental for a program to include incentives so that stakeholders are motivated to implement and enforce it using a toolkit of organizational mechanisms. Before covering incentives and guidance, it is important to understand why soft law is well suited for AI.

Soft law can respond to the changing nature of AI

AI’s dynamic and rapidly evolving nature creates regulatory gaps or pacing issues that make it challenging to keep stable and relevant regulations in place. In these scenarios, soft law’s main advantage is flexibility: any organization can initiate or amend a program. Soft law programs can transcend the boundaries that typically limit hard law and, because they are non-binding, serve as a precursor, a complement, or a substitute to regulation.

In effect, soft law is not a solution to every problem that presents itself in the AI context, but its reliance on the alignment of incentives to produce expected behaviours, norms, and procedures makes it very useful, particularly on an international level where legislation is less of an option. The Organisation for Economic Co-operation and Development’s (OECD) AI principles are an important example of soft law. They represent one form of such programs, in which a multilateral organization creates high-level norms with the intention of setting baseline expectations for the management of AI.

The scope of global AI soft law programs

Our work examined how AI soft law programs are employed throughout the world, which led to identifying and documenting the characteristics that define them. More specifically, we looked for programs that: 1) conform to the definition of soft law; 2) address the governance or management of AI methods or applications; and 3) were published by December 31st, 2019.

We found 634 soft law programs dedicated to AI governance and used 14 variables to characterize them. We also labelled each program’s text with 93 themes and sub-themes (the database and report can be accessed through this link). This led us to group results in the following way.

Themes: Education and displacement are dominant subjects

Every program’s text was labelled into 15 themes and further subdivided into 78 sub-themes. The theme with the highest number of excerpts is education/displacement of labour with 815. This means that text related to this subject was found 815 times throughout the 634 soft law programs. Meanwhile, general transparency leads the sub-themes by appearing in 43% of programs. For example, our labelling of the OECD AI Principles found that its text contained information relevant to 10 themes and 18 sub-themes.

Geographic representation: AI soft law programs are prevalent in industrialized countries and regions

Over 60 countries and regions are represented in the database, ranging from Argentina to the Vatican. We learned that soft law tends to be created in a narrow band of high-income economies. In fact, about 54% of all programs originate either in the U.S., international organizations or in Europe. While many Central and East Asia and Pacific entities are found in the database, those in Sub-Saharan Africa (Kenya and Mauritius) and South Asia (India) appear the least. Because the OECD is a multilateral organization, its AI Principles were naturally catalogued as originating from an international entity.

Year of publication: AI soft law trends after 2015

Our process revealed that AI soft law programs began to emerge in 2001. However, between 2001 and 2014, only 20 programs were found. This positions AI soft law as a relatively new activity, since over 94% of the programs in our database were created between 2015 and 2019. Included in this majority are the OECD AI principles, which were published in 2019.

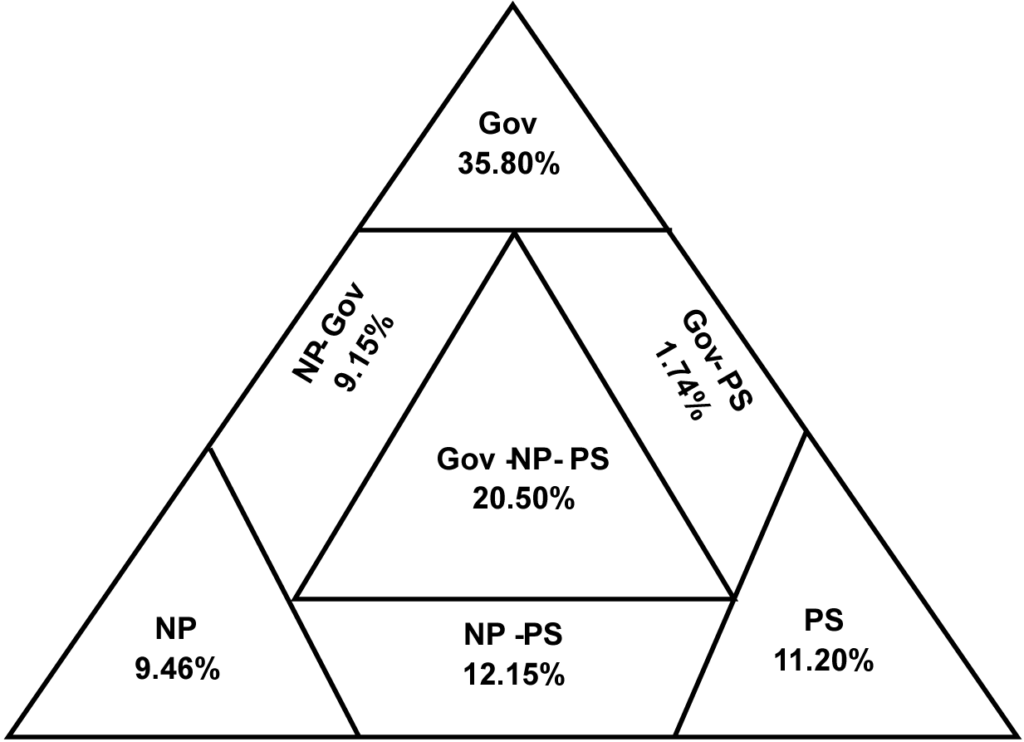

Stakeholders: Governments lead the development of programs

Soft law is not reserved for industry self-regulation. In fact, governments initiated approximately one-third of the programs in our sample. Public interest groups, think tanks, standard-setting organizations, and their alliances also played a significant role in AI governance, solidifying the idea that soft law is a viable alternative or complement to hard law. For instance, as an institution whose membership includes 38 countries on several continents, the OECD was catalogued as a multilateral government entity in our database.

Key: Gov = Government; PS = Private Sector; NP = Non-Profit

In our research, it was clear that stakeholders who initiate soft law vary from region to region. In the U.S., the private sector, non-profits, and government actors have comparable representation in the creation of soft law. Unlike the U.S., Europe’s governments and their alliances account for around 81% of combined participation, while the private sector is only marginally represented. Unsurprisingly, more than half of international efforts are led by multi-stakeholder groups jointly organized by governments, the private sector, and non-profits (e.g. standard-setting organizations, professional associations, among others).

| USA | Share | International | Share | Europe | Share |
| --- | --- | --- | --- | --- | --- |
| Gov | 23.93% | Gov, NP, PS | 50.48% | Gov | 51.43% |
| PS | 23.31% | Gov | 24.76% | Gov, NP, PS | 17.14% |
| NP, PS | 21.47% | NP, PS | 10.48% | Gov, NP | 12.86% |
| NP | 12.27% | NP | 7.62% | NP, PS | 11.43% |
| Gov, NP, PS | 9.20% | Gov, PS | 3.81% | NP | 5.71% |
| Gov, NP | 9.20% | Gov, NP | 1.90% | PS | 1.43% |
| Gov, PS | 0.61% | PS | 0.95% | Gov, PS | 0% |
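The figure of around 81% for Europe can be reproduced from the table above by summing the shares of every initiator combination that includes a government actor. The snippet below is a minimal sketch of that arithmetic; the dictionary layout and the function name `government_share` are illustrative assumptions and do not come from the underlying database.

```python
# Shares (in percent) of European programs by initiator combination,
# copied from the table above. The data layout is an illustrative assumption.
EUROPE_SHARES = {
    ("Gov",): 51.43,
    ("Gov", "NP", "PS"): 17.14,
    ("Gov", "NP"): 12.86,
    ("NP", "PS"): 11.43,
    ("NP",): 5.71,
    ("PS",): 1.43,
    ("Gov", "PS"): 0.0,
}


def government_share(shares):
    """Sum the shares of all combinations that include a government actor."""
    return sum(pct for actors, pct in shares.items() if "Gov" in actors)


if __name__ == "__main__":
    # Government alone, plus every alliance in which government takes part.
    print(f"{government_share(EUROPE_SHARES):.2f}%")
```

The same function could be applied to the U.S. and international columns to compare government involvement across regions.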

Enforcement: Mechanisms are key program elements

Soft law’s voluntary nature is one of its main challenges. As prior work in the field demonstrates, these programs require incentives to be effective. Once incentives exist, organizations are compelled to develop mechanisms to implement these programs.

We classified the mechanisms we found according to two dimensions. The first dimension identifies the origin of the resources necessary for a program’s operation. Internal mechanisms are dependent on the resources that are available within an organization. External mechanisms invite independent third parties to oversee a program’s implementation.

The second dimension is composed of levers and roles. Levers represent the toolkit of actions available to implement a program. This entails a range of activities such as: creating employee training programs, designing internal procedures, making commitments with public consequences, and leveraging one’s influence over third parties such as supply chain partners.

The counterpart to levers is roles, which reflect the importance of empowering individuals, arguably the most valuable resource of organizations, so that they execute the levers. These human resources can take the form of a champion who serves as a lightning rod for an issue, a unit that crystallizes its importance by assigning it a place in the organizational structure, an internal or external committee that advises or supervises a soft law program, or a deep commitment by the C-suite of the organization to its success.

| | Internal | External |
| --- | --- | --- |
| Levers | Training of employees; Design of procedures; Commitments; Indicators; Allocating a budget | Third-party verification; Leverage |
| Roles | Champions; Units; Committees; C-suite commitment | Committees |
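For readers who want to work with this classification programmatically, the two dimensions can be modelled as a pair of enumerations attached to each mechanism. This is only a sketch: the names `Origin`, `Kind`, `Mechanism`, and `by_quadrant` are hypothetical and are not taken from the database, while the entries themselves are copied from the table above.

```python
from dataclasses import dataclass
from enum import Enum


class Origin(Enum):
    """First dimension: where the resources come from (hypothetical name)."""
    INTERNAL = "internal"  # resources available within the organization
    EXTERNAL = "external"  # independent third parties


class Kind(Enum):
    """Second dimension: lever or role (hypothetical name)."""
    LEVER = "lever"  # an action available to implement a program
    ROLE = "role"    # a person or body empowered to execute the levers


@dataclass(frozen=True)
class Mechanism:
    name: str
    origin: Origin
    kind: Kind


# Entries copied from the table above.
MECHANISMS = [
    Mechanism("Training of employees", Origin.INTERNAL, Kind.LEVER),
    Mechanism("Design of procedures", Origin.INTERNAL, Kind.LEVER),
    Mechanism("Commitments", Origin.INTERNAL, Kind.LEVER),
    Mechanism("Indicators", Origin.INTERNAL, Kind.LEVER),
    Mechanism("Allocating a budget", Origin.INTERNAL, Kind.LEVER),
    Mechanism("Third-party verification", Origin.EXTERNAL, Kind.LEVER),
    Mechanism("Leverage", Origin.EXTERNAL, Kind.LEVER),
    Mechanism("Champions", Origin.INTERNAL, Kind.ROLE),
    Mechanism("Units", Origin.INTERNAL, Kind.ROLE),
    Mechanism("Committees", Origin.INTERNAL, Kind.ROLE),
    Mechanism("C-suite commitment", Origin.INTERNAL, Kind.ROLE),
    Mechanism("Committees", Origin.EXTERNAL, Kind.ROLE),
]


def by_quadrant(mechanisms, origin, kind):
    """Return the names of the mechanisms in one cell of the 2x2 grid."""
    return [m.name for m in mechanisms if m.origin is origin and m.kind is kind]


if __name__ == "__main__":
    print(by_quadrant(MECHANISMS, Origin.EXTERNAL, Kind.LEVER))
```

Keeping origin and kind as separate fields, rather than four hard-coded lists, makes it straightforward to filter on either dimension independently.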

Influence: Most programs focus on impacting third parties

In terms of organizational influence, programs are designed with one of two motivations. Either they are created solely to have an internal impact on the entity that adopted them or to affect both internal and external stakeholders.

The second scenario represents the vast majority of our database at 82%. In these cases, parties come up with their programs to inspire, encourage, and guide others. This is the case of the OECD AI principles, whose purpose is to influence how stakeholders of all ilks develop and deploy AI applications and methods.

Two examples of the remaining 18% of programs that were devised to be completely insular are Google’s AI principles and Deutsche Telekom’s guidelines. In both cases, they serve as norms that shape how employees manage AI and provide the public with insights as to what they can expect from these companies. Unlike the OECD AI Principles, they were not published to influence external parties.

Type of program: AI soft law tends to be created in the form of recommendations, strategies, and principles

Not all programs are created equal and, in this project, they were divided into seven categories. We found that 79% of programs are either principles or recommendations and strategies. It is important to note that this database offers one of the largest known compilations of principles in the literature with 158 examples.

| Type of Program | Number of Programs | Percentage of Total Programs |
| --- | --- | --- |
| Recommendation/Strategy | 344 | 54.26% |
| Principles | 158 | 24.92% |
| Standards | 60 | 9.46% |
| Professional Guidelines or Codes of Conduct | 23 | 3.63% |
| Partnerships | 21 | 3.31% |
| Certification or Voluntary Program | 16 | 2.52% |
| Voluntary moratorium or Ban | 12 | 1.89% |
| Grand Total | 634 | 100.00% |
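The percentages in the table, and the combined 79% share of principles and recommendations/strategies, follow directly from the program counts. The short sketch below recomputes them; the variable and function names (`PROGRAM_COUNTS`, `percentages`, `combined_share`) are illustrative assumptions rather than part of the published database.

```python
# Program counts per category, copied from the table above.
PROGRAM_COUNTS = {
    "Recommendation/Strategy": 344,
    "Principles": 158,
    "Standards": 60,
    "Professional Guidelines or Codes of Conduct": 23,
    "Partnerships": 21,
    "Certification or Voluntary Program": 16,
    "Voluntary moratorium or Ban": 12,
}


def percentages(counts):
    """Return each category's share of the total, rounded to two decimals."""
    total = sum(counts.values())
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


def combined_share(counts, categories):
    """Return the joint share (in percent) of the selected categories."""
    total = sum(counts.values())
    return round(100 * sum(counts[c] for c in categories) / total, 2)


if __name__ == "__main__":
    for name, pct in percentages(PROGRAM_COUNTS).items():
        print(f"{name}: {pct:.2f}%")
    print(combined_share(PROGRAM_COUNTS, ["Recommendation/Strategy", "Principles"]))
```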

Incentives and mechanisms are key components of soft law

For AI soft law to become an increasingly effective and credible tool, programs must integrate incentives and mechanisms to demonstrate stakeholder compliance. This means that enforcement and implementation have to be taken into account from the beginning. Otherwise, programs run the risk of never being implemented. Still worse, they could be perceived as “ethic-washing” props. We think that tackling this concern entails building “Soft Law 2.0” that integrates incentives and mechanisms into every program up-front.

Incentives are the reasons organizations are willing to comply with soft law. For the past several decades, the development of soft law for emerging technologies has taught us valuable lessons on how to persuade parties to participate. One incentive is to avoid inflexible hard law requirements that would otherwise kick in. An example of such behaviour is the creation of the gaming rating system by publishers to avoid government interference in their sector.

Organizations will also adopt soft law programs if compliance is a prerequisite for obtaining an in-demand resource such as insurance coverage, grant funding, or publication in leading journals. Impact on reputation is another strong incentive, which can be mediated by building in provisions for independent third-party certifications, rankings, labelling, or monitoring.

The future of soft law 2.0

If the right incentives exist, an organization will have to develop mechanisms for the implementation of a soft law program. Fortunately, our scoping review classified the mechanisms toolkit using four categories: internal or external and levers or roles. Soft Law 2.0 involves explicitly including these incentives and mechanisms in a program. Doing so is essential to make soft law more effective and credible. Nevertheless, more experimentation, assessment, and research can help determine which combination and design are optimal for promoting responsible AI.

Soft law is not a panacea or silver bullet. In particular, its use by industry is in a state of crisis where recent debacles involving major corporations have spawned a “techlash” and growing distrust in measures proposed by industry actors. Despite this, we need soft law now and for the immediate future to face concerns and risks related to AI.

We know that soft law cannot solve all of the governance issues around AI. However, by building in appropriate incentives and mechanisms for compliance, Soft Law 2.0 can be a more effective and credible tool for helping to fill the governance gaps and challenges that AI is presenting now and in the future.

The database and analysis described here are part of the Soft Law Governance of Artificial Intelligence program undertaken by the Center for Law, Science & Innovation at Arizona State University.