These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

A Frontier AI Risk Management Framework: Bridging the Gap Between Current AI Practices and Established Risk Management

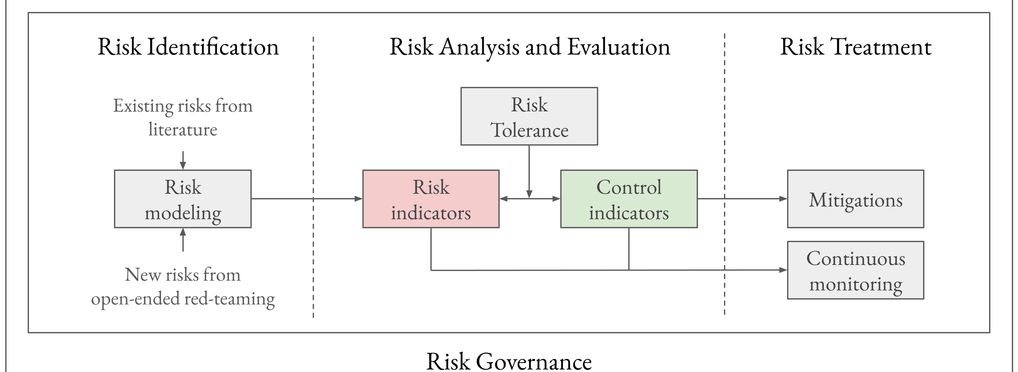

This tool provides a comprehensive risk management framework for frontier AI development, integrating established risk management principles with AI-specific practices. It combines four key components: risk identification through systematic methods, quantitative risk analysis, targeted risk treatment measures, and clear governance structures. The framework offers practical guidelines for implementing risk management throughout the AI system lifecycle, emphasising preparation before the final training run to minimise the burden that risk management work imposes.

The recent development of powerful AI systems has highlighted the need for robust risk management frameworks in the AI industry. Although companies have begun to implement safety frameworks, current approaches often lack the systematic rigour found in other high-risk industries. This paper presents a comprehensive risk management framework for the development of frontier AI that bridges this gap by integrating established risk management principles with emerging AI-specific practices.

The framework consists of four key components:

- risk identification (through literature review, open-ended red-teaming, and risk modelling)

- risk analysis and evaluation using quantitative metrics and clearly defined thresholds

- risk treatment through mitigation measures such as containment, deployment controls, and assurance processes

- risk governance establishing clear organisational structures and accountability

Drawing on best practices from mature industries such as aviation or nuclear power, while accounting for AI's unique challenges, this framework provides AI developers with actionable guidelines for implementing robust risk management. The paper details how each component should be implemented throughout the lifecycle of the AI system, from planning through deployment, and emphasises the importance and feasibility of conducting risk management work before the final training run, to minimise the burden that this work imposes.
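As a rough illustration of the risk analysis and evaluation component, the sketch below compares a measured risk indicator against predefined thresholds and returns a decision. It is a minimal example under stated assumptions, not the method defined in the paper: the names `RiskThreshold`, `Decision` and `evaluate`, the indicator, and all numeric values are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"
    MITIGATE = "apply mitigations before proceeding"
    HALT = "halt until risk is reduced"


@dataclass(frozen=True)
class RiskThreshold:
    """Hypothetical threshold pair for one risk indicator (not from the paper)."""
    name: str
    mitigate_at: float  # indicator value at which mitigations become mandatory
    halt_at: float      # indicator value at which work pauses


def evaluate(indicator_value: float, threshold: RiskThreshold) -> Decision:
    """Map a measured indicator value to a decision using fixed thresholds."""
    if indicator_value >= threshold.halt_at:
        return Decision.HALT
    if indicator_value >= threshold.mitigate_at:
        return Decision.MITIGATE
    return Decision.PROCEED


# Illustrative numbers only: a made-up evaluation score in [0, 1].
cyber = RiskThreshold(name="cyber-offence eval score", mitigate_at=0.4, halt_at=0.7)
for score in (0.25, 0.55, 0.80):
    print(f"{cyber.name}: {score:.2f} -> {evaluate(score, cyber).value}")
```

Fixing the thresholds before any measurement is taken is the point of the design: the decision then follows mechanically from the indicator rather than being negotiated after results are known.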

About the tool

Tags:

- ai risks

- ai risk management

- safety

- red-teaming

Partnership on AI