These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

AI Inherent Risk Scale (AIIRS)

The AI Inherent Risk Scale (AIIRS) was developed in response to the rapid expansion of generative artificial intelligence (GenAI) across teaching, learning, research, and professional practice. While existing governance approaches address important issues such as data security, privacy, ethics, and misconduct, they do not adequately account for a distinct class of risk that arises when tasks depend on AI-generated information that may be incomplete, inaccurate, or difficult to verify. AIIRS addresses this gap by focusing on the inherent characteristics of tasks that use GenAI, rather than on user intent, system performance, or downstream policy compliance.

The core objective of AIIRS is to provide a structured, consistent, and defensible method for identifying and comparing the inherent risk associated with GenAI-assisted tasks. It is explicitly not a permissibility framework. It does not determine whether GenAI should be used. Instead, it establishes the level of inherent risk that may need to be actively managed through redesign, safeguards, oversight, or exclusion of AI use.

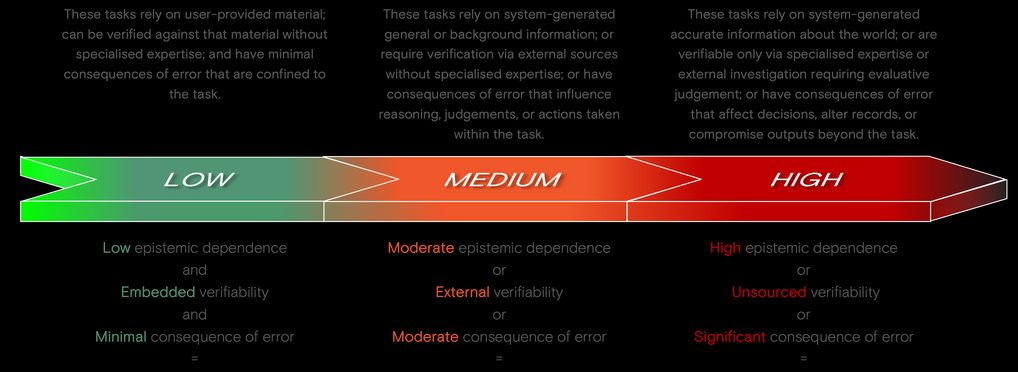

AIIRS classifies tasks into three risk bands—LOW, MEDIUM, and HIGH—by evaluating them against three evidence-informed criteria:

- Epistemic dependence – the extent to which a task requires the system’s representations of the world to be correct in order for the outcome to be usable.

- Verifiability – the basis on which the correctness of AI output can be checked, distinguishing embedded, external, and unsourced verification pathways.

- Consequences of error – the degree to which incorrect or misleading outputs affect decisions, records, or outcomes, particularly where impacts extend beyond the task itself.

These criteria capture how tasks rely on GenAI, how easily outputs can be validated, and how serious errors would be in practice. AIIRS uses a max-dominant classification model, ensuring that a single high-risk feature is not offset by lower-risk features elsewhere in the task. This supports proportionate risk management and prevents risk dilution.
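The max-dominant rule can be illustrated with a minimal Python sketch. For illustration only, it assumes that an assessor has already rated each of the three criteria on the same LOW/MEDIUM/HIGH scale. The names `Band`, `CriterionRatings`, `classify`, and `mean_band` are hypothetical and are not part of the AIIRS tool. The averaging function is included only for contrast, to show the dilution that the max-dominant model is meant to prevent.

```python
from dataclasses import dataclass
from enum import IntEnum


class Band(IntEnum):
    # Hypothetical ordinal encoding of the three AIIRS risk bands.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class CriterionRatings:
    # Assumption: each criterion has already been rated on the same band scale.
    epistemic_dependence: Band
    verifiability: Band
    consequences_of_error: Band


def classify(r: CriterionRatings) -> Band:
    """Max-dominant classification: the highest band on any criterion wins."""
    return max(r.epistemic_dependence, r.verifiability, r.consequences_of_error)


def mean_band(r: CriterionRatings) -> Band:
    """Contrast only: a rounded average, which lets low ratings offset a high one."""
    scores = [int(r.epistemic_dependence), int(r.verifiability), int(r.consequences_of_error)]
    return Band(round(sum(scores) / len(scores)))


if __name__ == "__main__":
    task = CriterionRatings(Band.LOW, Band.LOW, Band.HIGH)
    print("max-dominant:", classify(task).name)
    print("averaged:", mean_band(task).name)
```

When the ratings are LOW, LOW, and HIGH, the max-dominant rule returns HIGH. A rounded average of the same ratings returns MEDIUM, so two benign criteria mask the severe one.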

AIIRS is designed as an upstream classification layer within existing governance systems. It does not replace institutional policy, regulatory obligations, or professional judgement. Rather, classification outcomes are intended to be interpreted within established governance, policy, and quality assurance frameworks. In higher education contexts, AIIRS helps institutions meet regulatory expectations that they identify and manage risks to academic quality and integrity through informed judgement and established processes.

The tool is intentionally task-bounded and applies to human-directed use of GenAI. It does not assess autonomous or agentic AI systems and does not evaluate system accuracy, ethics, privacy, or compliance risks, which are addressed by complementary frameworks such as the S.E.C.U.R.E. GenAI Use Framework.

In practice, AIIRS enables organisations to distinguish between low-risk assistive uses, medium-risk uses requiring targeted safeguards, and high-risk uses requiring redesign, escalation, or exclusion. By providing a shared language and transparent method for classification, AIIRS strengthens accountability, supports proportionate controls, and enables trustworthy AI implementation aligned with the OECD AI Principles.
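The following Python sketch shows how a band could feed the risk-management options described above. The table paraphrases the three band descriptions. The names `Band`, `MANAGEMENT_OPTIONS`, and `management_options` are hypothetical and are not part of the tool. The lookup returns management options rather than an allow/deny decision, which is consistent with AIIRS being a risk classification rather than a permissibility framework.

```python
from enum import Enum


class Band(Enum):
    LOW = "LOW"
    MEDIUM = "MEDIUM"
    HIGH = "HIGH"


# Hypothetical lookup table paraphrasing the AIIRS band descriptions.
# It deliberately returns management options, not a permit/deny verdict.
MANAGEMENT_OPTIONS: dict[Band, tuple[str, ...]] = {
    # The description names no specific control for low-risk assistive uses;
    # they are still interpreted within existing governance processes.
    Band.LOW: (),
    Band.MEDIUM: ("targeted safeguards",),
    Band.HIGH: ("redesign", "escalation", "exclusion of AI use"),
}


def management_options(band: Band) -> tuple[str, ...]:
    """Return the risk-management options associated with a risk band."""
    return MANAGEMENT_OPTIONS[band]


if __name__ == "__main__":
    for band in Band:
        options = management_options(band)
        print(f"{band.value}: {', '.join(options) or 'no band-specific control'}")
```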

About the tool

Tags:

- ai risks

- ai risk management

- risk signals

- risk

- ai risk standard

- ai risk mitigation

- governance risk and compliance (grc) for ai

Partnership on AI