These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

AIRO (AI Risk Ontology)

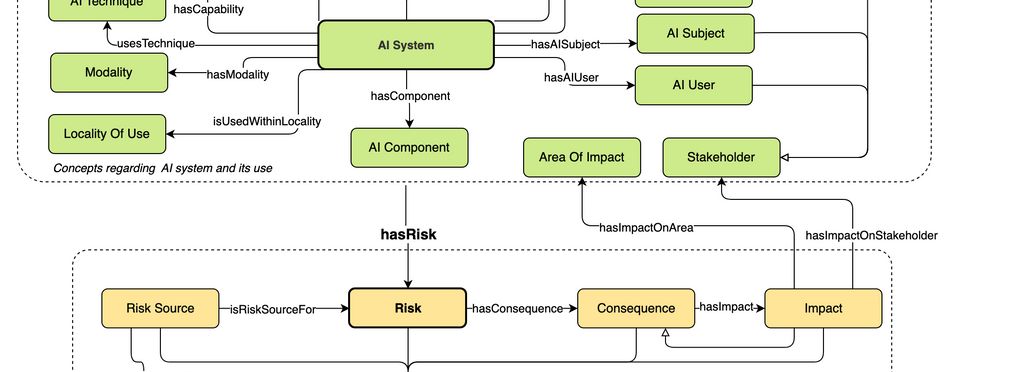

The AI Risk Ontology (AIRO) is an open-source formal ontology for expressing AI use cases and their risks in a FAIR (Findable, Accessible, Interoperable, and Reusable) manner. It provides a minimal set of concepts and relations for modelling AI use cases and their associated risks. It is based on the requirements of the EU AI Act and on international standards, including ISO/IEC 23894 on AI risk management and the ISO 31000 series of standards on risk management. AIRO helps stakeholders model and maintain information about AI use cases and their associated risks in an interoperable, standardised, and queryable format.

With AIRO, information about AI use cases and their risks is expressed and maintained using open data specifications. These specifications use interoperable, machine-readable formats to enable automation in information management, querying, and verification for self-assessment and third-party conformity assessments. They also allow automated AI risk management tools to import and export information that is meant to be shared with various stakeholders, including AI users, providers, and authorities.
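To make the idea of a queryable, machine-readable description concrete, the following minimal sketch stores a use case and one of its risks as subject-predicate-object triples and retrieves the risks with a simple pattern match. It uses only the Python standard library. The `example.org` namespaces and the term names (`AISystem`, `hasPurpose`, `hasRisk`, `hasConsequence`) are hypothetical placeholders chosen for illustration; the actual IRIs should be taken from the AIRO documentation.

```python
from typing import Iterable, Iterator, List, Optional, Set, Tuple

Triple = Tuple[str, str, str]

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
# Hypothetical namespaces and term names, for illustration only.
EX = "https://example.org/usecase#"
VOCAB = "https://example.org/risk-vocab#"

# A made-up use case described as a small set of triples.
graph: Set[Triple] = {
    (EX + "cv_screening", RDF_TYPE, VOCAB + "AISystem"),
    (EX + "cv_screening", VOCAB + "hasPurpose", EX + "candidate_ranking"),
    (EX + "cv_screening", VOCAB + "hasRisk", EX + "biased_ranking"),
    (EX + "biased_ranking", RDF_TYPE, VOCAB + "Risk"),
    (EX + "biased_ranking", VOCAB + "hasConsequence", EX + "unfair_rejection"),
}


def match(
    g: Iterable[Triple],
    s: Optional[str] = None,
    p: Optional[str] = None,
    o: Optional[str] = None,
) -> Iterator[Triple]:
    """Yield every triple that fits the pattern; None acts as a wildcard."""
    for triple in g:
        if s is not None and triple[0] != s:
            continue
        if p is not None and triple[1] != p:
            continue
        if o is not None and triple[2] != o:
            continue
        yield triple


def risks_of(g: Iterable[Triple], system: str) -> List[str]:
    """Return the sorted IRIs of all risks attached to the given system."""
    return sorted(obj for _, _, obj in match(g, s=system, p=VOCAB + "hasRisk"))


if __name__ == "__main__":
    for risk in risks_of(graph, EX + "cv_screening"):
        print(risk)
```

In practice an RDF library and a SPARQL query would replace the hand-written `match` function; the sketch only shows why a triple-based description is straightforward to query.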

Using AIRO to model AI use cases and AI incidents enables classification, collation, and comparison of AI risks and impacts over time. With regard to the AI Act, AIRO lays the foundation for developing RegTech tools that determine “prohibited” and “high-risk” AI systems, document risk management system information, create technical documentation, and report AI incidents.
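As a rough illustration of how a structured use-case description could feed a screening tool, the sketch below looks up a use case's domain and purpose in a rule table. Everything in it is an assumption made for illustration: the `UseCase` fields, the `screen` function, and above all the rule table, which is a made-up placeholder and not a statement of the AI Act's legal criteria.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class UseCase:
    """Hypothetical, simplified record of an AI use case."""
    name: str
    domain: str
    purpose: str


# Placeholder rule table keyed by (domain, purpose).
# NOT the AI Act's criteria; a real tool would encode the legal text.
REVIEW_RULES: Dict[Tuple[str, str], str] = {
    ("employment", "candidate_ranking"): "flag for high-risk review",
    ("education", "exam_scoring"): "flag for high-risk review",
}


def screen(use_case: UseCase) -> str:
    """Return the screening label for a use case, or a default if no rule fits."""
    return REVIEW_RULES.get((use_case.domain, use_case.purpose), "no rule matched")


if __name__ == "__main__":
    cases = [
        UseCase("cv_screening", "employment", "candidate_ranking"),
        UseCase("npc_chat", "gaming", "dialogue_generation"),
    ]
    for case in cases:
        print(f"{case.name}: {screen(case)}")
```

The point of the sketch is that once domain and purpose are recorded as explicit fields, a screening step reduces to a lookup or query rather than a manual reading of free-text documentation.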

Because AIRO is implemented as an open knowledge graph, it can be freely adopted and extended. AIRO is encoded in the Web Ontology Language (OWL 2) and documented in a human-readable format.
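OWL 2 ontologies are commonly exchanged as RDF, so data described with such an ontology can be written in a line-based syntax like N-Triples and shared between tools. The following sketch serialises IRI-only triples with the Python standard library. The `example.org` namespaces and the `AISystem` and `hasRisk` terms are hypothetical placeholders, not AIRO's actual IRIs, and the function does not handle literals or blank nodes.

```python
from typing import Iterable, Tuple

Triple = Tuple[str, str, str]

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
# Hypothetical namespaces and term names, for illustration only.
EX = "https://example.org/usecase#"
VOCAB = "https://example.org/risk-vocab#"


def to_ntriples(triples: Iterable[Triple]) -> str:
    """Serialise IRI-only triples as N-Triples, one statement per line.

    Sorting makes the output deterministic, which helps when diffing exports.
    """
    lines = [f"<{s}> <{p}> <{o}> ." for s, p, o in sorted(triples)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    data = [
        (EX + "cv_screening", VOCAB + "hasRisk", EX + "biased_ranking"),
        (EX + "cv_screening", RDF_TYPE, VOCAB + "AISystem"),
    ]
    print(to_ntriples(data), end="")
```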

About the tool

Tags:

- ai risks

- ontologies

- ai risk management

- eu ai act

- ai risk standard
