These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

Assessment framework for non-discriminatory AI systems

The assessment framework for non-discriminatory AI systems was developed in 2022 by Demos Helsinki, University of Turku and University of Tampere as part of the Avoiding AI Biases project for the Finnish Government's analysis, assessment and research activities. The framework helps to identify and manage risks of discrimination, especially in public sector AI systems, and to promote equality in the use of AI.

The framework is intended mainly for the public sector and civil servants, who can use it to assess possible biased and discriminatory effects of AI systems when planning, procuring or deploying them. Indirectly, it also serves as a tool for AI developers to assess their systems and processes, especially those intended for public use. The framework takes into account the Finnish Non-Discrimination Act, which obliges authorities, education providers and employers not only to prevent discrimination but also to promote equality.

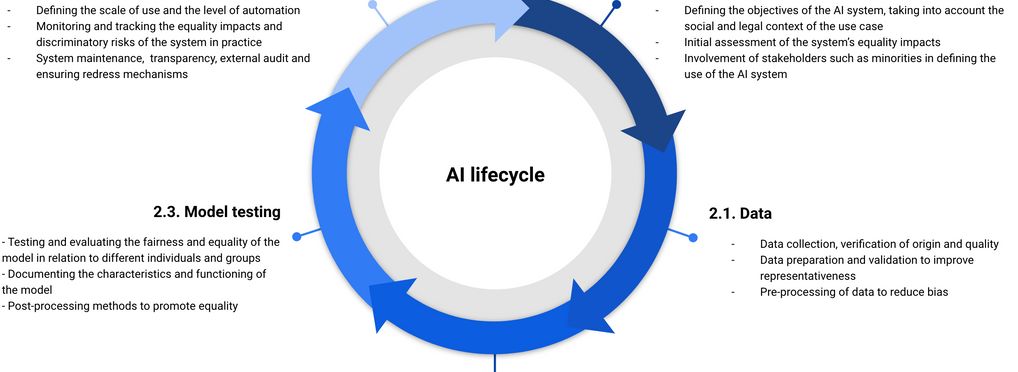

As per the lifecycle model, the assessment framework emphasises that the discriminatory impacts of AI systems can arise at different stages of development. As such, the framework serves as an algorithmic impact assessment process for addressing risks of discrimination and promoting equality throughout the lifecycle of an AI system:

1) Design, i.e. the initial assessment and definition of the AI system's objectives, motivations for use, necessity and equality impacts.

2) Development, covering three areas: data and its preparation, training of the algorithmic model and validation of the model.

3) Deployment, including issues such as human oversight, transparency and monitoring the system’s equality impacts in practice.

The assessment framework divides each stage of the AI lifecycle into questions that describe the risks of bias and discrimination. It acts as a self-assessment tool that gives a risk score based on the answers entered. Because the risks of discrimination are always contextual, we encourage users to tailor the assessment framework to specific use cases by modifying it.
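To illustrate how a questionnaire of this kind can turn answers into a risk score per lifecycle stage, here is a minimal sketch. It is not the framework's actual scoring method, which is not described here: the answer scale (`ANSWER_RISK`), the `Answer` type, the `stage_risk_scores` function and the example questions are all hypothetical, chosen only to show the general idea.

```python
from dataclasses import dataclass

# Hypothetical answer scale (assumption): higher value means higher risk.
ANSWER_RISK = {"yes": 0, "partly": 1, "no": 2}


@dataclass
class Answer:
    stage: str     # "design", "development" or "deployment"
    question: str  # illustrative question text, not taken from the framework
    response: str  # one of the keys in ANSWER_RISK


def stage_risk_scores(answers):
    """Return the mean risk per lifecycle stage, scaled to the range 0..1."""
    totals = {}
    counts = {}
    max_risk = max(ANSWER_RISK.values())
    for a in answers:
        totals[a.stage] = totals.get(a.stage, 0) + ANSWER_RISK[a.response]
        counts[a.stage] = counts.get(a.stage, 0) + 1
    # Divide by the maximum attainable total so stages are comparable.
    return {s: totals[s] / (counts[s] * max_risk) for s in totals}


# Made-up answers for illustration only.
answers = [
    Answer("design", "Is the necessity of the system documented?", "yes"),
    Answer("design", "Have equality impacts been assessed?", "no"),
    Answer("development", "Is the training data checked for representativeness?", "partly"),
    Answer("deployment", "Is human oversight in place?", "yes"),
]
scores = stage_risk_scores(answers)
```

Keeping the scores separate per stage, rather than collapsing them into one number, mirrors the lifecycle structure above and makes it easier to see where in the process the risks concentrate. Tailoring to a specific use case would then amount to editing the question list or the answer scale.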

The framework is available both in Excel and PDF format.

Partnership on AI

Partnership on AI