These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

Control Audits AI Governance, Risk & Assurance Platform

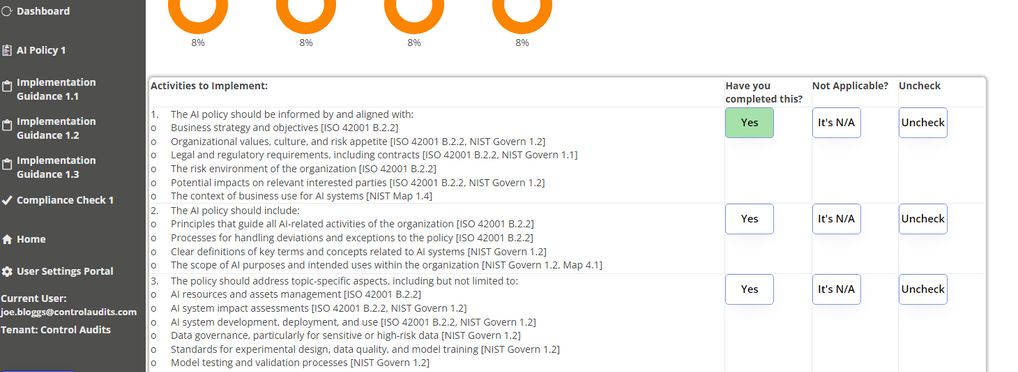

The Control Audits AI Governance, Risk and Assurance Platform helps organizations implement, manage, and monitor AI governance practices throughout the AI lifecycle. It integrates key elements of ISO/IEC 42001 and the NIST AI Risk Management Framework, and aligns with AI assurance guidance from the EU, UK, USA and Australia, giving organizations a standards-based approach to AI governance and assurance.

The platform offers:

- AI policy management tools

- Risk assessment and mitigation capabilities

- Compliance tracking and reporting

- Audit trail functionality

- Integration with existing enterprise systems

- AI assurance techniques as outlined in EU, UK, USA and Australian AI guidance

About the tool

Tags:

- ai risks

- data governance

- ai assessment

- ai governance

- ai auditing

- ai risk management

- ai compliance

- ai security

- data assurance

- auditing

- ai

- risk

- 42001

- rmf

Partnership on AI
