Framework for Ethical AI Governance

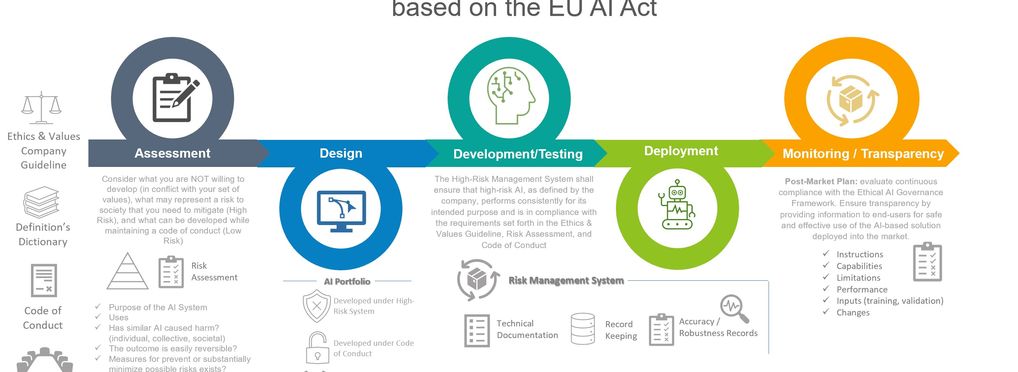

Ethical AI Governance Framework: a self-regulation model for companies to prevent societal harms, based on the EU AI Act

Balancing innovation in AI development with ethical and societal considerations raises a classic chicken-and-egg dilemma: do we need legal enforcement, in the form of obligations, requirements, and metrics, to obtain transparent, explainable, and accountable AI-based solutions? Or can explainable AI emerge as an outcome of companies' own self-regulation initiatives?

The framework proposes a self-regulatory approach that incorporates specific tools (or instruments) to support ethical and responsible AI development, drawing on insights from the EU Regulation on Artificial Intelligence (AI Act).

Tags:

- ai ethics

- ai risks

- building trust with ai

- trustworthy ai

- ai assessment

- ai governance

- ai auditing

- fairness

- ai risk management

Partnership on AI