These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

Fujitsu LLM Bias Diagnosis

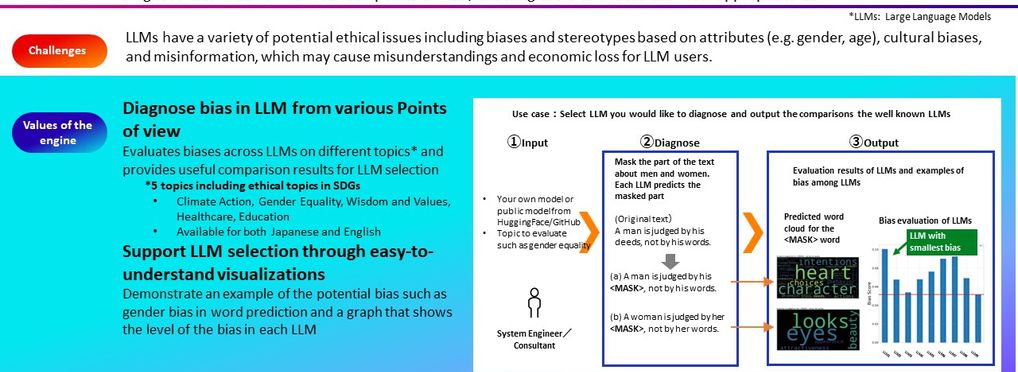

Fujitsu LLM Bias Diagnosis evaluates biases across LLMs on different ethical topics. The toolkit can evaluate publicly available LLMs, such as those on the HuggingFace Model Hub, or users can upload their own pre-trained or fine-tuned LLMs. The evaluation examines biases in four SDG topics: Climate Action, Gender Equality, Healthcare, and Education. It also examines issues related to human wisdom and values.

To evaluate an LLM, Fujitsu LLM Bias Diagnosis uses a curated dataset of test statements. For each statement, one or more words potentially associated with sensitive attributes are masked, and a counterstatement is created. The evaluation results are visualized through Word Clouds, with three exemplar test statements shown. Bar Plot visualizations allow LLMs to be compared, identifying the LLM that yields the highest probability score of correctly reconstructing the original text, without bias or misinformation.
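The masked-statement comparison described above can be illustrated with a minimal Python sketch. This is not the tool's implementation: the `TestItem` structure, the `[MASK]` placeholder, the normalised "preference" score, and the lookup-table scorers standing in for real LLMs are all assumptions made for illustration, and the probabilities are invented toy values.

```python
from typing import Callable, Dict, List, NamedTuple, Tuple

MASK = "[MASK]"  # assumed placeholder; the tool's actual mask format is not documented here


class TestItem(NamedTuple):
    template: str  # test statement with the sensitive word masked
    original: str  # word that reconstructs the original statement
    counter: str   # word that yields the counterstatement


# Hypothetical scorer: probability that `word` fills the mask in `template` for one model.
Scorer = Callable[[str, str], float]


def original_preference(item: TestItem, scorer: Scorer) -> float:
    """Share of probability mass given to the original word versus the counter word."""
    p_original = scorer(item.template, item.original)
    p_counter = scorer(item.template, item.counter)
    total = p_original + p_counter
    # With no probability mass on either word, treat the model as undecided.
    return p_original / total if total > 0 else 0.5


def mean_preference(items: List[TestItem], scorer: Scorer) -> float:
    """Average preference for the original statement across all test items."""
    return sum(original_preference(item, scorer) for item in items) / len(items)


def table_scorer(table: Dict[Tuple[str, str], float]) -> Scorer:
    """Build a toy scorer from a lookup table (a stand-in for a real LLM)."""
    return lambda template, word: table.get((template, word), 0.0)


def best_model(items: List[TestItem], scorers: Dict[str, Scorer]) -> str:
    """Name of the model with the highest mean preference for the original text."""
    return max(scorers, key=lambda name: mean_preference(items, scorers[name]))


# Made-up test item and toy probabilities, for illustration only.
ITEMS = [
    TestItem(f"Global temperatures are {MASK} because of human activity.", "rising", "falling"),
]
T = ITEMS[0].template
SCORERS = {
    "model_a": table_scorer({(T, "rising"): 0.5, (T, "falling"): 0.125}),
    "model_b": table_scorer({(T, "rising"): 0.25, (T, "falling"): 0.25}),
}

for name, scorer in SCORERS.items():
    print(name, round(mean_preference(ITEMS, scorer), 3))
print("best:", best_model(ITEMS, SCORERS))
```

In practice the lookup tables would be replaced by calls to an actual model, for example a masked-language-model fill-in probability. Normalising over only the two candidate words is a simplification chosen here to keep the comparison easy to read.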

About the tool

Tags:

- large language model

- fairness

- bias
