These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

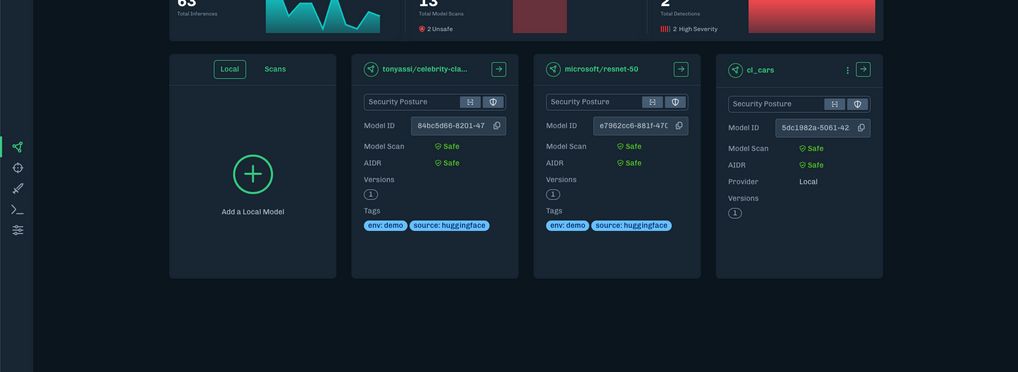

HiddenLayer AISec Platform

HiddenLayer’s AISec Platform is a GenAI Protection Suite purpose-built to ensure the integrity of AI models throughout the MLOps pipeline. The platform provides detection and response for GenAI and traditional AI models to detect prompt injections, adversarial AI attacks, and digital supply chain vulnerabilities. The AISec Platform delivers an automated and scalable defense tailored for GenAI, enabling fast deployment and proactive responses to attacks without necessitating access to private data or models.

Comprehensive AI Security & Threat Protection

HiddenLayer’s AISec Platform is a purpose-built Protection Suite that secures AI models across the MLOps pipeline. It proactively detects and mitigates risks from adversarial AI attacks, prompt injection, IP theft, PII leakage, and supply chain vulnerabilities—ensuring AI integrity without accessing private data or models.

Key Capabilities:

- AI Threat Detection & Malware Analysis: scans AI models for malicious code, providing early warnings against malware, adversarial attacks, and supply chain threats.

- Vulnerability Assessment & Continuous Monitoring: identifies known CVEs, zero-day vulnerabilities, and system weaknesses with scheduled and on-demand scans.

- Model Integrity & Tampering Protection: analyses model layers, components, and tensors to detect unauthorised changes while maintaining a secure baseline.

- Adversarial Attack Detection & Mitigation: defends against data poisoning, model injection, and extraction/theft using behavioral analysis, static analysis, and machine learning.

- Prompt Injection & PII Leakage Prevention: prevents unauthorized prompt manipulation and unintended data exposure, ensuring models remain secure.

- Security Framework Integration & Unified Reporting: aligns with MITRE ATLAS and OWASP LLM frameworks to map over 64 adversarial AI tactics, providing actionable insights for red and blue teams.

Key Benefits

✔ Malware Protection – Detects embedded malicious code that could compromise AI models.

✔ Model Integrity Assurance – Monitors model structures for tampering or corruption.

✔ Prompt Injection Prevention – Shields LLMs from malicious input manipulation.

✔ IP & Model Theft Protection – Stops inference attacks that could steal proprietary AI models.

✔ Excessive Agency Mitigation – Prevents AI from exposing backend systems to privilege escalation or remote code execution risks.

Securing AI through its lifecycle

- Automate & Scale AI Security – Deploy real-time protection across training, build, and production environments.

- Identify Hidden AI Risks – Detect malware, vulnerabilities, and integrity threats with continuous monitoring.

- Simulate & Defend Against AI Attacks – Run expert attack simulations to uncover security gaps and maintain compliance.

About the tool

You can click on the links to see the associated tools

Developing organisation(s):

Objective(s):

Purpose(s):

Country/Territory of origin:

Lifecycle stage(s):

Type of approach:

Maturity:

Usage rights:

Target groups:

Target users:

Geographical scope:

Tags:

- ai security

- adversarial ai

- red-teaming

- llm

- gen ai security

Use Cases

Would you like to submit a use case for this tool?

If you have used this tool, we would love to know more about your experience.

Add use case

Partnership on AI

Partnership on AI