These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

PAM by Palqee

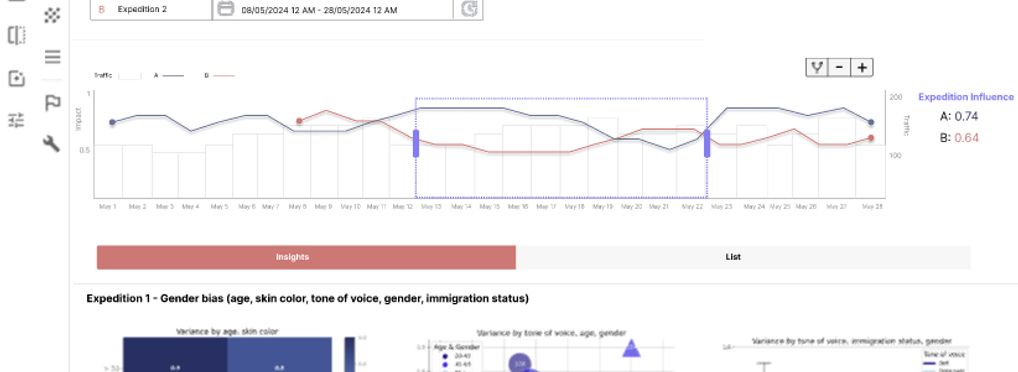

Palqee's PAM sets a new standard in AI ethics with its ability to analyse and understand the nuanced context of AI-human interactions at scale, addressing the industry's 'black-box' dilemma. Unlike traditional statistical approaches, PAM adds a societal lens to AI bias detection, offering insights into often-overlooked factors, such as geographical and demographic prejudices, that influence AI decision-making.

A classic example is the ZIP code. Imagine an AI that assesses loan applications and uses ZIP codes in its decision-making process. It might inadvertently perpetuate geographical bias and redlining by disadvantaging applicants from areas historically deemed "high risk" or undesirable on the basis of racial or economic factors, regardless of individual creditworthiness.
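To make the ZIP code example concrete, the sketch below compares loan approval rates across ZIP codes and computes a simple disparate impact ratio. It is an illustration only and not PAM's method: the function names, the sample decisions, and the 0.8 threshold (the commonly cited "four-fifths" rule of thumb) are all assumptions introduced here.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per ZIP code from (zip_code, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for zip_code, was_approved in decisions:
        totals[zip_code] += 1
        if was_approved:
            approved[zip_code] += 1
    return {z: approved[z] / totals[z] for z in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group approval rate (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals in any group, so no group is favoured
    return min(rates.values()) / highest


# Hypothetical loan decisions: (ZIP code, approved?). Not real data.
decisions = [
    ("10001", True), ("10001", True), ("10001", True), ("10001", False),
    ("10002", True), ("10002", False), ("10002", False), ("10002", False),
]

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: flag for human review when the ratio falls below 0.8.
flagged = ratio < 0.8
print(rates, round(ratio, 2), flagged)
```

A low ratio does not prove discrimination by itself. It only signals that outcomes differ by area and that the role of ZIP code in the decision deserves review by a human expert.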

According to a recent study by PagerDuty, 42% of IT leaders express concerns over AI ethics, and 26% over the inherent societal biases of training data. Currently, the only available way to assess contextual biases within AI systems is human oversight. While human oversight is crucial in an AI's development lifecycle, sifting through thousands of logs at deployment to decipher the subtle variables that could skew AI outputs and adversely affect specific groups is resource-intensive, impractically expensive and time-consuming.

PAM acts as an ethics assistant for AI systems. Mirroring the human ability to add context to data analysis, PAM alerts users through its bias trend alerting system and provides insights into which variables influence an AI's output. While it doesn't replace the critical role of human oversight, PAM enhances it by facilitating large-scale bias monitoring. This enables experts to gain valuable insights into an AI system's integrity and helps them maintain its fairness and reliability over time.

Learn more at palqee.com

About the tool

Tags:

- ai ethics

- ai assessment

- machine learning testing

- fairness

- auditability

- ai compliance

- explainability

Partnership on AI
