These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

Resaro AI Solutions Quality Index Engineer (ASQI Engineer)

AI Solutions Quality Index Engineer (ASQI Engineer) is an open-source framework for testing and assuring AI systems. Built for scale and reliability, it uses containerised test packages, automated assessments, and repeatable workflows to make evaluation transparent and robust. With ASQI Engineer, organisations can also run AI Solutions Quality Indexes that they have created themselves, giving teams full control over, and confidence in, AI quality.

ASQI Engineer is in active development, and contributions are welcome: testing new packages, sharing score cards and test plans, and helping to define common schemas that meet industry needs. The initial release focuses on comprehensive chatbot testing, with extensible foundations for broader AI system evaluation.

Key features:

1. Durable Execution: DBOS-powered fault tolerance with automatic retry and recovery for reliable test execution.

2. Container Isolation: Reproducible testing in isolated Docker environments with consistent, repeatable results.

3. Multi-System Orchestration: Coordinate target, simulator, and evaluator systems in complex testing workflows.

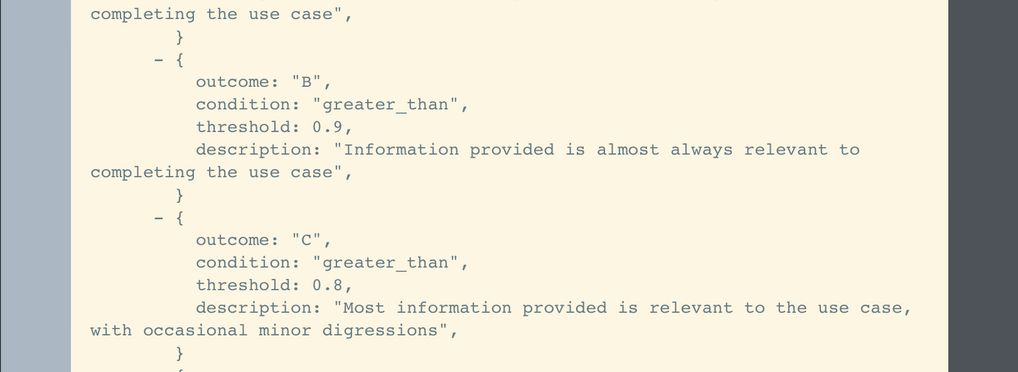

4. Flexible Assessment: Configurable score cards map technical metrics to business-relevant outcomes.

5. Type-Safe Configuration: Pydantic schemas with JSON Schema generation provide IDE integration and validation.

6. Modular Workflows: Separate validation, test execution, and evaluation phases for flexible CI/CD integration.
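
To illustrate the "Flexible Assessment" feature, here is a minimal sketch of how a score card could map a technical metric to a business-relevant outcome. It is not ASQI Engineer's actual schema or API: the `Band` class, the `grade` function, the metric name, and the thresholds are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Band:
    """One score card band: a minimum metric value and the outcome it maps to."""
    minimum: float
    outcome: str


# Hypothetical bands for an "answer accuracy" metric in the range 0.0 to 1.0.
ANSWER_ACCURACY_BANDS = [
    Band(0.90, "ready for production"),
    Band(0.70, "needs review"),
    Band(0.00, "not acceptable"),
]


def grade(metric_value: float, bands: list[Band]) -> str:
    """Return the outcome of the highest band whose minimum the metric meets."""
    # Check bands from strictest to most lenient so the best match wins.
    for band in sorted(bands, key=lambda b: b.minimum, reverse=True):
        if metric_value >= band.minimum:
            return band.outcome
    raise ValueError(f"no band covers metric value {metric_value}")


if __name__ == "__main__":
    print(grade(0.82, ANSWER_ACCURACY_BANDS))
```

Keeping the thresholds in data rather than in code means that, under this assumed design, the same test results could be re-graded against a different score card without re-running the tests.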

About the tool

Tags:

- open source

- machine learning testing

- benchmarking

- llm redteaming

- quality measurement
