These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

SAIMPLE

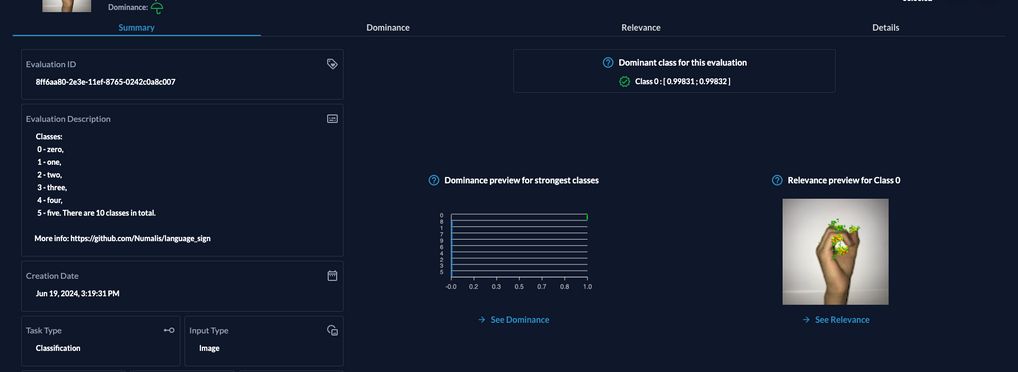

SAIMPLE® is developed by Numalis, a deep-tech company that helps critical industries adopt artificial intelligence with confidence by providing state-of-the-art methods and tools for developing trustworthy AI systems through robustness validation and explainable decision-making processes. SAIMPLE is also referenced in the CONFIANCE.AI catalog of tools for the development of trustworthy AI systems.

SAIMPLE® is a static analyzer based on abstract interpretation, designed specifically for AI algorithm validation. It leverages state-of-the-art techniques described in the ISO/IEC 24029-2:2023 standard to assess and validate, using formal methods, the robustness of machine learning (ML) models against real-world perturbations within their domain of use.
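
To give a rough idea of what robustness validation by abstract interpretation can look like, the sketch below propagates an interval (box) around an input through a tiny ReLU network and checks whether the predicted class can change. It is only an illustration under stated assumptions: the interval domain is the simplest abstract domain, the function names (`affine_bounds`, `relu_bounds`, `certify`) and the toy weights are hypothetical, and none of this reflects SAIMPLE's actual API or internal analysis.

```python
import numpy as np

def affine_bounds(lo, hi, W, b):
    """Propagate the box [lo, hi] through x -> W @ x + b with interval arithmetic."""
    W_pos = np.maximum(W, 0.0)  # positive weights keep the bound orientation
    W_neg = np.minimum(W, 0.0)  # negative weights swap lower and upper bounds
    new_lo = W_pos @ lo + W_neg @ hi + b
    new_hi = W_pos @ hi + W_neg @ lo + b
    return new_lo, new_hi

def relu_bounds(lo, hi):
    """ReLU is monotone, so it can be applied to each bound separately."""
    return np.maximum(lo, 0.0), np.maximum(hi, 0.0)

def certify(x, eps, layers, label):
    """Return True if every input within an L-infinity ball of radius eps
    around x is provably classified as `label`.

    False only means the property could not be proven with this
    (over-approximating) domain, not that a counterexample exists.
    """
    lo, hi = x - eps, x + eps
    for i, (W, b) in enumerate(layers):
        lo, hi = affine_bounds(lo, hi, W, b)
        if i < len(layers) - 1:  # no activation after the output layer
            lo, hi = relu_bounds(lo, hi)
    # Robust if the worst case for `label` still beats the best case of the others.
    other_hi = np.delete(hi, label)
    return bool(lo[label] > other_hi.max())

# Hypothetical toy network: 2 inputs, 2 hidden ReLU units, 2 output classes.
layers = [
    (np.array([[1.0, -1.0], [-1.0, 1.0]]), np.zeros(2)),
    (np.eye(2), np.zeros(2)),
]
x = np.array([1.0, 0.0])

small = certify(x, 0.1, layers, label=0)  # small perturbation budget
large = certify(x, 0.6, layers, label=0)  # larger perturbation budget
print(small, large)
```

With these toy weights the small perturbation budget is certified while the larger one is not. A production analyzer would typically rely on more precise abstract domains than plain intervals to reduce over-approximation.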

Additionally, it delivers human-understandable visualizations of model decisions across the input space, from which relevant explainability components can be extracted.

About the tool

Developing organisation(s):

Tool type(s):

Objective(s):

Impacted stakeholders:

Target sector(s):

Country/Territory of origin:

Lifecycle stage(s):

Type of approach:

Usage rights:

License:

Target groups:

Target users:

Validity:

Enforcement:

Geographical scope:

People involved:

Required skills:

Technology platforms:

Tags:

- trustworthy ai

- validation of ai model

- ai auditing

- machine learning testing

- ai compliance

- model validation

- ai vulnerabilities

- validation

- robustness

- explainability

- adversarial ai

Use Cases

Partnership on AI