These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

The Citrusx Platform

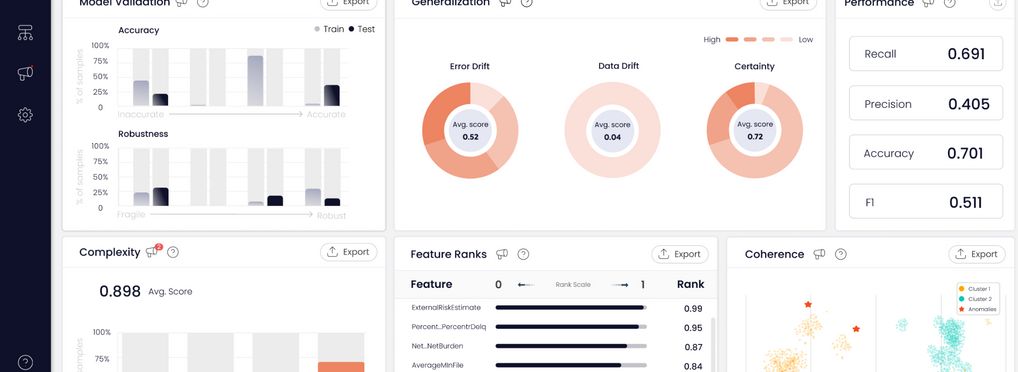

Citrusx is an end-to-end platform for AI transparency and explainability, trusted by publicly listed companies in regulated industries. Through continuous, actionable validation, governance, and monitoring, organizations can maintain confidence in their models. The holistic solution keeps all stakeholders informed, supporting better decisions and outcomes while helping organizations comply with regulations.

About the tool

Tool type(s):

Objective(s):

Impacted stakeholders:

Purpose(s):

Target sector(s):

Country/Territory of origin:

Lifecycle stage(s):

Type of approach:

Maturity:

Usage rights:

Target groups:

Target users:

Stakeholder group:

Validity:

Enforcement:

Geographical scope:

People involved:

Required skills:

Technology platforms:

Tags:

- ai ethics

- ai responsible

- ai risks

- build trust

- building trust with ai

- data documentation

- data governance

- demonstrating trustworthy ai

- documentation

- evaluation

- transparent

- trustworthy ai

- validation of ai model

- ai governance

- machine learning testing

- fairness

- bias

- transparency

- trustworthiness

- ai risk management

- ai compliance

- model validation

- reporting

- model monitoring

- mlops

- ai quality

- ai vulnerabilities

- performance

- regulation compliance

- accountability

- ml security

- validation

- robustness

- explainability

- ethical risk

- ai policy

- model risk management

- model governance

- bias mitigation

Partnership on AI