These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

trail - AI Governance Copilot

Leverage AI responsibly:

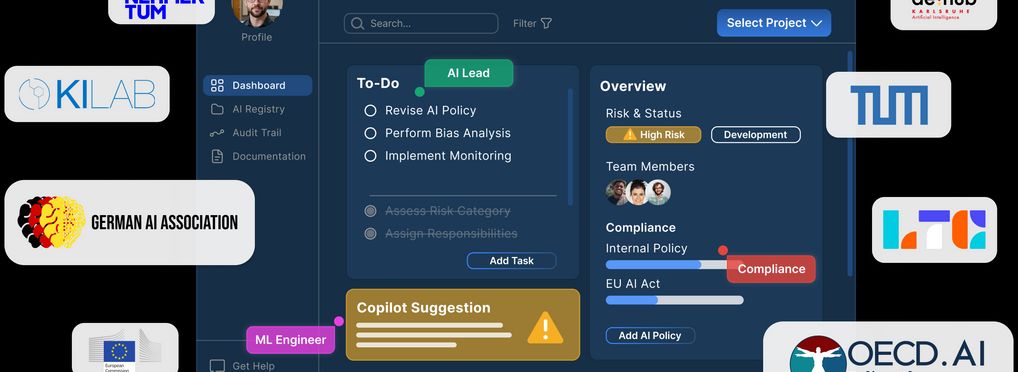

trail is a developer-friendly AI governance platform that helps companies build, deploy and scale AI with trust and efficiency. It is a simple way for technical and compliance stakeholders to ensure quality, transparency and regulatory readiness without additional manual overhead.

Through an intuitive web interface and an integration that takes a few lines of code, trail adapts to existing developer environments and governance workflows to become your copilot.

What trail does:

In a nutshell, trail tells you who should do what, when and how, so you can gain control and achieve compliance without slowing down.

trail helps you identify all of your requirements, whether they come from regulation (e.g. the EU AI Act), policies, standards (e.g. ISO/IEC 42001) or dedicated governance frameworks, and structure and centralize your information and AI use cases.

By combining your individual needs with a state-of-the-art governance framework, trail guides you through the actionable steps to govern AI effectively.

It lets you integrate AI governance into your existing workflows and focus on the tasks that need human attention from the right person at the right time, while trail takes care of the rest through smart automation.

trail's AI registry, risk management and audit trail enable trust through transparency, risk control and standardized development processes, in one central hub for all AI projects from design (or procurement) to deployment. This improves the quality of AI systems and facilitates team collaboration.

The copilot boosts efficiency and saves time by orchestrating governance workflows and automating manual tasks, such as writing AI policies and documentation for reporting or regulatory compliance. trail combines speed and governance for lasting AI success.

Additionally, trail collaborates with renowned partners to help you obtain AI certification.

About the tool

Tags:

- ai ethics

- ai responsible

- biases testing

- collaborative governance

- data documentation

- demonstrating trustworthy ai

- documentation

- ethical charter

- trustworthy ai

- ai governance

- ai auditing

- fairness

- transparency

- auditability

- ai risk management

- ai compliance

- ai register

- regulation compliance

- auditing
