These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

TrustWorks - AI Governance module

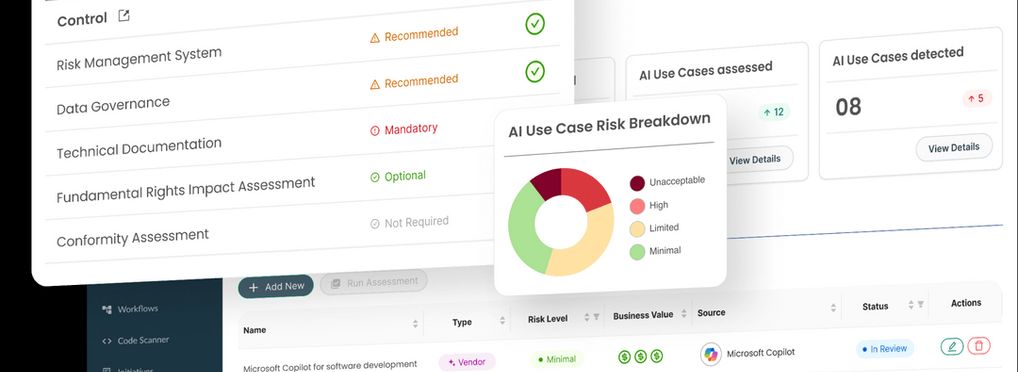

The TrustWorks AI Governance module aims to help organisations achieve regulatory compliance with the EU AI Act and the coming wave of AI regulations.

With it, any organisation can streamline the registration and classification of its AI systems (including Shadow AI) and implement continuous AI risk assessment and mitigation measures. The tool also helps organisations meet, and go beyond, transparency and reporting requirements and adhere to the latest governance frameworks.

TrustWorks uses intelligent integrations to automatically detect AI capabilities across three key dimensions: third-party vendors, internal tools (through source code analysis) and ongoing projects.

- Instant map of AI use cases: Identify and document all AI usage (systems, machine learning models and vendors) across the organisation in real time, meeting and going beyond transparency requirements.

- AI risk classification: Classify AI systems according to the risk-based framework of the AI Act to understand which regulatory requirements apply.

- Streamlined assessments: Assess the conformity of high-risk AI systems and meet reporting and compliance requirements using purpose-designed AI Act templates.

- AI risk and incident management: Implement continuous risk assessment and mitigation to safeguard AI systems. Ensure safety, compliance, risk management and monitoring across all risk categories, with a focus on high-risk AI.

- AI adoption and audit log: Assess and track the implementation of AI use cases, prioritising those with the highest business value. Build audit workflows and checklists with an easy-to-use drag-and-drop builder.


Partnership on AI
