These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

Z-Inspection

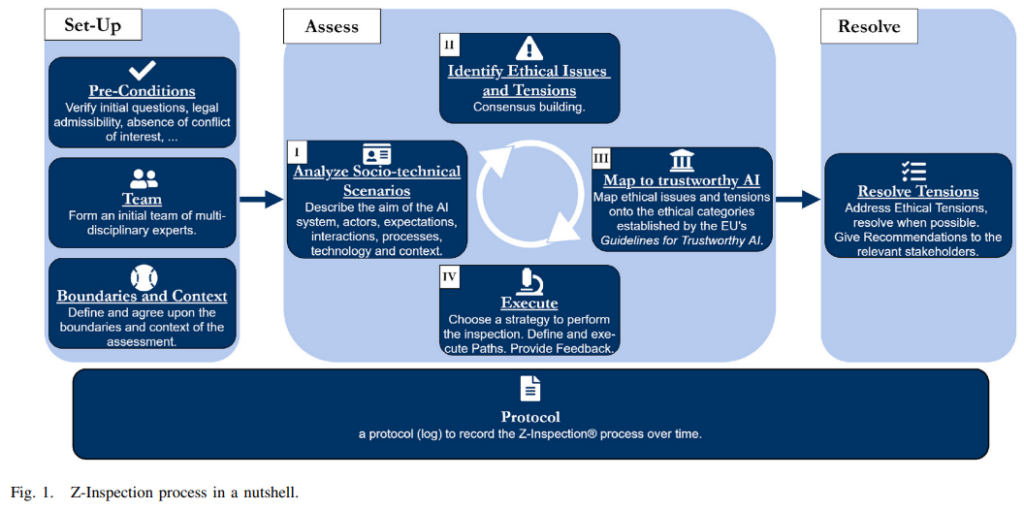

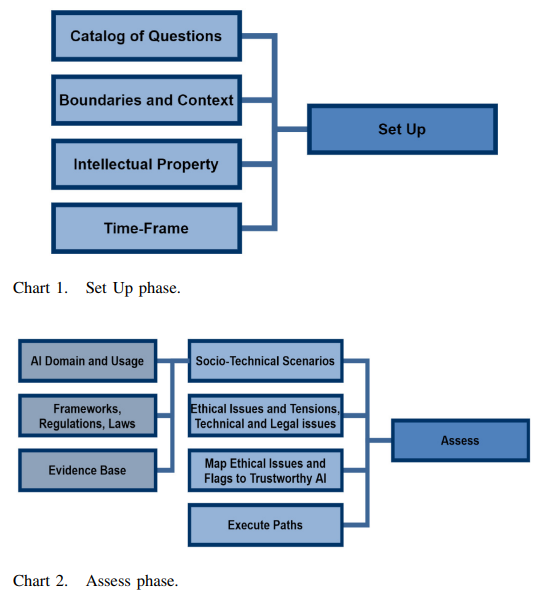

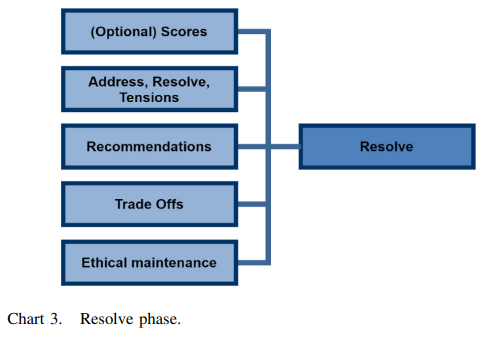

Z-Inspection® is a general inspection process for Ethical AI that can be applied to a variety of domains, including business, healthcare, and the public sector, among many others.

It uses applied ethics to assess Trustworthy AI in practice.

The Z-Inspection® process has the potential to play a key role in the context of the new EU Artificial Intelligence (AI) regulation.

The work is distributed under the terms and conditions of the Creative Commons Attribution-NonCommercial-ShareAlike (CC BY-NC-SA) license.

About the tool


Developing organisation(s):

Tool type(s):

Objective(s):

Impacted stakeholders:

Target sector(s):

Type of approach:

Maturity:

Usage rights:

Target groups:

Target users:

Validity:

Benefits:

Geographical scope:

People involved:

Technology platforms:

Use Cases

Assessing the trustworthiness of the use of machine learning as a supportive tool to recognize cardiac arrest in emergency calls

Assessing the ethical, technical and legal implications of using Deep Learning in the context of skin tumour classification

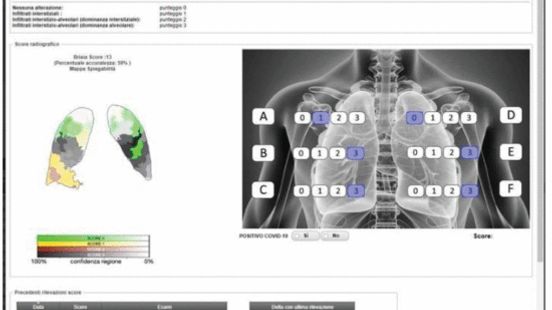

Assessing Trustworthy AI in times of COVID-19. Deep Learning for predicting a multi-regional score conveying the degree of lung compromise in COVID-19 patients

Assessment for Responsible Artificial Intelligence


Partnership on AI
