The information displayed in the AIM should not be reported as representing the official views of the OECD or of its member countries.

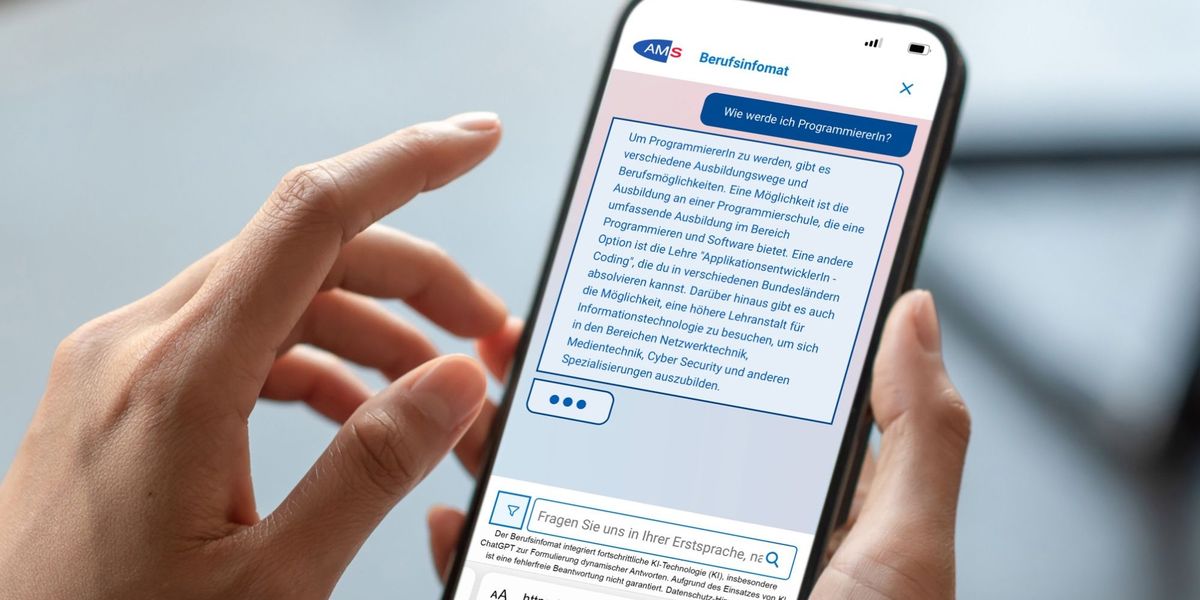

Berufsinfomat, the ChatGPT-powered chatbot of the Austrian Public Employment Service (AMS), has been criticized for reproducing gender stereotypes by recommending science programs to men and humanities programs to women. Developed by AMS and the AI firm Goodguys to assist job seekers, the tool has drawn user complaints and prompted efforts to correct its discriminatory outputs. [AI generated]