The information displayed in the AIM should not be reported as representing the official views of the OECD or of its member countries.

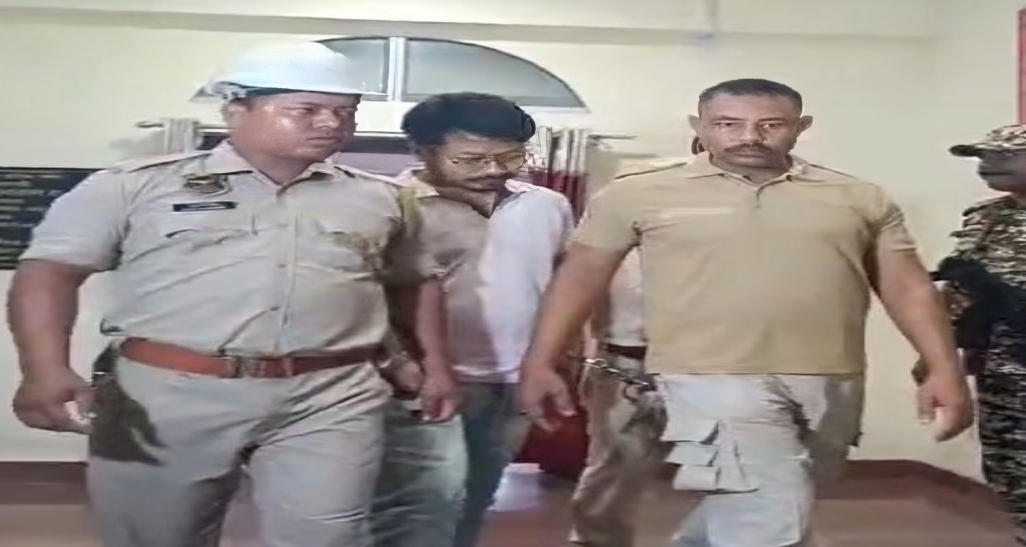

Pratim Bora, the ex-boyfriend of Assam influencer Archita Phukan, was arrested for using AI platforms to create fake, explicit images and videos of her and circulate them online. The AI-generated content falsely linked Phukan to the adult industry, causing reputational harm and public outrage before police intervention exposed the fabrication. [AI generated]