The information displayed in the AIM should not be reported as representing the official views of the OECD or of its member countries.

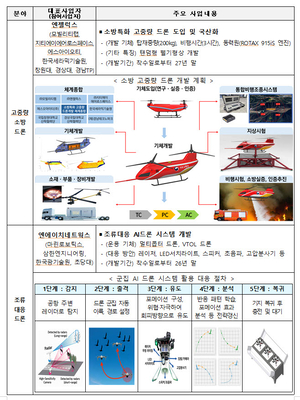

South Korea's Ministry of Land, Infrastructure and Transport has selected consortia to develop AI-based drones for wildfire suppression and for bird detection at airports. The projects aim to improve disaster response and aviation safety. Because the systems are still in development, no AI-related incidents or harm have occurred yet.[AI generated]