The information displayed in the AIM should not be reported as representing the official views of the OECD or of its member countries.

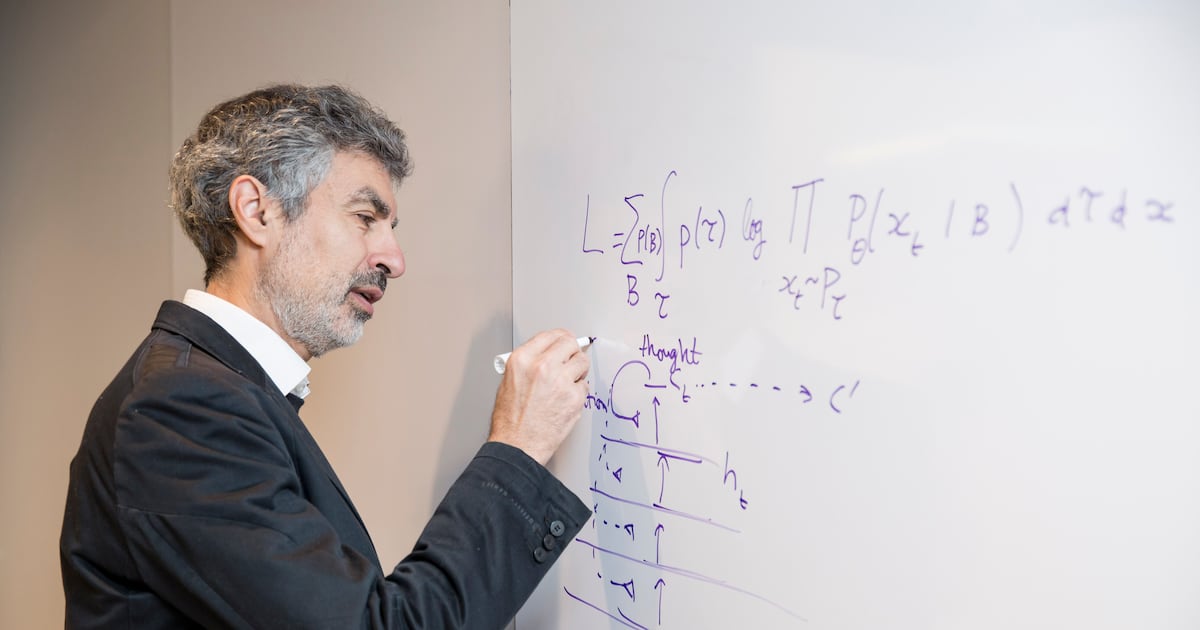

Yoshua Bengio, a leading AI researcher and 'godfather of AI,' has warned that rapid development of hyperintelligent AI systems with self-preservation goals could pose an existential threat to humanity. He urges prioritizing AI safety and governance to prevent potential catastrophic outcomes, including human extinction.[AI generated]