The information displayed in the AIM should not be reported as representing the official views of the OECD or of its member countries.

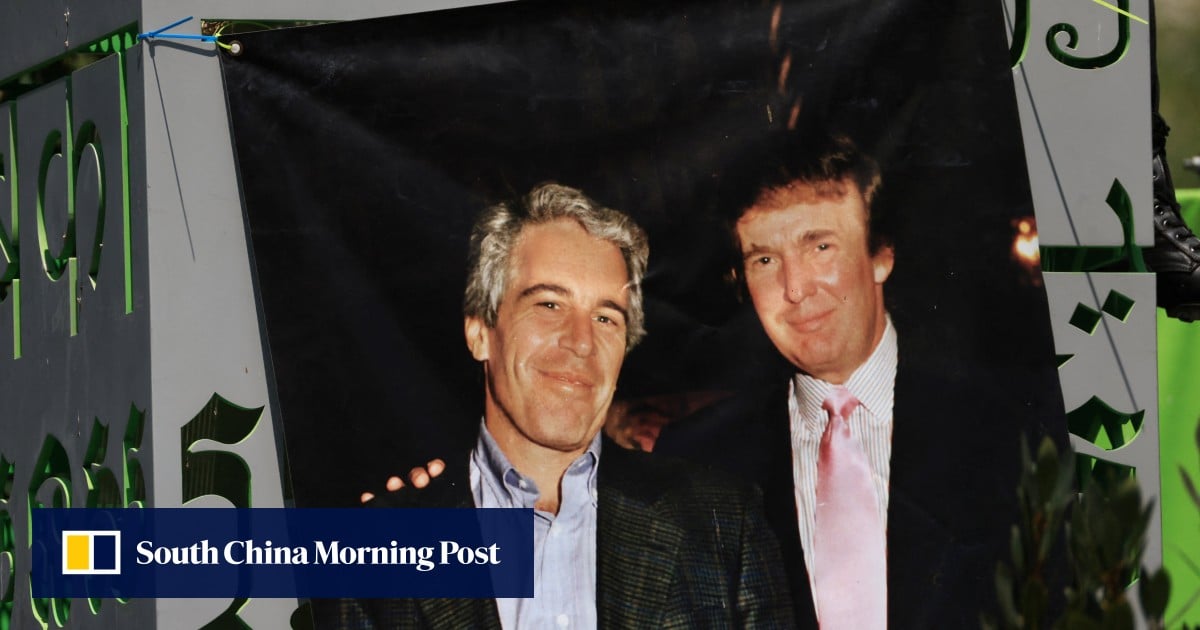

An AI-generated audio clip falsely depicting Donald Trump demanding that officials block the release of Epstein-related documents went viral on social media, amassing millions of views. Disinformation watchdogs confirmed the audio was fake, highlighting the growing use of AI deepfakes to spread political misinformation and cause public confusion in the United States. [AI generated]

Why is our monitor labelling this an incident or hazard?

An AI system was explicitly used to generate a fake audio clip that was widely disseminated, leading to misinformation and disinformation. This misinformation can harm communities by creating confusion, polarizing public opinion, and undermining democratic processes. Because the AI-generated content directly led to the spread of false narratives and public confusion, this qualifies as an AI Incident under the definition of harm to communities. The event is an actual occurrence of harm caused by AI-generated disinformation, not merely a potential risk. [AI generated]