The information displayed in the AIM should not be reported as representing the official views of the OECD or of its member countries.

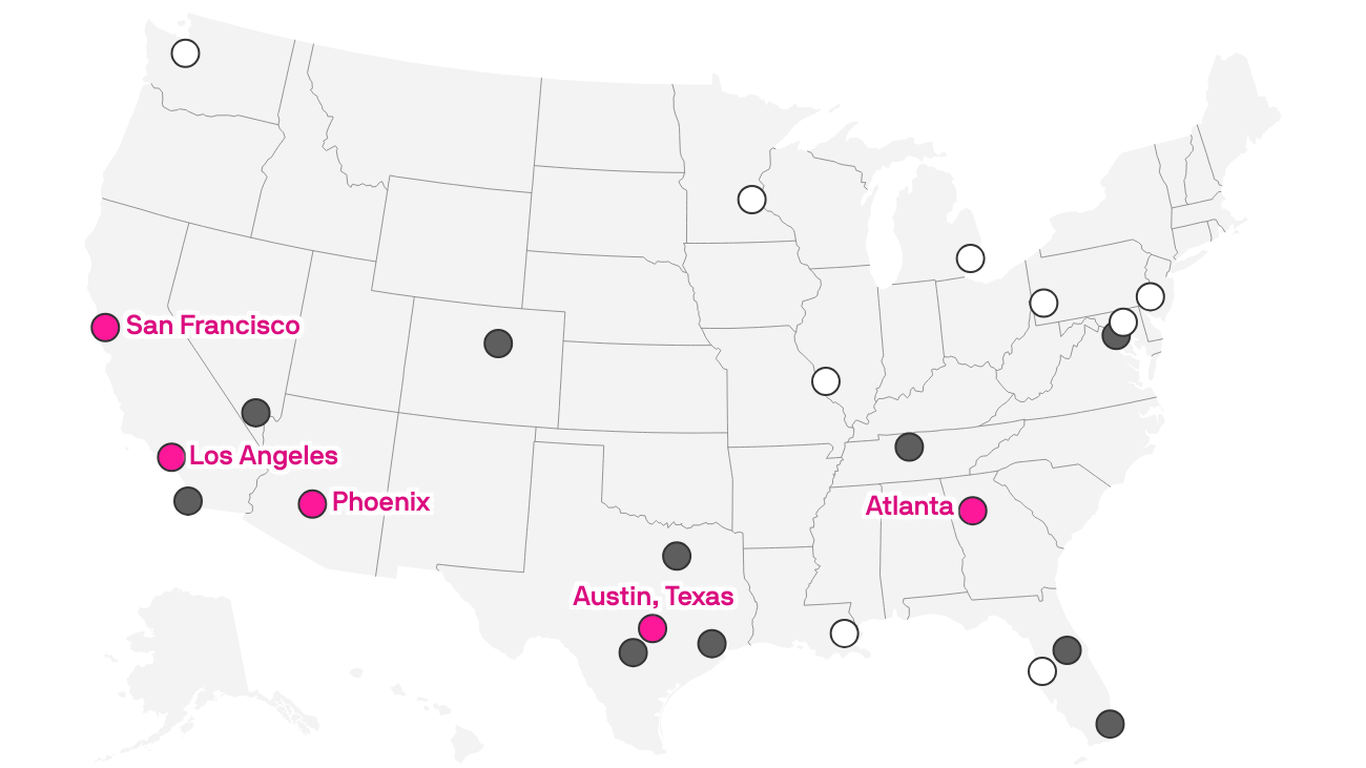

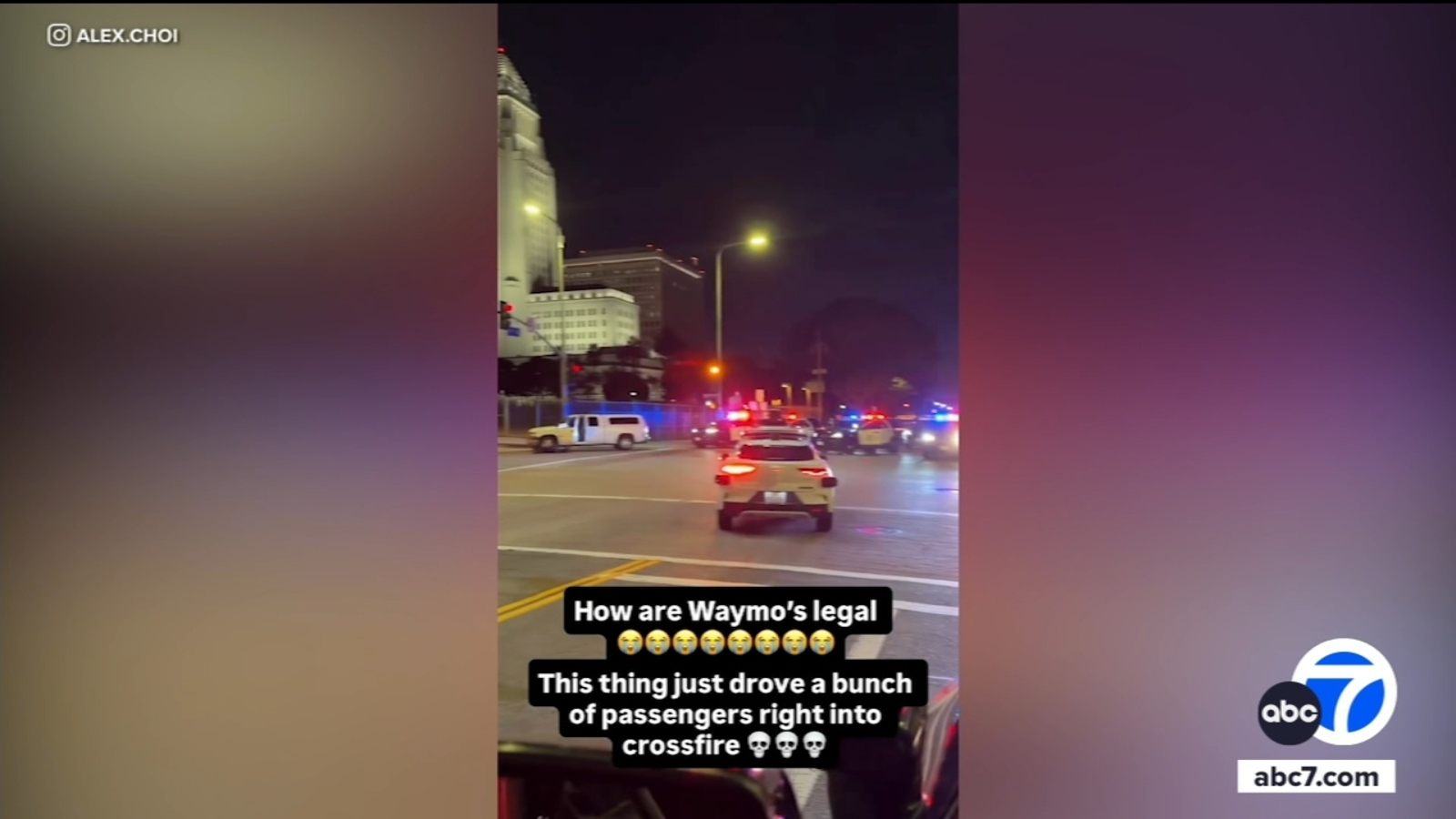

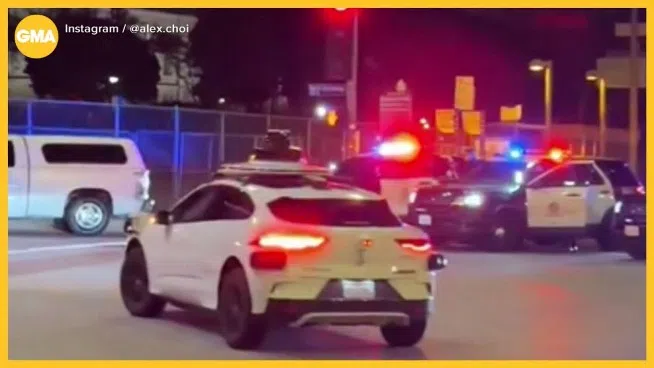

A Waymo autonomous vehicle drove into the middle of an active police standoff in downtown Los Angeles, creating a hazardous situation and raising concerns about the safety of AI navigation. Separately, Santa Monica ordered Waymo to halt overnight charging operations because of noise and light disturbances from its driverless fleet, highlighting the community impacts of AI deployment. [AI generated]
