The information displayed in the AIM should not be reported as representing the official views of the OECD or of its member countries.

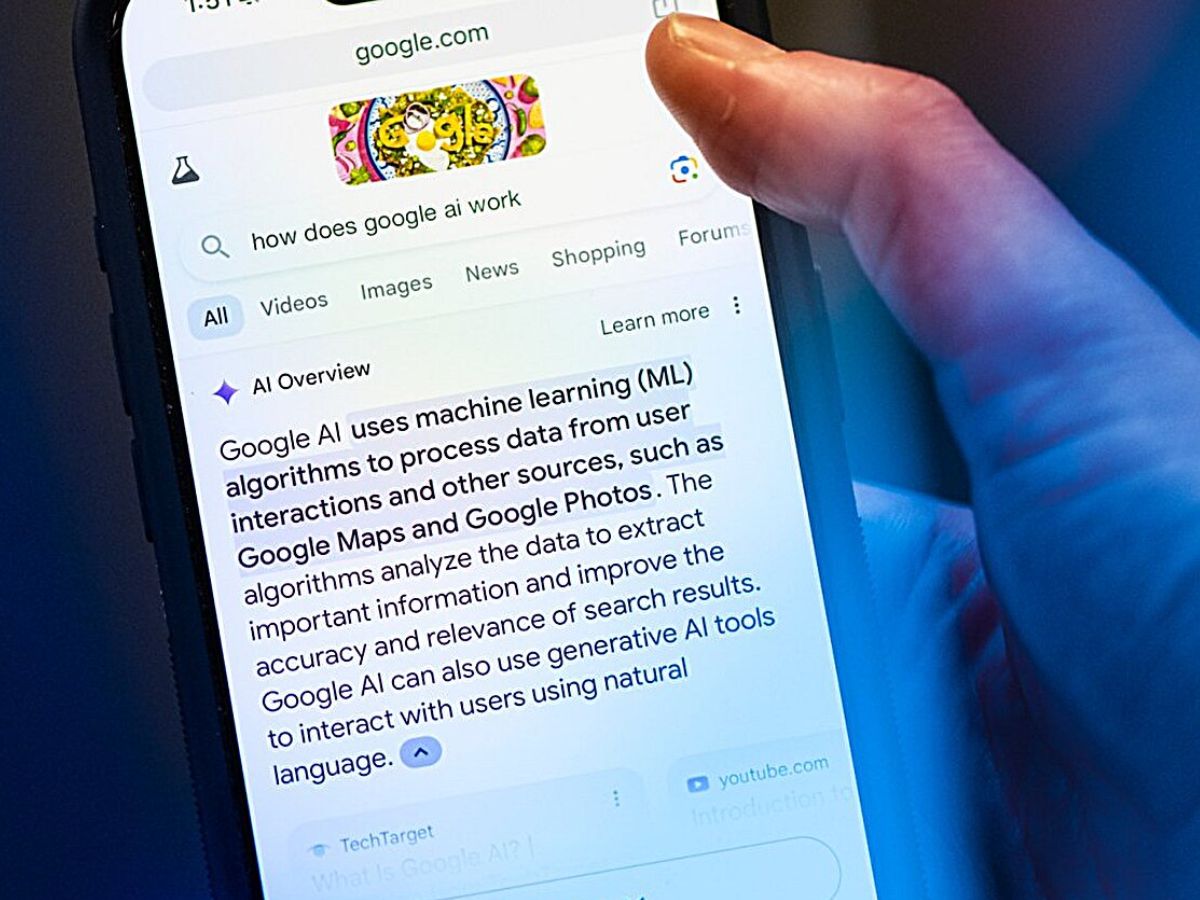

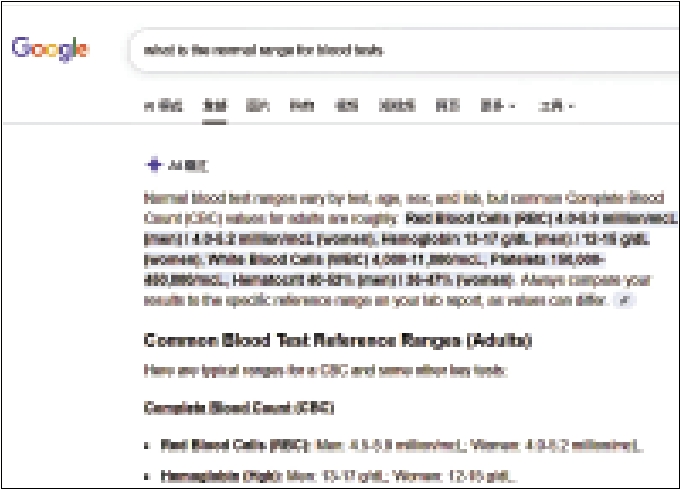

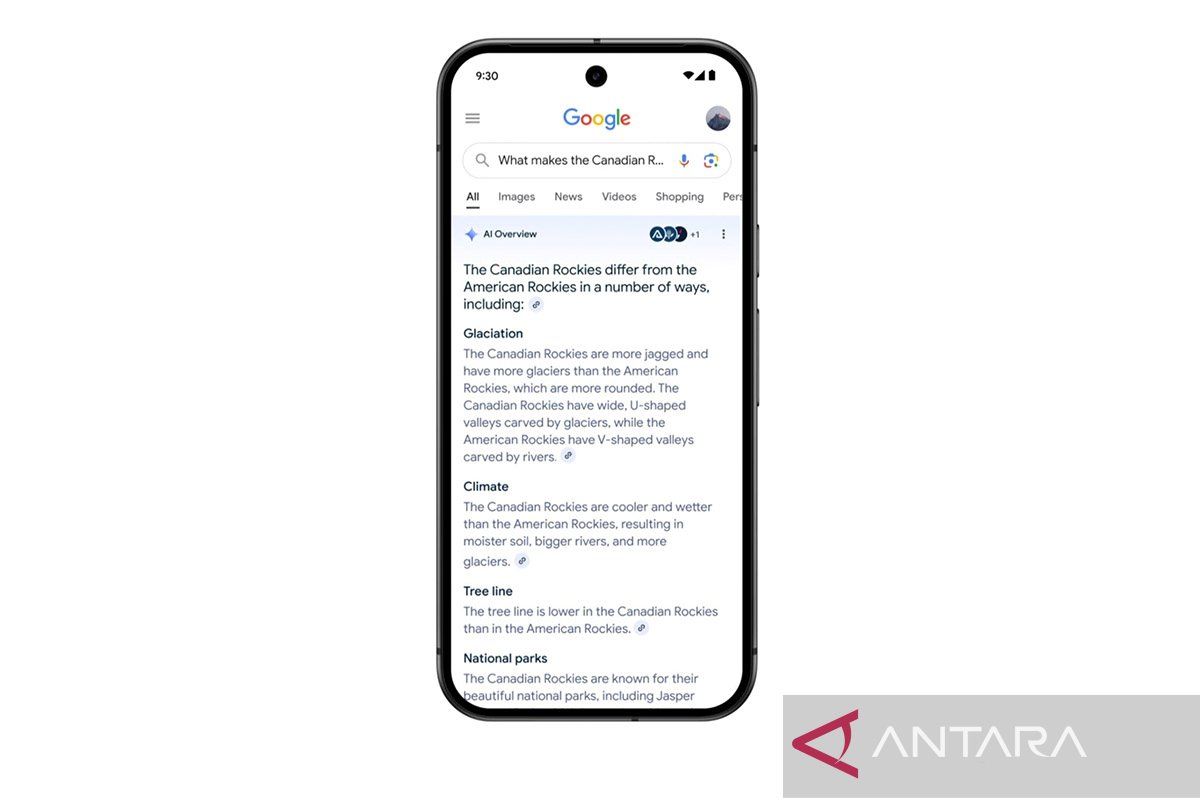

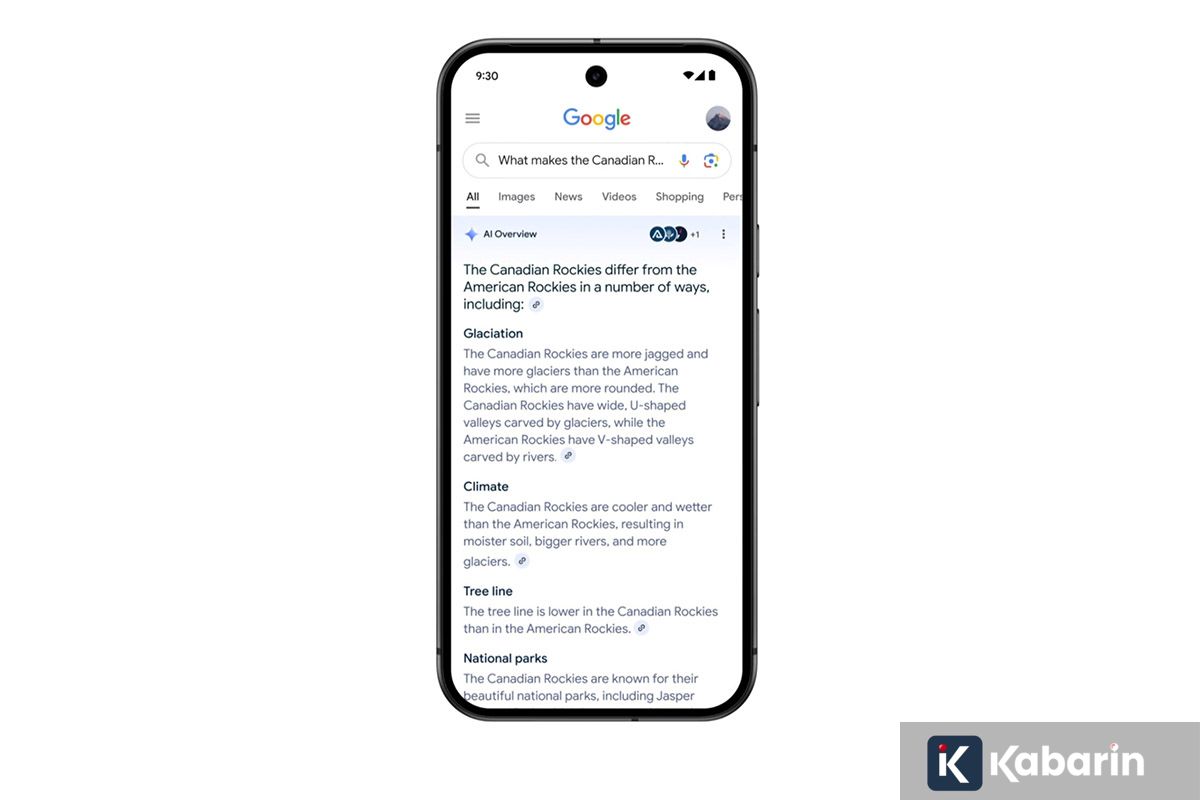

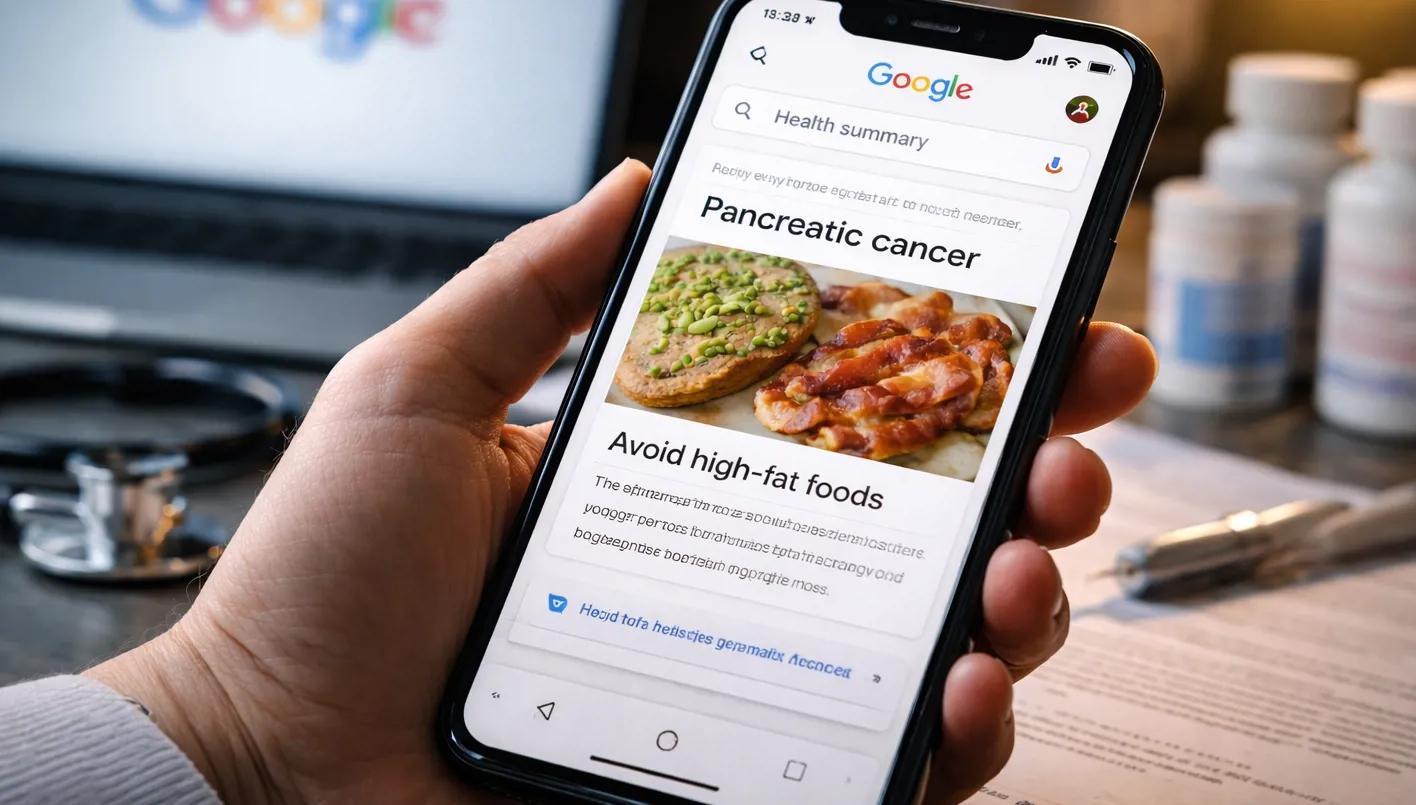

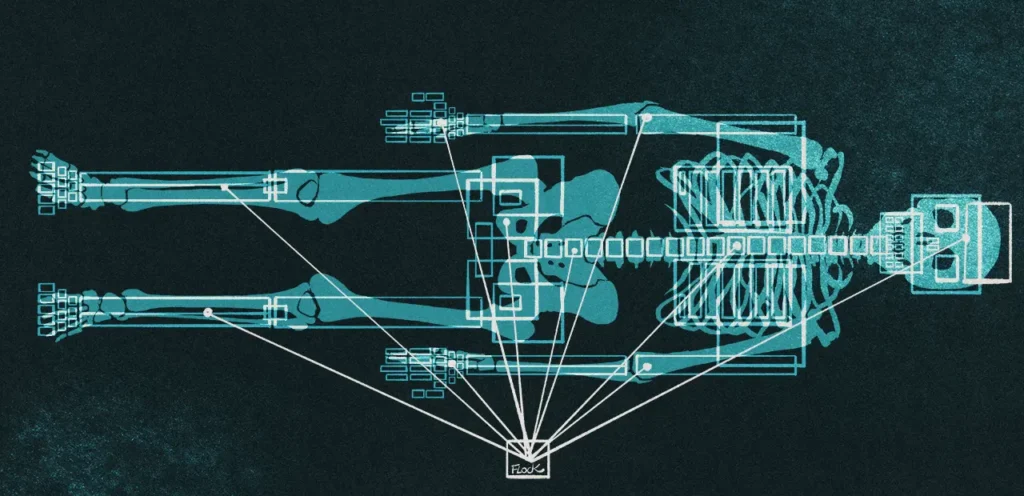

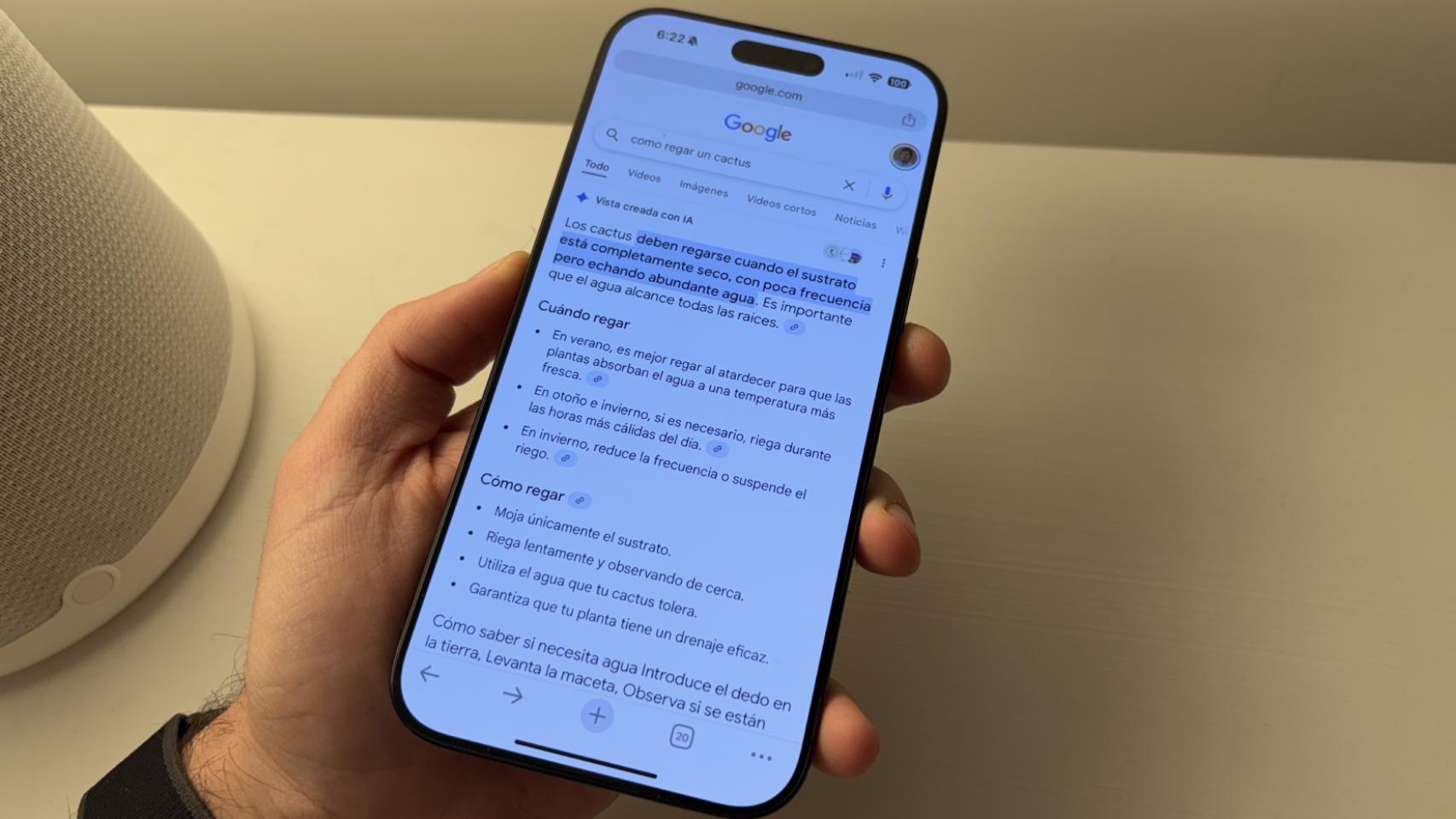

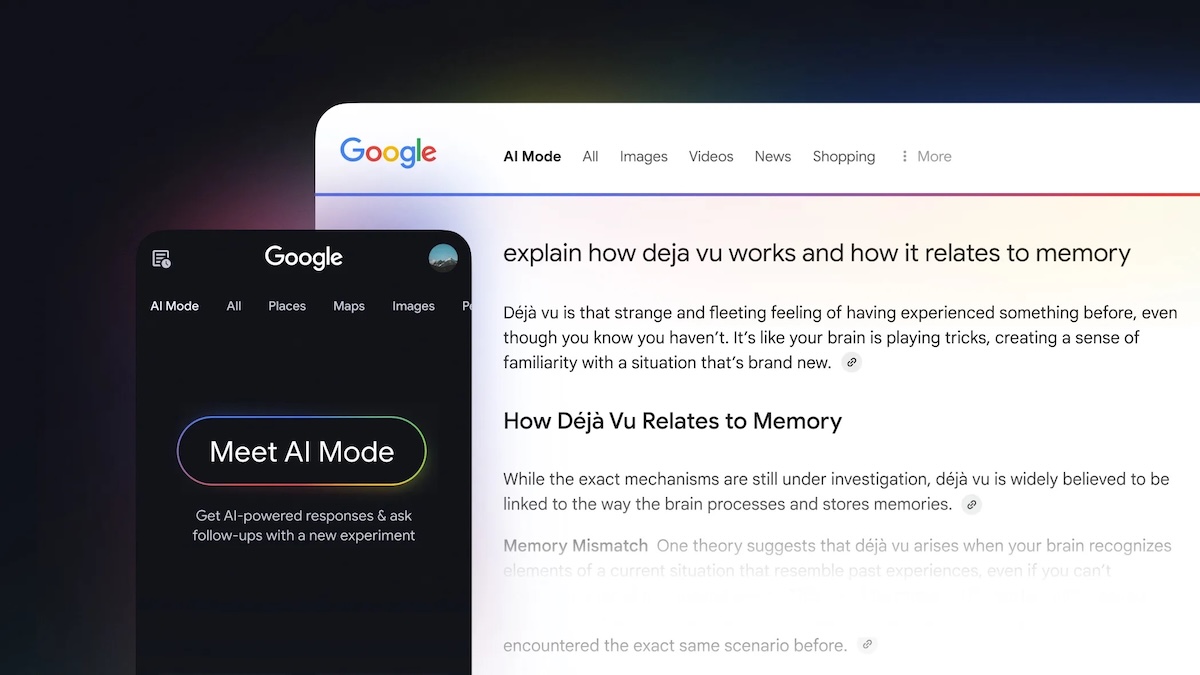

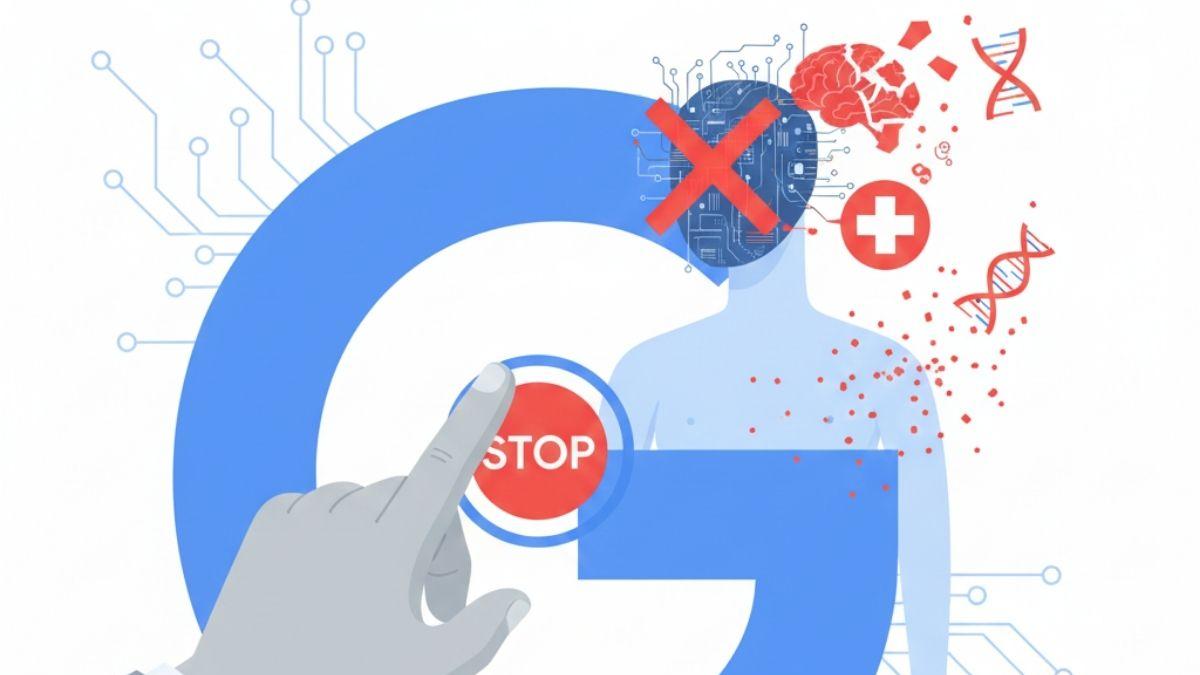

Google's AI Overviews, which generate health information summaries atop search results, have provided inaccurate and misleading medical advice, including dangerous recommendations for cancer and liver disease patients. Experts warn these errors could worsen health outcomes or increase mortality, directly putting users at risk of harm. [AI generated]
