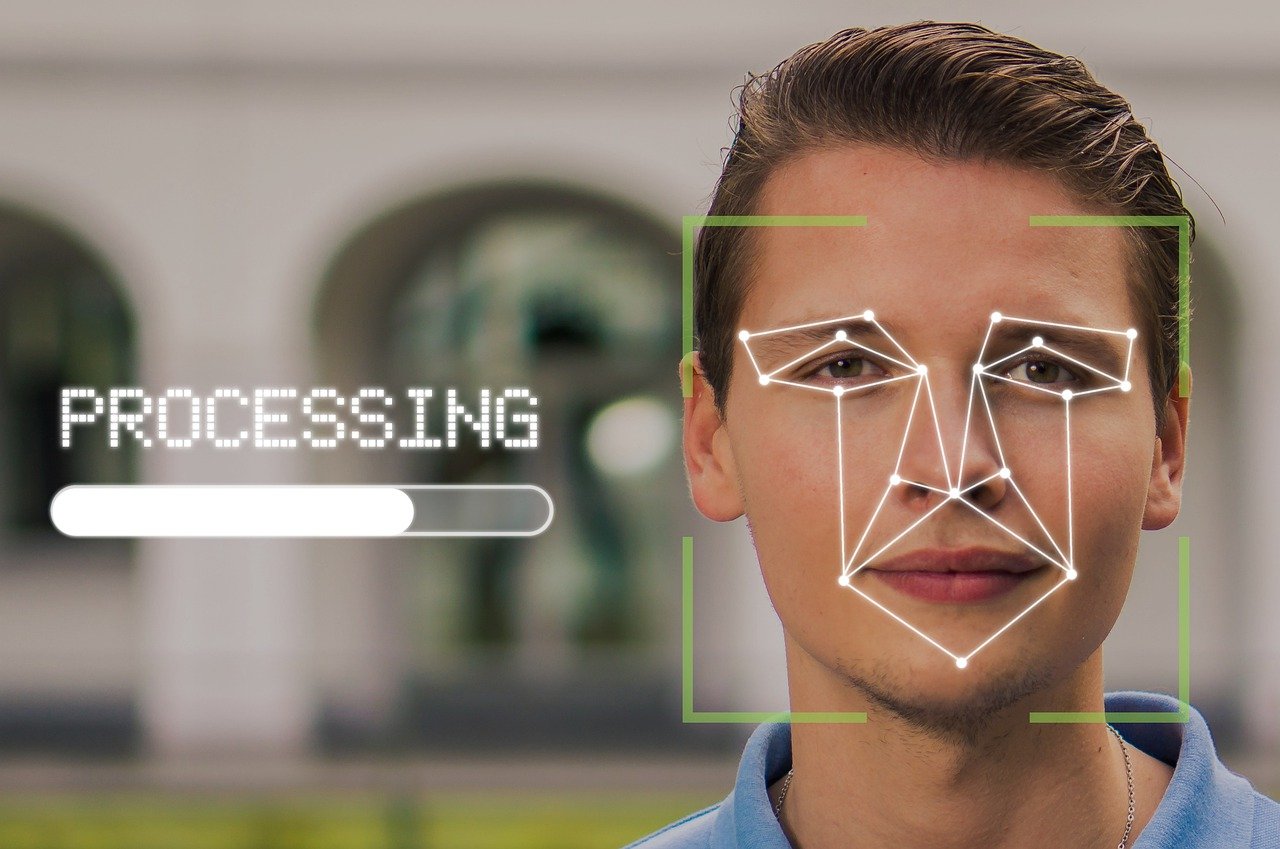

The article explicitly involves AI systems, namely facial recognition biometric systems and body-worn cameras with data-processing capabilities. On its own, the sanction imposed on the university for unlawful use of biometric data would constitute an AI Incident, because it involves realized harm: a violation of privacy rights through the unlawful processing of biometric data. That facial recognition misuse, however, is a past incident that has now been sanctioned. The body cam issue represents only a potential risk (a hazard), since no direct harm is reported. The article covers multiple topics, and its main narrative concerns regulatory actions, opinions, and policy developments rather than a single new incident. It also discusses broader governance and policy responses, which are typical of Complementary Information. Given this broad scope and the focus on regulatory updates and enforcement, Complementary Information is the most appropriate overall classification.