The information displayed in the AIM should not be reported as representing the official views of the OECD or of its member countries.

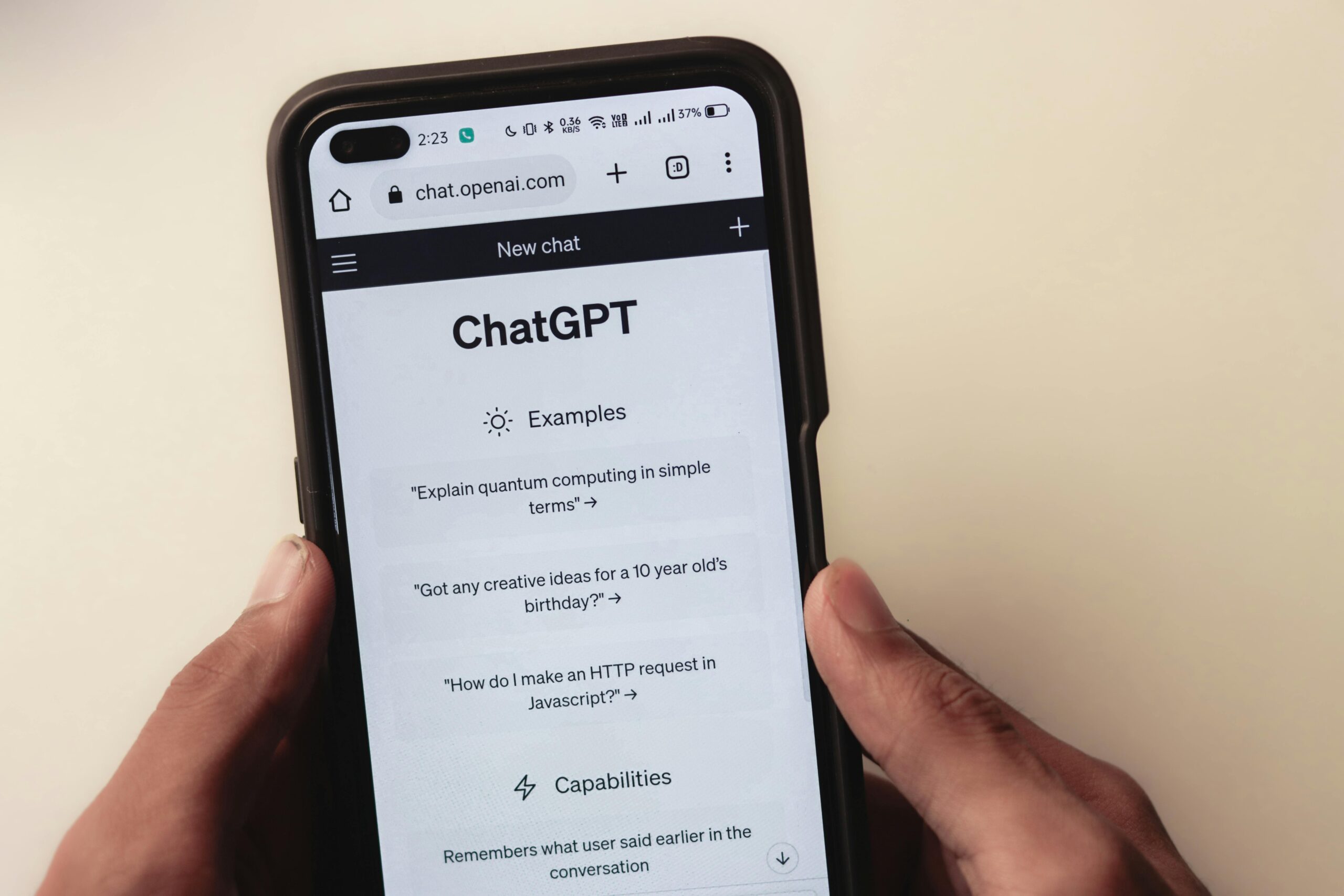

OpenAI's internal systems flagged an 18-year-old ChatGPT user in British Columbia, Canada, for violent tendencies months before she killed eight people and herself. After detecting the concerning behavior, OpenAI closed her account but did not alert police, citing a lack of evidence of an imminent threat. [AI generated]