Circle of trust: Six steps to foster the effective development of tools for trustworthy AI in the UK and the United States

AI subtly permeates people’s daily lives – filtering email and powering navigation apps – but it also manifests in more remarkable ways, such as identifying diseases and helping scientists tackle climate change.

Alongside this great potential, AI also introduces considerable risks. Fairness, reliability, accountability, privacy, transparency, and safety are critical concerns that prompt a crucial question: Can AI be trusted? This question has sparked debates from London to Washington and Brussels to Addis Ababa as policymakers wrestle with the unprecedented challenges and opportunities ushered in by AI.

AI systems and applications are considered trustworthy when they can be reliably developed and deployed without adverse consequences for individuals, groups or broader society. A multitude of frameworks, declarations, and principles have emerged from various organisations worldwide to guide the development of more trustworthy AI in a responsible and ethical way. These include the European Commission’s Ethics Guidelines for Trustworthy AI, the OECD AI Principles, and UNESCO’s Recommendation on the Ethics of AI. These frameworks helpfully outline the foundational elements, desired outcomes and goals of trustworthy AI systems, which are similar to the critical concerns listed above. However, they offer little specific guidance on how to achieve these objectives in practice.

That’s where tools for trustworthy AI come in. These tools help bridge the gap between AI principles and their practical implementation, providing resources to ensure AI is developed and used responsibly and ethically. Broadly speaking, these tools comprise specific methods, techniques, mechanisms, and practices that can help measure, evaluate, communicate, improve, and enhance the trustworthiness of AI systems and applications.

The transatlantic landscape

A RAND Europe study published in May 2024 assessed the present state of tools for trustworthy AI in the UK and the U.S., two prominent jurisdictions deeply engaged in developing the AI ecosystem. Commissioned by the British Embassy in Washington, the study pinpoints examples of tools for trustworthy AI in the UK and the U.S., identifying hurdles, prospects, and considerations for future alignment. The research took a mixed-methods approach, including document and database reviews, expert interviews, and an online crowdsourcing exercise.

Moving from principles to practice

The landscapes of tools for trustworthy AI in the UK and the U.S. are complex and evolving. High-level guidelines are increasingly complemented by more specific, practical tools. The RAND Europe study revealed a fragmented and growing landscape, identifying over 200 tools for trustworthy AI. The U.S. accounted for 70% of these tools, the UK for 28%, and the remainder represented a collaboration between U.S. and UK organisations. Drawing on the classification used by OECD.AI, the U.S. landscape leaned more towards technical tools, which relate to the technical dimensions of the AI model, while the UK produced more procedural tools that offer operational guidance on how AI should be implemented.

Interestingly, U.S. academia was more involved in tool development than its UK counterpart. Large U.S. tech companies are developing wide-ranging toolkits to make AI products and services more trustworthy, while some non-AI companies have their own internal guidelines on AI trustworthiness. However, there’s limited evidence about the formal assessment of tools for trustworthy AI. Furthermore, multimodal foundation models that combine text and image processing capabilities make developing tools for trustworthy AI more complex.

Actions to shape the future of AI

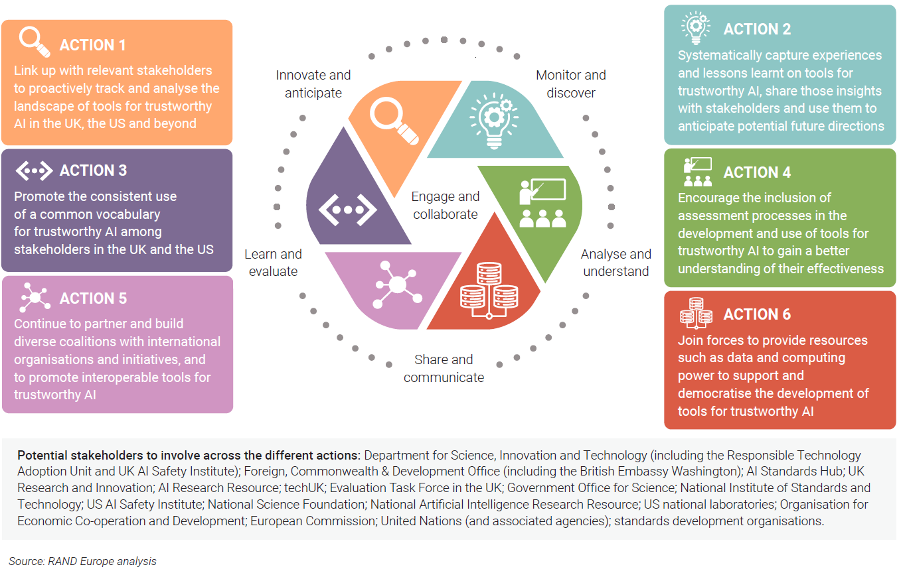

The research led to a series of considerations for policymakers and other stakeholders in the UK and the U.S. When combined with other activities and partnership frameworks, both bilateral and international, these practical actions could cultivate a more interconnected, harmonised and nimble ecosystem between the UK and the U.S.:

- Connect with relevant stakeholders to proactively track and analyse the landscape of tools for trustworthy AI in the UK, the U.S., and beyond.

- Systematically document experiences and lessons learned on tools for trustworthy AI, share those insights with stakeholders and use them to anticipate potential future directions.

- Promote a consistent, shared vocabulary for trustworthy AI among UK and U.S. stakeholders.

- Include assessment processes in developing and using tools for trustworthy AI to better understand their effectiveness.

- Build diverse coalitions with international organisations and initiatives and promote interoperable tools for trustworthy AI.

- Join forces to provide resources such as data and computing power to support and democratise the development of tools for trustworthy AI.

A future of trust?

The actions we suggest are not meant to be definitive or exhaustive. They are topics for further discussion and debate among relevant policymakers and, more generally, stakeholders in the AI community who want to make AI more trustworthy. These actions could inform and support the development of a robust consensus on tools, which would be particularly beneficial for future discussions about broader AI oversight.

The future of AI is not just about trustworthy technology; it’s about trust, a subjective concept that could vary widely between individuals, contexts and cultures. Ideally, more trustworthy AI should lead to greater trust, but this is not always the case. Conversely, a system could be trusted without being trustworthy if its shortcomings are not apparent to its users. This personal and global issue requires careful consideration and collaborative efforts from policymakers, researchers, industry leaders and civil society worldwide.

The analysis in this article represents the views of the authors and is not official government policy. For more information on this research, visit www.rand.org/t/RRA3194-1