From risk to resilience: No-code AI governance in the Global South

As OECD countries advance national AI strategies, a critical question looms for developing nations: how do they govern AI now, without waiting years for top-down frameworks? One answer is to equip local teams to build and continually refine their own guardrails using accessible, no-code tools. Done well, AI shifts from a replacement risk to a skills multiplier. This post outlines how no-code AI tools, paired with locally authored rules, enable developing communities to leapfrog slow, centralised governance models.

The Global South leads in ChatGPT use

A 2023 Boston Consulting Group survey shows India (45%), Morocco (38%), and Argentina and Brazil (32%) leading global ChatGPT use, yet their policy frameworks lag behind this rapid spread. Citizens are already using generative AI for daily tasks while many governments are still drafting strategies: technology diffuses in months, but regulation often takes years. A Lowy Institute analysis puts numbers on the stakes: when AI complements labour rather than replacing it, countries can unlock their share of the USD 17 trillion in cumulative value by 2030.

The AI divide is widening

Benefits cluster in high-income economies, while constraints on skills, compute, and capital hold back many countries in the Global South. UNCTAD’s Technology & Innovation index highlights this readiness gap: frontier-tech adoption in much of the Global South remains structurally limited. At the same time, recent McKinsey surveys indicate that cloud compute services in many African settings cost more than in OECD countries because of small markets, exchange rate instability, and fewer local data centres.

No-code, plain-language AI flips that script. It allows domain experts to operationalise local knowledge with minimal spend. Evidence from no- or low-code studies shows that this citizen developer route can bridge talent gaps quickly.

What exactly is no-code AI?

Any platform that lets users build, prompt or chain AI services through menus or plain text, no Python required. Think Microsoft’s Power Automate, Airtable’s AI scripts, or direct GPT prompt links. The point is not the tool but the access: domain experts can solve local problems without waiting for scarce engineers.

How no-code AI becomes self-governed

If people can rapidly build AI tools for their work, they can also create the rules to govern them. The key is to make both the tools and the guardrails usable by domain experts, not just technologists.

A telling case comes from the United States. A National Park Service facility manager—who had “never written a line of code”—built an AI tool in 45 minutes that automated funding request forms. A process that took two to three days now takes minutes, saving an estimated 14,000 labour days annually across 433 parks (roughly 20 years of staff time).

In Mexico, customs brokers face a different bottleneck: bilingual tariff codes. A firm in Guadalajara now uses Aduanapp’s “Classify”, a no-code, AI-powered tariff tool that “serves as a consultative aid, complementing rather than replacing the expertise of customs brokers.” Clerks upload product details and the system suggests codes for both Mexico’s NICO list and the U.S. HTS in seconds. Aduanapp claims teams can “process dozens or even hundreds of classifications in minutes.” Brokers still make the final call, but early field data show clearance times cut by more than half.

IKEA offers a private-sector parallel. After its “Billie” AI began handling ~47% of routine customer enquiries, IKEA retrained 8,500 employees from customer-enquiry roles to interior-design advisors rather than cutting their jobs. The move freed staff for design, sales, and more complex service work that depends on empathy and creativity, where humans still outperform AI. By retraining and repositioning staff, IKEA generated an extra $1.4 billion in revenue. This is vibe teaming in practice: AI manages the well-defined tasks it excels at while staff take on the creative and interactive roles, so workforce capacity multiplies rather than being substituted away.

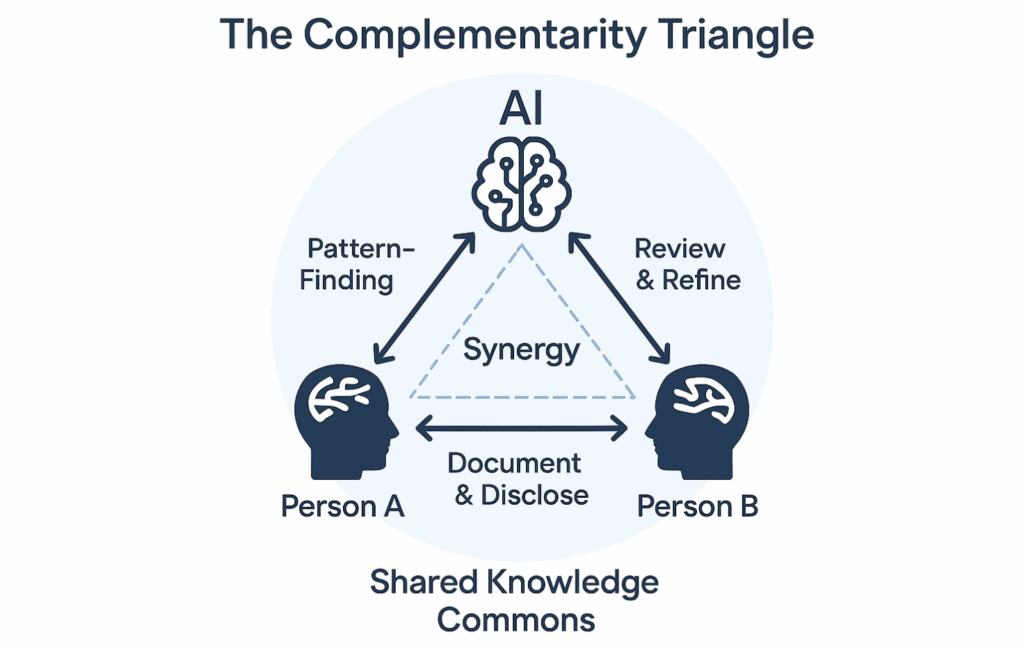

Researchers at the Brookings Institution call this approach “vibe teaming”: a collaborative partnership (for example, two people and an AI assistant) in which the AI handles pattern-finding, drafting, and scaling while people bring context, values, and domain judgment, all shared through a standard knowledge base. That leaves people free to strategise, design, and make decisions. The result is an improvement in a team’s “collective intelligence”: how diverse teams leverage expertise, coordinate focus, and blend thinking styles to solve problems that individuals couldn’t tackle alone.

For the Global South, this approach inverts the usual dependency cycle. Communities need not wait for large IT teams, capital outlays, or year-long coding boot camps. Deep contextual knowledge—gained over years—can be scaled in a matter of hours.

Why does this matter for governance? Because each of the stories above pairs a no-code build with a locally drafted rule-set. The Park Service assistant logs each prompt and triggers human sign-off for hazardous tasks; Aduanapp routes every suggested code to a senior broker for approval. In other words, the same plain-language interface that enables teams to build AI helpers also allows them to embed guardrails, monitor usage, and escalate problems—governance at the speed of adoption.
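For teams that later want to mirror such rules in a script, the log-then-escalate pattern described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how the Park Service or Aduanapp tools are actually built: the `PromptLog` class, the `HAZARD_KEYWORDS` list, and the example prompts are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical keyword list; a real team would draft its own in plain language.
HAZARD_KEYWORDS = ("asbestos", "electrical", "confined space")


@dataclass
class PromptLog:
    """Keeps every prompt and flags the ones that need human sign-off."""
    entries: list = field(default_factory=list)

    def record(self, user: str, prompt: str) -> dict:
        # Flag the prompt if it mentions any locally defined hazard keyword.
        needs_signoff = any(word in prompt.lower() for word in HAZARD_KEYWORDS)
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "prompt": prompt,
            "needs_signoff": needs_signoff,
        }
        self.entries.append(entry)
        return entry


log = PromptLog()
routine = log.record("facility_manager", "Draft a funding request for trail signage")
risky = log.record("facility_manager", "Draft a funding request for asbestos removal")
```

Here the routine request passes straight through, while the second is held for a human reviewer. A keyword match is deliberately crude; the point is that the rule is short enough for the team that uses the tool to read and amend it.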

A five-step governance toolkit for no-code AI

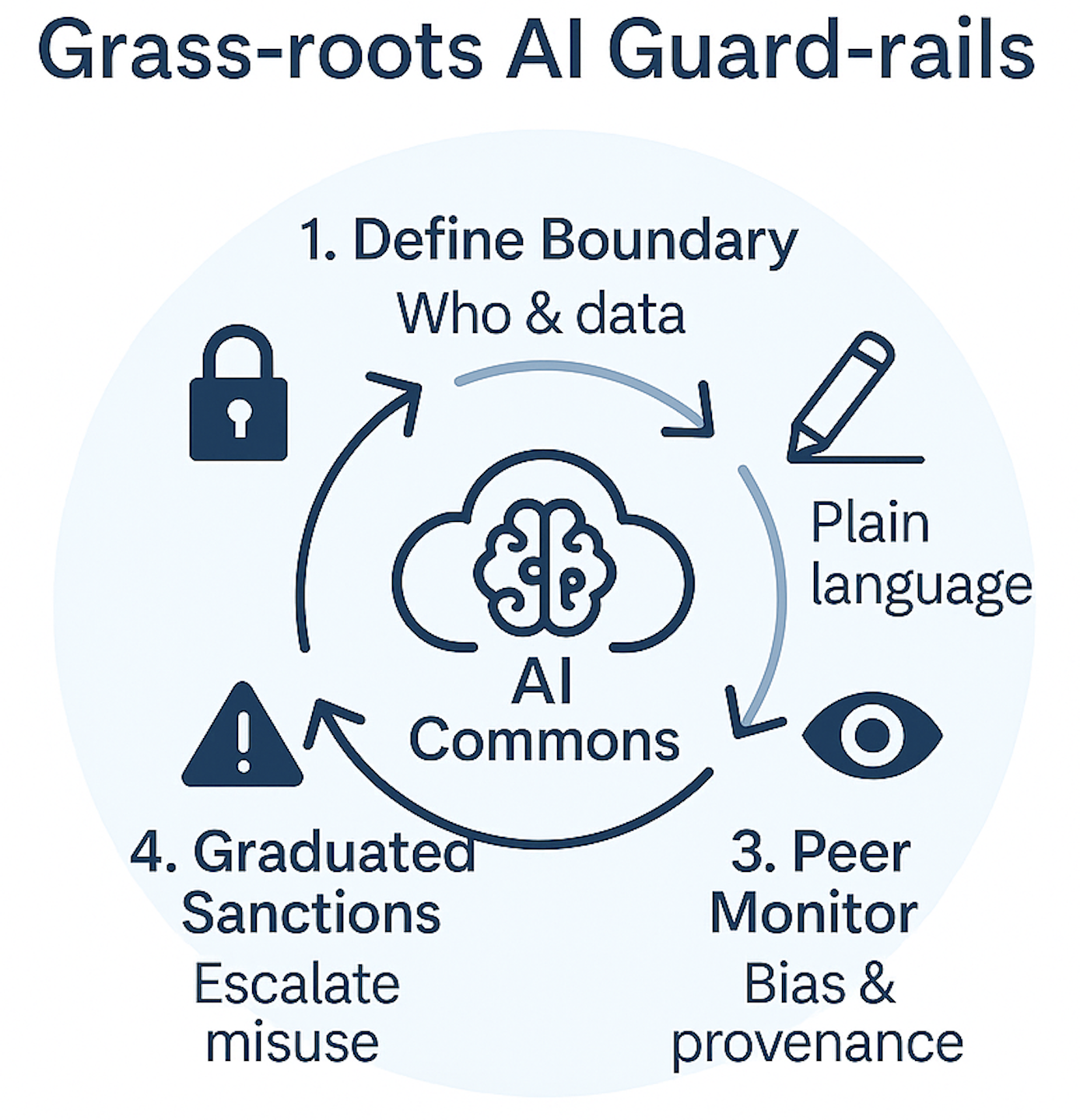

Elinor Ostrom’s fieldwork showed that commons thrive when users set rules, monitor peers and apply graduated sanctions. Commons are things we share that are not privately owned or government-run, such as language. Task-based economics finds that technology lifts wages only when it complements human judgment. Both insights travel well to grassroots AI governance. ASEAN officials refer to this as a “Third Way”: pro-innovation, risk-based, and community-led—neither laissez-faire nor imposing blanket bans. Along the way, they are turning AI governance into a source of geopolitical power. This practice-informed scholarship forms the foundation for the five-step governance toolkit.

The framework also draws on examples from Kenya, India, and the United States, offering a practical, repeatable cycle for communities to develop, monitor, and refine their own AI governance.

- Step 1: Define access boundaries. Who can use AI tools? Which datasets are in scope? Role-based permissions reduce risk without stalling productivity. One-page guidelines in local languages beat lengthy, opaque policies.

- Step 2: Co-create usage rules. Teams write plain-language rules together and pin them in shared workspaces. People are more likely to follow rules they have written themselves. Locally authored norms also lower the risk of unsafe prompting.

- Step 3: Implement peer monitoring. A two-minute bias and provenance check before sharing outputs catches subtle errors early. Collective responsibility becomes routine quality control.

- Why not rely on external audits? First, they occur infrequently and are costly. Peer checks, on the other hand, happen every day. Second, peers hold rich context: they know when a district’s rainfall data are wrong or when a headline is culturally off. Finally, peer review fosters shared accountability, the social glue that Ostrom found essential for commons success. Formal audits still matter; peer monitoring makes sure there is something worth auditing.

- Step 4: Red-team and escalate. Monthly “red-team sprints” deliberately probe for unsafe behaviour. Log issues and define a clear “stop-the-line” path for high-risk outputs. This aligns with international safety evaluations but remains practical.

- Step 5: Apply graduated responses. Use proportionate consequences: friendly nudges for minor slips, team-lead reviews for recurring problems, and temporary access removal for serious breaches. Avoid both overreaction and impunity.
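Steps 1 and 5 are the easiest to express as explicit rules. The sketch below shows one way a team might write them down, assuming a simple role-to-dataset table and a slip counter. The role names, dataset names, and the threshold of two prior slips are hypothetical choices for illustration, not part of the toolkit itself.

```python
# Step 1 (access boundaries): hypothetical roles and the datasets each may use.
ROLE_PERMISSIONS = {
    "extension_worker": {"crop_advice"},
    "team_lead": {"crop_advice", "member_records"},
}


def can_use(role: str, dataset: str) -> bool:
    """Return True if the role may run AI tools on the given dataset."""
    return dataset in ROLE_PERMISSIONS.get(role, set())


def graduated_response(prior_slips: int, serious: bool = False) -> str:
    """Step 5: choose a proportionate consequence.

    The cut-off of two prior slips for 'recurring' is an assumption;
    each team would set its own.
    """
    if serious:
        return "temporary access removal"
    if prior_slips >= 2:
        return "team-lead review"
    return "friendly nudge"
```

For example, `can_use("extension_worker", "member_records")` returns `False`, and a first minor slip (`graduated_response(0)`) yields only a friendly nudge. Unknown roles get no access by default, which keeps the rule sheet short.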

This toolkit maps directly to the OECD AI Principles (human-centred, fairness, transparency, accountability, safety, responsibility) and the NIST AI Risk Management Framework (“Govern, Map, Measure, Manage”).

Bottom-up governance already works

India and beyond – Farmer Chat. Digital Green’s Farmer Chat enables extension workers and farmers to interact with AI through voice, text, or images in local languages. It lowers literacy barriers and embeds community knowledge into the system. AI amplifies, rather than replaces, local expertise.

U.S. counties – public AI registers. A public AI register template from the Center for Democracy & Technology has been adopted by dozens of localities. Each publishes an inventory of AI tools, their data sources and a grievance contact. Peer governance emerges without waiting for state legislation to be enacted.
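A register of this kind is a small data structure. The sketch below shows what one entry might look like when published as JSON. The field names, the example tool, and the contact address are hypothetical and do not reproduce the Center for Democracy & Technology template.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class RegisterEntry:
    """One AI tool in a public register (field names are assumptions)."""
    tool_name: str
    data_sources: list
    grievance_contact: str


def publish(entries: list) -> str:
    """Serialise the register so it can be posted on a public page."""
    return json.dumps([asdict(e) for e in entries], indent=2, ensure_ascii=False)


entry = RegisterEntry(
    tool_name="Permit triage assistant",      # hypothetical tool
    data_sources=["permit applications"],      # hypothetical source
    grievance_contact="ai-feedback@example.org",
)
register_json = publish([entry])
```

Keeping the entry to three fields mirrors the inventory described above: which tool, which data, and whom to contact when something goes wrong.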

Across settings, one pattern recurs: local actors write the rules, test them in real-time, iterate quickly, and share lessons upward once national bodies are ready.

Why this matters now

The Global North-South AI divide will continue to widen if governance and capacity building remain centralised and slow. Bottom-up approaches enable communities to capture immediate gains and establish safety norms in parallel.

The IMF finds that AI’s economic dividends could be more than twice as large in advanced economies as in low-income countries, owing to differences in preparedness, exposure, and infrastructure capacity. This risk of divergence highlights the vital need for local capacity building and inclusive governance.

Conditions in emerging markets reinforce this risk. IMF analysis finds that many low- and middle-income countries lag significantly in AI readiness, particularly in digital infrastructure, regulation, and skills. Underlying infrastructure costs also vary widely by region: IP transit pricing in major hubs for the Global South is three to four times higher than in European centres such as London. Unless governance and capability-building scale in tandem, adoption gaps risk entrenching productivity divides.

UNESCO’s 2023 report, Indigenous People‑Centred Artificial Intelligence: Perspectives from Latin America and the Caribbean, calls for participatory AI policies that embed Indigenous values, practices and data sovereignty from start to finish. It warns that externally developed tools risk skewing cultural norms and misappropriating traditional knowledge. The report emphasises that grassroots AI governance is not only efficient but also essential to protect local norms and foster inclusive, values-aligned AI adoption.

In ASEAN, governments are articulating a “Third Way” on AI safety: practical, risk-based and innovation-friendly, grounded in community accountability rather than blanket bans or laissez-faire minimalism. Bottom-up guardrails align with that stance—especially when they are written and enforced by the people who use the systems.

Taking the first steps

The Global South is already adopting AI on a large scale. With the right tools, this adoption can translate into a competitive advantage—while protecting local values and interests.

Ministries, TVET bodies, co-operatives and SME clusters should:

- Adapt the five-step framework to local needs.

- Start small with pilots that solve a concrete bottleneck.

- Measure outcomes: labour days saved, bias incidents detected, productivity or yield gains.

- Share evidence with national policymakers as broader strategies take shape.

Day 30: pick one high-volume task, build a no-code flow, and approve a one-page rule sheet.

Day 60: run the first red-team drill; publish a short AI register.

Day 90+: once enough data has been collected, measure the change in labour hours and share the results with the OECD.AI community.
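The Day 90 measurement can be as simple as comparing hours per task before and after the pilot. The sketch below assumes a team records a baseline and a pilot figure for one task type; the function names and the numbers in the example are hypothetical.

```python
def labour_hours_saved(baseline_hours: float, pilot_hours: float, tasks: int) -> float:
    """Total hours saved across all tasks completed during the pilot."""
    return (baseline_hours - pilot_hours) * tasks


def percent_reduction(baseline_hours: float, pilot_hours: float) -> float:
    """Percentage drop in hours per task relative to the baseline."""
    if baseline_hours <= 0:
        raise ValueError("baseline_hours must be positive")
    return (baseline_hours - pilot_hours) / baseline_hours * 100


# Hypothetical pilot: a form that took 16 hours now takes 0.5, done 40 times.
saved = labour_hours_saved(16, 0.5, 40)
reduction = percent_reduction(16, 0.5)
```

With these illustrative inputs, `saved` is 620 hours and `reduction` is about 96.9%. Reporting both figures helps: the total shows scale, while the percentage lets sites with different workloads compare results.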

Success hinges on local ownership, not imported templates. It is about training teams to produce and enforce their own guardrails, tuned to local risks, data realities and community values. That capability will matter even more as AI systems evolve.

Progress can be tracked in three public dashboards (labour days saved, bias flags caught, and small-enterprise revenue lift), updated regularly. Any ministry, co-op or SME that submits anonymised metrics will appear on the dashboards. Early transparency makes success visible and can accelerate policy diffusion.

What the OECD and GPAI can do next

The OECD and GPAI can support member states and partners by publishing a “No-Code Governance Starter Kit” with open templates, public dashboard tools, and red-teaming protocols. They could also help regional pilot sites to collect outcome data and share lessons across contexts by building a practical repository of real-world AI governance innovations.

The technology exists. Communities are ready. Our choice is whether to empower them to build their own AI future—or leave them waiting for solutions designed elsewhere.