Artificial intelligence and intellectual property: Navigating the challenges of data scraping

The Global Partnership on AI (GPAI) has released a new report examining the intellectual property (IP) implications of how organisations collect and use data to train AI systems, with a particular focus on data scraping. The report analyses approaches and potential solutions for addressing IP considerations in AI development. GPAI approved and released it on 30 January 2025. The report benefited from input from the OECD’s AI Governance Working Party (AIGO) and from GPAI reviews and workshops, notably those of the GPAI IP Project, co-chaired by Lee Tiedrich and Yann Dietrich. The project is part of the GPAI Working Party on Innovation and Commercialisation, co-chaired by Robert Kroplewski and Laurence Liew. The report also benefited from input from Carolyn Blankenship and Tagui Ichikawa from Hitotsubashi University (Japan). The GPAI IP Project published reports in 2023 and 2022, which together include information about the project’s members.

Understanding the challenge

The training of AI models, particularly large language models, relies heavily on access to extensive datasets. A widely used method for collecting such data is “data scraping,” the automated extraction of information from third-party websites, databases, or social media platforms. Data scraping can implicate several types of IP and related rights, including copyright, database rights, trademarks, trade secrets, publicity rights, and moral rights. Complicating matters further, existing IP laws, many of which predate modern AI practices, differ across jurisdictions. Unsurprisingly, litigation over data scraping, spanning several legal issues, is increasing globally, with prominent cases emerging in the United States, the European Union, and beyond. Additionally, concerns about AI-generated outputs, particularly those that mimic an individual’s style, voice, or likeness without authorisation, have prompted varied legal responses to protect rights and prevent misuse. The recently released International Scientific Report on the Safety of Advanced AI cites the protection of IP rights as one of the concerns raised by advanced AI systems.

Data scraping is a widespread practice encompassing various methods but lacks a universally accepted definition. Furthermore, different actors in the data scraping ecosystem encounter distinct legal issues. While data scraping has garnered much attention for its commercial uses, it is also used to support research and other endeavours, suggesting the need for policy tools tailored to different use cases.

The report highlights how data scraping affects different stakeholders in the AI ecosystem:

- Content creators and other rights holders face challenges protecting their IP and other rights as their works are scraped for AI training.

- Research institutions and academia rely on data scraping for scientific advancement.

- AI data aggregators make scraped data available to third parties, either free of charge or for a fee, and include both non-profit and commercial entities.

- AI developers need access to vast datasets to train their models.

- Technology companies and platforms can act both as sources of scraped data and as scrapers themselves.

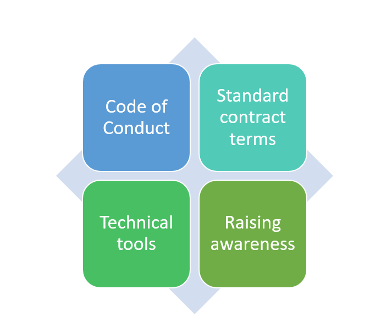

What can we do? Voluntary codes of conduct, standardised contract terms and technical tools are among the recommendations

The report proposes several policy approaches to address these challenges, which can be used together: a) a voluntary data scraping code of conduct, b) standardised technical tools, c) standardised contract terms, and d) awareness-raising initiatives. While the report focuses on IP and similar rights, the proposed policy approaches potentially provide policymakers with the flexibility to holistically address IP, privacy, and other issues presented by data scraping in an internationally coordinated manner while accounting for relevant limitations and exceptions and other policy differences across jurisdictions. Such policy tools could be designed to adapt and evolve.

Policymakers could also draw on these approaches when considering changes to applicable laws. Importantly, developing these policy tools could help policymakers address core issues: recognising important terminology and creating standardised definitions and measures that balance support for innovation with effective protection of IP and other rights, in line with the OECD AI Principles and other relevant multilateral efforts such as the G7 Hiroshima AI Process (Government of Japan, 2024). The policy approaches could be particularly effective if developed with global, multi-stakeholder input from across the data ecosystem, to help ensure that diverse viewpoints are appropriately reflected.

1. A voluntary code of conduct

- A key recommendation is the development of a voluntary “data scraping code of conduct” that could establish broadly applicable provisions while also providing specific guidelines for different actors in the AI ecosystem. These provisions could address the distinct roles of AI data aggregators and users of scraped data. To promote consistency, the code could include standard terminology, ensuring a shared understanding of data scraping activities among stakeholders. It could also include mechanisms for monitoring adherence, such as a registry system, and offer recommendations for transparency and documentation practices. Finally, the code could incorporate standard contract terms.

2. Standard technical solutions

- The report emphasises the importance of developing standardised technical tools that can help protect IP rights and make it easier for rights holders to manage access to their data. These tools could include data access control mechanisms, automated contract monitoring, and direct payment systems. Standardising such tools could streamline compliance for organisations while making it simpler for rights holders to protect their rights across multiple platforms.
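One existing, widely used example of a data access control mechanism is the robots.txt file defined by the Robots Exclusion Protocol, which lets a site state which crawlers may fetch which paths. This example is not taken from the report, and robots.txt is a voluntary convention rather than an enforcement tool. As an illustrative sketch only, the Python snippet below uses the standard library's `urllib.robotparser` to check whether a crawler may fetch a URL. The crawler name `ExampleAITrainingBot`, the rules, and the `may_scrape` helper are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt a rights holder might publish: it blocks a
# (hypothetical) AI-training crawler entirely and keeps all other
# crawlers out of /private/.
ROBOTS_TXT = """\
User-agent: ExampleAITrainingBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def may_scrape(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url.

    Hypothetical helper: robots.txt is voluntary, so compliance
    depends entirely on the crawler honouring the result.
    """
    parser = RobotFileParser()
    # Parse the rules from text rather than fetching them over the network.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    checks = [
        ("ExampleAITrainingBot", "https://example.com/articles/1"),
        ("ResearchCrawler", "https://example.com/articles/1"),
        ("ResearchCrawler", "https://example.com/private/report"),
    ]
    for agent, url in checks:
        print(agent, url, may_scrape(ROBOTS_TXT, agent, url))
```

With these rules, the training crawler is refused everywhere, while other crawlers are refused only under `/private/`. A standardised tool of the kind the report describes would presumably go further, for example by expressing licensing or payment terms, which robots.txt cannot do.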

3. Standard contract terms

- Standard contract terms could address legal and operational issues associated with data scraping, streamline negotiations, and reduce transaction costs. These terms could serve as optional starting points, leaving organisations free to negotiate their own specific conditions. As explained in another recent report, an AI Wonk blog post, and prior GPAI reports, the development of these terms would benefit from collaboration among multiple stakeholders, and the terms could be tailored to different use cases, ranging from non-profit research to commercial applications.

4. Awareness-raising initiatives

- The report stresses the importance of raising awareness among all stakeholders regarding data scraping and its legal implications, with the objective of empowering them with information on how to protect and manage their rights. This includes assisting rights holders in understanding their protections, educating AI system users about responsible usage, and ensuring that all participants in the AI data ecosystem understand their roles and responsibilities.

The report acknowledges that while some jurisdictions may wish to update their IP laws in the long term, flexible and voluntary measures can help address immediate challenges and can accommodate diverse legal approaches across jurisdictions. These recommendations align with the OECD AI Principles, which promote responsible AI innovation while protecting rights holders, and with the Hiroshima AI Process Code of Conduct.

As we continue to navigate this rapidly evolving landscape, the report provides guidance for policymakers, developers, rights holders, and other stakeholders. It represents an important step toward a framework that both respects IP and other rights and fosters technological advancement.

For more information, read the full report: Intellectual property issues in artificial intelligence trained on scraped data.