AI Risk & Accountability

Exploring how to ensure AI systems are accountable to the public.


Experts

The Expert Group on AI Risk & Accountability comprises multidisciplinary, cross-sector experts in AI and foresight from around the world.

AI Risk & Accountability Group Co-chairs

From the AI Wonk

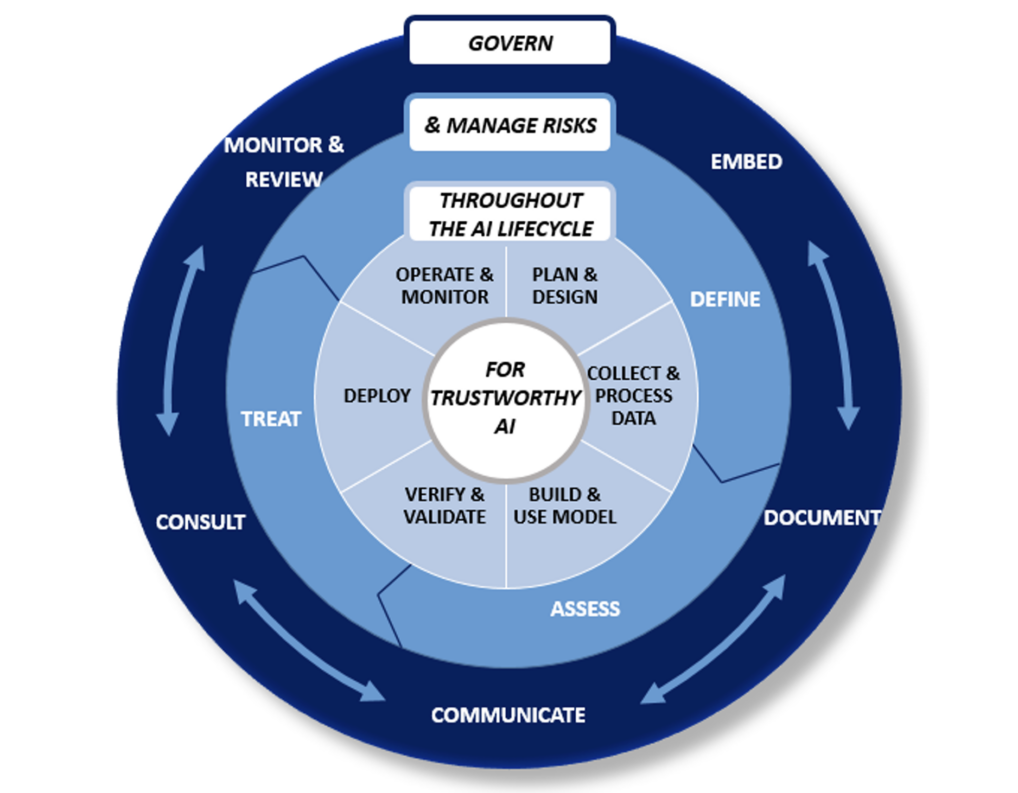

High-level AI risk management interoperability framework: Governing and managing risks throughout the lifecycle for trustworthy AI

From the report Common guideposts to promote interoperability in AI risk management

Artificial intelligence and responsible business conduct

As policymakers across OECD member and partner countries integrate AI risk management into regulations and other market access requirements, proactively managing risks can help support the burgeoning AI industry and facilitate investment in responsible AI systems. The Expert Group on AI Risk & Accountability is developing practical guidance for companies in the AI value chain on how to conduct due diligence: identifying, preventing, mitigating and remedying harmful impacts related to AI systems. This guidance is based on the OECD Due Diligence Guidance for Responsible Business Conduct and rooted in the OECD AI Principles.