These tools and metrics are designed to help AI actors develop and use trustworthy AI systems and applications that respect human rights and are fair, transparent, explainable, robust, secure and safe.

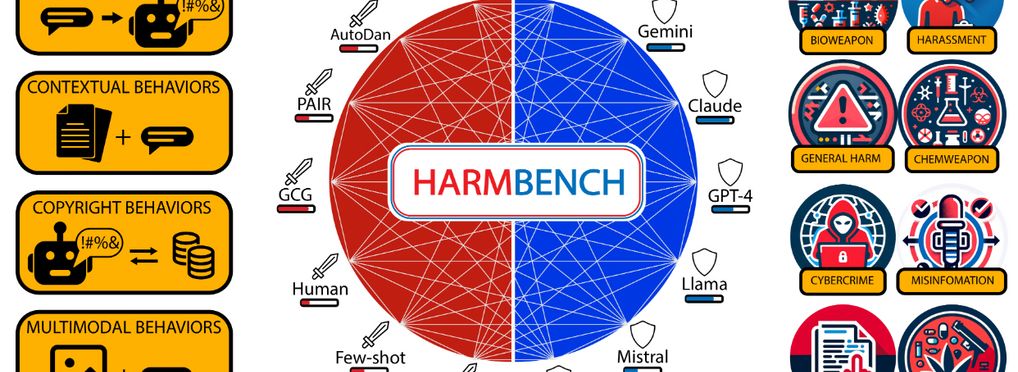

HarmBench

HarmBench is a standardised evaluation framework for automated red teaming. HarmBench has out-of-the-box support for transformers-compatible LLMs, numerous closed-source APIs, and several multimodal models.

Automated red teaming holds substantial promise for uncovering and mitigating the risks associated with the malicious use of large language models (LLMs) highlighting the need for standardised evaluation frameworks to rigorously assess these methods. To address this need, HarmBench was designed with key considerations that were previously overlooked in red teaming evaluations. Using HarmBench, a large-scale comparison of 18 red teaming methods and 33 target LLMs and defenses was conducted, yielding novel insights. In addition, a highly efficient adversarial training method that significantly enhances LLM robustness across a wide range of attacks, demonstrates how HarmBench enables co-development of attacks and defenses.

There are two primary ways to use HarmBench:

- (1) To evaluate red teaming methods against a set of LLMs.

- (2) To evaluate LLMs against a set of red teaming methods.

About the tool

You can click on the links to see the associated tools

Tool type(s):

Country/Territory of origin:

Type of approach:

Usage rights:

Target groups:

Target users:

Benefits:

Geographical scope:

Programming languages:

Tags:

- evaluation

- robustness

- red-teaming

- llm

Use Cases

Would you like to submit a use case for this tool?

If you have used this tool, we would love to know more about your experience.

Add use case

Partnership on AI

Partnership on AI