
Assessing Trustworthy AI in times of COVID-19. Deep Learning for predicting a multi-regional score conveying the degree of lung compromise in COVID-19 patients

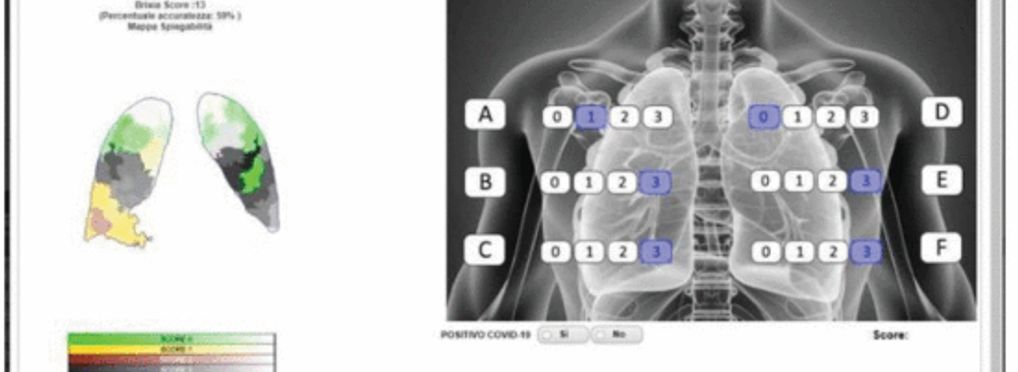

The BS-Net system is an end-to-end AI system that estimates the severity of lung damage in a COVID-19 patient by assigning the corresponding Brixia score to a chest X-ray (CXR) image. The system is composed of multiple task-driven deep neural networks working together and was developed during the first pandemic wave. After clearance from the Institutional Board (Comitato Etico di Brescia) in mid-May 2020, the system was trained and its performance was verified on a large portion of all CXRs acquired from COVID-19 patients during the first pandemic peak at ASST Spedali Civili of Brescia, Italy.
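As a rough illustration of what a multi-regional score looks like as data, the sketch below assumes the commonly described form of the Brixia score: six lung zones, each rated from 0 to 3, summed into a global score from 0 to 18. This layout comes from the general description of the Brixia score, not from this page, and the zone labels `A` to `F` and the `BrixiaScore` class are hypothetical names chosen for the example. It is not BS-Net code and says nothing about how the networks produce the per-zone values.

```python
from dataclasses import dataclass
from typing import Dict

# Assumption: six lung zones labelled A-F, each scored 0..3.
ZONES = ("A", "B", "C", "D", "E", "F")
MAX_ZONE_SCORE = 3


@dataclass(frozen=True)
class BrixiaScore:
    """Hypothetical container for a per-zone severity score."""

    zones: Dict[str, int]

    def __post_init__(self) -> None:
        # Require exactly the six expected zones, no more and no fewer.
        if set(self.zones) != set(ZONES):
            raise ValueError(f"expected zones {ZONES}, got {sorted(self.zones)}")
        # Each zone value must be an integer in the range 0..3.
        for zone, value in self.zones.items():
            if not isinstance(value, int) or not 0 <= value <= MAX_ZONE_SCORE:
                raise ValueError(f"zone {zone} has invalid score {value!r}")

    @property
    def global_score(self) -> int:
        """Sum of the six zone scores, so the result lies in 0..18."""
        return sum(self.zones.values())


if __name__ == "__main__":
    example = BrixiaScore({"A": 1, "B": 2, "C": 3, "D": 0, "E": 1, "F": 2})
    print(example.global_score)
```

Keeping the per-zone values alongside the global sum preserves the regional information that a single severity number would hide.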

To facilitate clinical analyses and considerations, the system has been experimentally deployed in the radiology department of ASST Spedali Civili of Brescia, Italy, since December 2020. A team of radiologists working at the hospital assisted the engineers in designing the implemented solutions.

Research Questions

We conducted a post-hoc self-assessment focused on answering the following two questions.

1) What are the technical, medical, and ethical considerations determining whether or not the system in question can be considered trustworthy?

2) How may the unique context of the COVID-19 pandemic change our understanding of what trustworthy AI means in a pandemic?

Full paper: https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9845195

Benefits of using the tool in this use case

The Z-Inspection is designed to allow us: 1) to assess the design process that led to the conception of the AI system itself (usually with some representatives of the organization that created the system); 2) to address issues related to the AI system’s source code as well as issues related to the training of the algorithm, especially the dataset used for the training; and 3) to consider the different impacts the AI system might have on users, patients, and society in general.

The interdisciplinary approach and the specific procedure of the Z-Inspection make it possible to raise all relevant questions (related to the three components) in a coherent and unified process.

Shortcomings of using the tool in this use case

The Z-Inspection must be understood as an ongoing process for highlighting potential ethical issues, rather than as a procedure designed to provide a final answer regarding the ethical worth of an AI system.

Learnings or advice for using the tool in a similar context

The Z-Inspection process can itself be assessed as a form of what has been called ethics-based auditing (EBA), which is not “a kind of auditing conducted ethically, nor [...] the ethical use of ADMS [Automated Decision-Making Systems] in auditing, but [...] an auditing process that assesses ADMS based on their adherence to predefined ethics principles [...]. EBA shifts the focus of the discussion from the abstract to the operational, and from guiding principles to managerial intervention throughout the product life cycle, thereby permeating the conceptualization, design, deployment and use of ADMS.”

Although a large variety of tools has been designed to support the governance of AI systems, we believe that Z-Inspection is particularly helpful because it combines the three main components of auditing processes: 1) functionality auditing; 2) code auditing; and 3) impact auditing.

About the use case


Objective(s):

Impacted stakeholders:

Target sector(s):

Country of origin: