AI in finance: Balancing innovation and stability

AI has already transformed financial markets. But how does it affect the way financial regulators balance innovation with compliance? OECD analysis maps and examines the different approaches to regulating AI in finance across 49 jurisdictions worldwide.

AI’s growing role in finance

Artificial intelligence has emerged as a transformative force across many activities in today’s rapidly evolving digital finance landscape. Integrating AI into the financial system promises unprecedented efficiency gains and an improved customer experience in areas ranging from banking and consumer finance to asset management, trading and insurance.

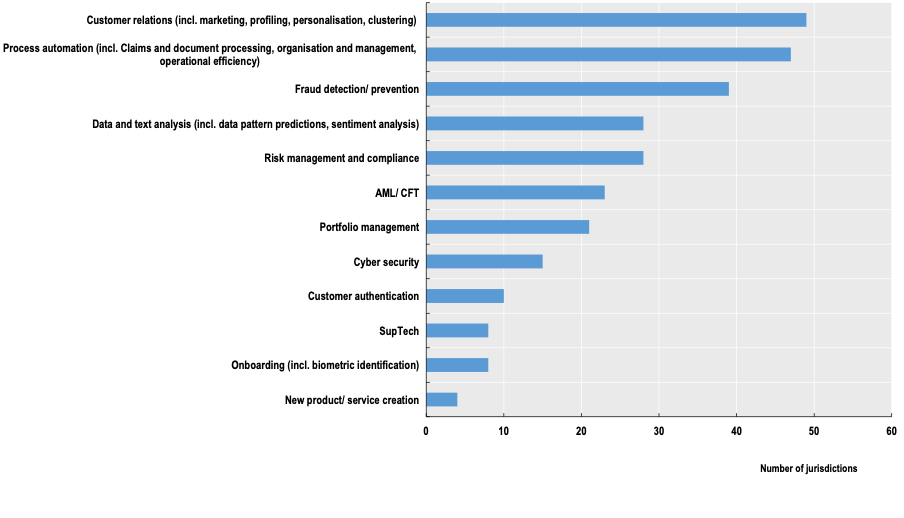

Examples of use cases and tasks involving AI in finance

Source: OECD (2024), “Regulatory approaches to Artificial Intelligence in finance”, OECD Artificial Intelligence Papers, No. 24, OECD Publishing, Paris, https://doi.org/10.1787/f1498c02-en.

The OECD-FSB Roundtable on AI in Finance, held in May 2024, provided an opportunity to hear from market participants about the extent to which they now use AI technologies and the benefits they see in areas such as risk modelling, trading, fraud detection, and financial crime prevention, including sanctions screening. At present, generative AI is not widely used, at least by regulated financial institutions; where it is used, it mainly serves to improve operational efficiency in back-office functions or remains at the experimentation stage.

However, like other forms of digital financial innovation, AI could create or amplify risks that warrant close monitoring and assessment by policymakers. These include risks to market integrity, consumer and investor protection, and financial stability.

Regulating AI: Is finance special?

Given the complexities of using AI in finance, it is essential to understand how policymakers are approaching the issue and what this implies for the future of finance. The recent OECD report “Regulatory approaches to Artificial Intelligence in finance” provides a comprehensive overview of AI regulation in finance across 49 OECD and non-OECD jurisdictions.

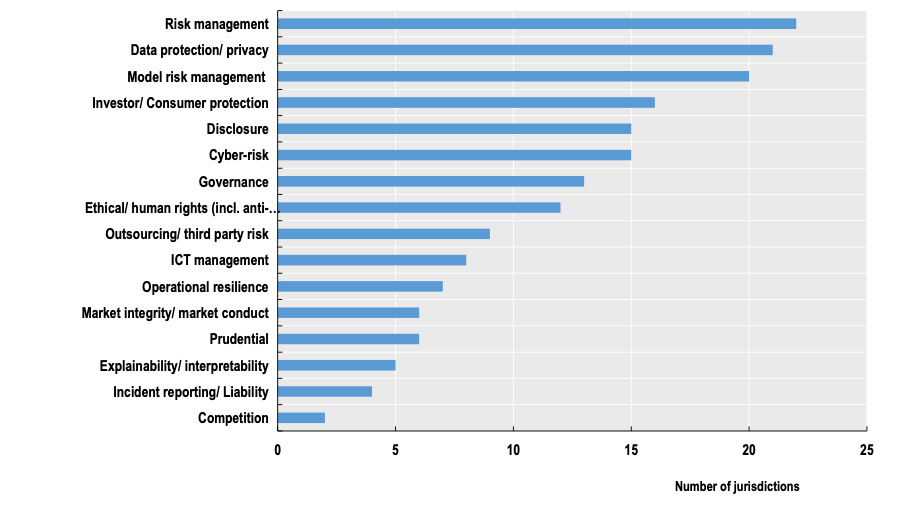

The vast majority of respondent jurisdictions have regulations that apply to the use of AI in finance. Since financial regulation follows a technology-neutral principle, existing laws, rules, and guidance remain applicable regardless of the technology. This includes laws and regulations on prudent business practices, consumer and investor protection, cybersecurity, and operational resilience, among other areas (Figure 1). Advances in technology do not render existing safety and soundness standards or compliance requirements obsolete.

General frameworks for risk management, especially model risk management, are prime examples of existing rules that may apply to AI-based models in finance. This was, in fact, the most common answer in a survey conducted for the study. This is hardly surprising, considering that Machine Learning (ML) models have been used in finance for decades.

Examples of areas covered by existing financial sector rules

Most jurisdictions surveyed have introduced some form of policy that covers AI in parts of finance, although these policies differ in nature. Some jurisdictions have introduced, or are in the process of introducing, AI legislation, such as the AI Act in the EU and laws in Brazil, Chile, and Colombia. This legislation tends to be cross-sectoral and, in the case of the EU AI Act, contains explicit provisions covering only part of the financial sector.

More than a dozen jurisdictions have non-binding policy guidance, such as principles, national strategies and white papers, which either specifically target financial activities or apply across sectors, including finance. Notably, most jurisdictions do not plan to introduce new regulations for AI in finance in the near term.

These approaches are not mutually exclusive. Introducing explicit new policies for using AI in finance does not negate the applicability of existing rules. When AI is used in areas covered by existing rules or guidance, these rules or guidance should generally apply, whether the decision is made by AI (with or without human intervention), traditional models, or humans. In jurisdictions with binding AI legislation that covers finance, existing sector-specific regulations continue to apply even though they do not explicitly reference AI.

The principle of proportionality also informs countries’ approaches to AI rules in finance and is embedded in their legal and regulatory frameworks. Proportionality allows compliance with rules to be assessed in a manner commensurate with the risk profile and systemic importance of different financial sector participants. It stems from the need to limit public intervention to what is necessary to achieve the desired policy objectives.

Ensuring AI rules remain fit for purpose

Most respondents to the OECD survey did not identify gaps in their current regulatory and supervisory frameworks applicable to AI in finance. That said, there is consensus that this area requires continuous monitoring and assessment to ensure these frameworks remain fit for purpose.

Most respondent jurisdictions recognise the need to better assess whether existing rules that apply to AI in finance should be expanded to make its deployment safer and more responsible. Given AI’s complexity and the speed of innovation, this need is expected to become more urgent.

This process will benefit from international co-operation and engagement of regulators and supervisors with industry, as advocated by the OECD AI Principles for developing and implementing AI policies.

At the implementation level, several countries recognise a potential need for more support to assist entities in their compliance efforts. This is increasingly important given the novel issues raised by deploying more advanced AI tools in finance. For instance, such support could be particularly beneficial where gaps are identified in how existing rules mitigate risks, or where re-interpreting existing rules or guidance would help achieve policy objectives. While only a few respondent jurisdictions have issued such clarifications, all acknowledge the benefits of providing this guidance.

Harnessing AI’s full potential in finance

Most respondent jurisdictions routinely review existing policy frameworks to ensure their effectiveness. This process can help them identify potential incompatibilities between the various policies that bear on the use of AI in finance (e.g., data regulation) and ensure regulatory alignment across relevant policy areas.

Policymakers in financial markets have extensive experience balancing productive innovation with a secure and vibrant financial system. AI innovation is no different. It is vital that they continue to closely monitor AI use in finance, including by evaluating current policy frameworks, so that regulation does not become a hurdle to responsible and productive AI innovation in finance while still providing effective oversight and risk mitigation.

As AI continues to advance rapidly, policymakers, financial institutions, and technology providers must collaborate to establish a robust and resilient policy framework that harnesses AI’s potential, promotes innovation, builds trust, and enables the full realisation of productivity gains.

Read more in “Regulatory approaches to Artificial Intelligence in finance”, OECD, 2024. Available at: https://www.oecd.org/en/publications/regulatory-approaches-to-artificial-intelligence-in-finance_f1498c02-en.html