How anticipatory governance can lead to AI policies that stand the test of time

Artificial intelligence is a transformative force, reshaping economies, societies, and geopolitics in real time. Governments and industries are pouring resources into advancing AI, seeking economic rewards and strategic advantages. However, this surge of innovation raises a pressing question: how can AI governance keep pace with the technology, pave the way for its benefits, and mitigate its risks?

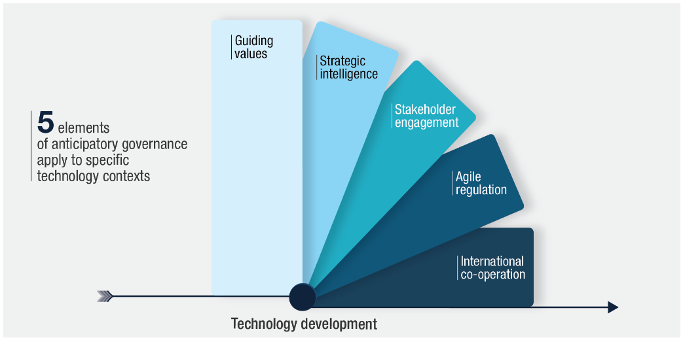

The recent Steering AI’s Future report offers valuable lessons for effective AI governance, focusing on five key elements from the Framework for Anticipatory Governance of Emerging Technologies: guiding values, strategic intelligence, stakeholder engagement, agile regulation, and international co-operation.

Figure 1. Five elements of emerging technology governance

First, identify shared values for AI governance and keep them in focus as you develop both technology and policy. For instance, prioritise fairness, transparency, and responsible use, and reject practices that infringe on privacy, promote bias, or violate human rights. The OECD AI Principles, the first intergovernmental standard on AI, provide a flexible framework for policy development. First agreed in 2019 and updated in 2024, these Principles serve as a global reference point for trustworthy AI.

Second, to better envision AI’s future, gather insights from what we can observe now. Monitoring exercises can pick up weak signals of what lies ahead, whether they point to opportunities to seize or negative effects to mitigate. Tracking AI incidents and hazards, as the OECD AI Incidents and Hazards Monitor does, can help establish a collective understanding of risk patterns and their complexities, enabling us to anticipate and prevent future issues more effectively.

Exploring the medium- and long-term implications of AI is also valuable for shaping its governance. OECD evidence reveals a range of potential benefits and risks to consider. Early analysis indicates that AI is streamlining processes and enhancing productivity, particularly in data-intensive industries such as finance and logistics. AI is already accelerating scientific progress in areas such as drug discovery, climate modelling, and materials science. Additionally, AI can empower citizens and civil society and contribute substantially to poverty alleviation.

Third, create space for meaningful dialogue. Involve stakeholders while they can still influence what happens next, whether during the innovation design stage or once an AI system is in use. Engagement can take many forms: educating the public through blog posts like this one, gathering diverse perspectives from tech experts to schoolteachers, or collaborating with governments and specialists to co-create solutions. Whatever the form, varied and well-informed engagement fosters better policy.

Fourth, pursue agile regulation: approaches that enable innovation while providing appropriate safeguards. So-called “sandboxes” and other controlled regulatory environments, when carefully designed, can give AI firms a temporary legal space to test cutting-edge ideas while showing regulators where rules need to adapt next. Tailoring established tools can help simplify compliance across borders and feed insights from firms’ practical experience into regulation. Examples include the OECD Guidelines for Multinational Enterprises on Responsible Business Conduct and the new reporting framework under the Hiroshima AI Process Code of Conduct.

Finally, because AI’s development, use, and impacts cut across borders and sectors, its governance requires collaboration among policymakers and other stakeholders. A one-size-fits-all approach will never suffice for AI governance. However, efforts to harness the benefits of AI and mitigate its risks will only succeed if diverse initiatives work in harmony rather than competing with or contradicting each other. International co-operation, as seen in the partnership between the OECD and the Global Partnership on AI (GPAI), is therefore essential for effective governance.

Steering artificial intelligence policy toward a trustworthy future is a worthwhile goal in its own right. Getting it right matters for everyone who will use AI, benefit from it, or be affected by it. Guiding values, strategic intelligence, stakeholder engagement, agile regulation, and international co-operation together offer the means to navigate this evolving landscape.