Responsible AI licenses: a practical tool for implementing the OECD Principles for Trustworthy AI

Recent socio-ethical concerns about the development, use, and commercialization of AI-related products and services have led to the emergence of a new type of license devoted to promoting the responsible use of AI systems: Responsible AI Licenses, or RAILs.

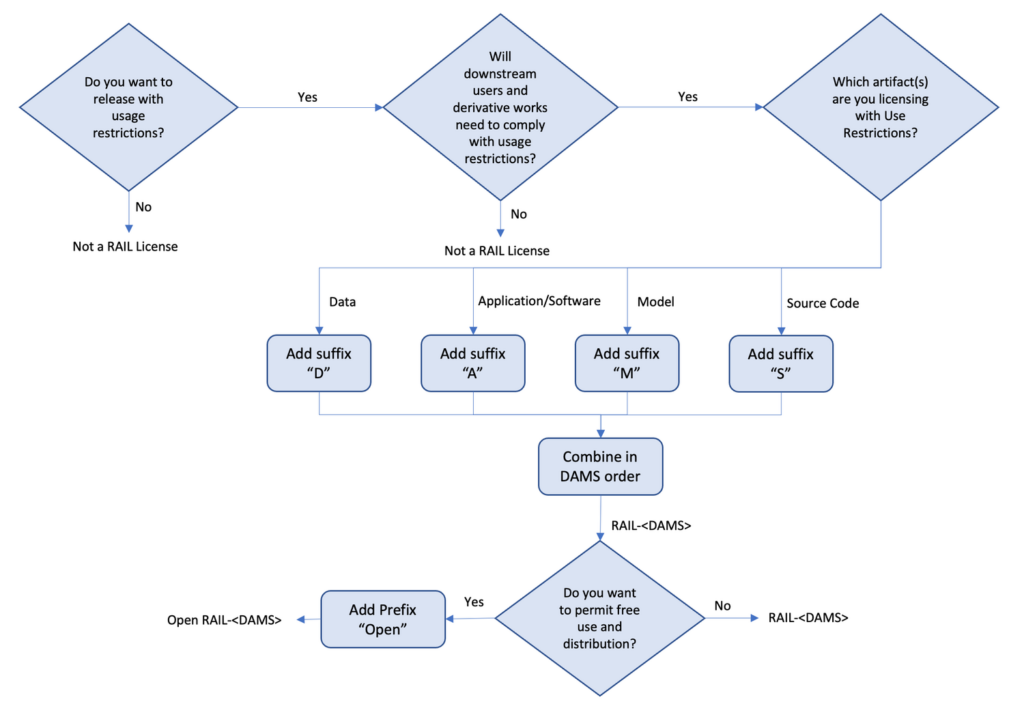

RAILs are AI-specific licenses that restrict how the licensee can use the licensed AI feature, reflecting the licensor’s concerns about that feature’s technical capabilities and limitations. Both existing types of these licenses, RAILs and OpenRAILs, take this approach. A RAIL can be used for ML models, source code, applications and services, and data. When a license also allows free access and flexible downstream distribution of the licensed AI feature, it is an OpenRAIL.

The RAIL Initiative was created in 2019 to encourage the industry to adopt use restrictions in licenses as a way to mitigate the risks of misuse and potential harm caused by AI systems.

The case of BLOOM

One example of this is BigScience, a one-year research initiative led by Hugging Face in 2021 with the support of many actors, including the French research institutions GENCI and CNRS. The initiative aimed to create the first open, collaboratively built multilingual (46 languages) Large Language Model (LLM) developed on a transparent basis. The fruit of the research, BLOOM, was released on 12 July 2022.

BLOOM does not use an open source license, but rather a specific license that promotes both open access and responsible use of the ML model: an OpenRAIL license. Later, the BLOOM license was adapted into a general license for use with any ML model. As such, the AI community can freely use BigScience OpenRAIL-M as a far-reaching licensing tool.

Actors at different levels are using Responsible AI Licenses (RAILs)

All of this took place while major players in the AI space released their own major models. Meta released its LLM OPT-175B, chatbot BB3, and vision model SEER, all under a responsible AI license for research purposes. Stable Diffusion by Stability AI is the latest major ML release that uses an OpenRAIL license. It is a multimodal generative model and went viral in a matter of weeks. BigCode, an open collaborative project stemming from BigScience and led by Hugging Face and ServiceNow, is currently working on a new OpenRAIL for an LLM trained for code generation.

With all of these actors working in the RAIL space, there is good reason to believe that open innovation and responsible innovation in the AI industry are not mutually exclusive.

RAILs respond to the OECD AI Principles and ongoing regulatory proposals

These licenses are in line with ongoing AI regulatory proposals, namely the EU AI Act. In the long run, having responsibility ingrained in the development, release, and use of AI could reduce many inequalities, socio-economic and gender inequalities being just two examples. To this end, RAILs and OpenRAILs add value by deterring the use of AI in critical and harmful areas, informed by the technical capabilities and limitations of the AI system.

Responsible restrictions move down the value chain

RAILs have been designed with a “value chain” approach because they require use restrictions to be included in the downstream uses of licensed AI features and their derivatives. Looking at the BLOOM LLM as an example, a company may want to use the BigScience BLOOM model to develop a commercial chatbot. To do so, the company accesses the model, modifies it, and finetunes it to be the chatbot app’s technical backbone. Each of these actions will be governed by the OpenRAIL license. According to the terms defined in the OpenRAIL, the chatbot is then considered a derivative of the original model, and the use of the chatbot will be governed by the use-based restrictions defined in the OpenRAIL license. This means that when commercializing the chatbot with a commercial license or any other legal agreement, these use restrictions will have to be part of the new license, effectively sending the use restrictions down the value chain and spreading the responsible AI culture.

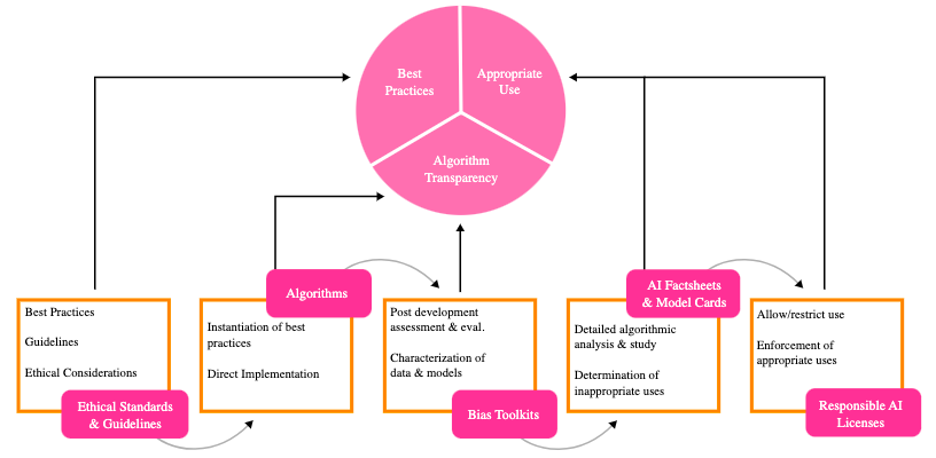
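The value-chain mechanism described above can be sketched in a few lines of Python. This is a minimal illustration, not legal logic and not part of any RAIL tooling: the `License` and `Artifact` classes, the `derive` function, and the restriction labels are all hypothetical names invented for this example, and the labels are placeholders rather than quotations from the BigScience OpenRAIL-M text.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class License:
    """Hypothetical, simplified stand-in for a license with use-based restrictions."""
    name: str
    use_restrictions: frozenset


@dataclass
class Artifact:
    """A licensed AI feature (model, app, ...) and the artifact it derives from, if any."""
    name: str
    license: License
    parent: Optional["Artifact"] = None


def derive(parent: Artifact, name: str, new_license: License) -> Artifact:
    """Create a derivative; refuse if the new license drops a restriction of the parent's license."""
    missing = parent.license.use_restrictions - new_license.use_restrictions
    if missing:
        raise ValueError(f"{new_license.name} must also include: {sorted(missing)}")
    return Artifact(name=name, license=new_license, parent=parent)


# Illustrative placeholder restrictions, not the actual license wording.
openrail = License(
    "OpenRAIL-M (illustrative)",
    frozenset({"no-undisclosed-generated-content", "no-unlawful-use"}),
)
bloom = Artifact("BLOOM", openrail)

# The commercial license may add its own terms, but must keep the upstream use restrictions.
commercial = License("Chatbot commercial license", openrail.use_restrictions | {"no-resale"})
chatbot = derive(bloom, "chatbot", commercial)
```

The design choice worth noting is that the check is a superset test: the downstream license is free to be stricter or to add unrelated commercial terms, but a license that omits any upstream use restriction is rejected, which is the “at a minimum” behaviour the paragraph describes.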

RAILs would have even greater value if combined with other complementary tools for responsible AI available in the AI governance ecosystem, such as model cards. Model cards may inform the drafting and choice of a RAIL. Depending on the capabilities and limitations of the model documented in the model card, the license will include specific use restrictions. The restrictions are designed to prevent the model from being used for the tasks and scenarios that the model card identifies as problematic.
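As a rough sketch of how a model card could feed into the choice of restrictions, the snippet below maps documented limitations to candidate use restrictions. Everything in it is an assumption made for illustration: the mapping table, the limitation strings, and the function `restrictions_from_model_card` are hypothetical and are not drawn from any real model card or license.

```python
# Hypothetical mapping from limitations documented in a model card to
# use restrictions a licensor might consider; not taken from a real license.
RESTRICTION_FOR_LIMITATION = {
    "may produce factually incorrect text":
        "no use for medical or legal advice without human review",
    "output is machine-generated":
        "no generated content without disclosing that it is machine-generated",
}


def restrictions_from_model_card(limitations):
    """Return the candidate use restrictions for the limitations that have a known mapping."""
    return [
        RESTRICTION_FOR_LIMITATION[item]
        for item in limitations
        if item in RESTRICTION_FOR_LIMITATION
    ]


candidate = restrictions_from_model_card(
    ["output is machine-generated", "limited coverage of low-resource languages"]
)
```

Limitations without an entry in the table are simply skipped here; in practice, deciding whether a limitation calls for a restriction is a drafting judgment, not a lookup.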

How BigScience OpenRAIL-M stands up to the OECD AI Principles

To make this concrete, the table below maps the BigScience OpenRAIL-M license to the OECD AI Principles. It shows how an existing, already implemented AI governance tool such as an OpenRAIL articulates with widely accepted international AI principles.

| OECD AI Principles | BigScience OpenRAIL-M |
| --- | --- |
| Principle 1.1: Inclusive growth, sustainable development and well-being. “Stakeholders should proactively engage in responsible stewardship of trustworthy AI in pursuit of beneficial outcomes for people and the planet, such as (…) reducing economic, social, gender and other inequalities”. | The BigScience license is the result of the community’s concerns about openly releasing AI features through existing open licenses. The license was designed to reduce potential harmful uses of AI features which could lead to undesired outcomes. OpenRAILs help to reduce the misuse of AI applications. |
| Principle 1.2: Human-centred values and fairness. “AI actors should respect the rule of law, human rights and democratic values, throughout the AI system lifecycle. (…) To this end, AI actors should implement mechanisms and safeguards, such as the capacity for human determination, that are appropriate to the context and consistent with the state of art.” | The license can be conceived as a mechanism that can be implemented by the AI community and is appropriate for the context of open sharing, consistent with the capabilities and limitations of AI. Use restrictions on the use of the licensed AI feature are conducive to respect for human rights throughout an AI system’s lifecycle. |
| Principle 1.3: Transparency and explainability. “to foster a general understanding of AI systems; to make stakeholders aware of their interactions with AI systems (…)”. | The license promotes transparency by prohibiting the automated generation of content without explicit disclaimers to that effect (aligned with the AI Act transparency provision). The license is meant to complement the model card by informing users of the ML model’s capabilities and limitations. |
| Principle 1.4: Robustness, security and safety. “AI actors should ensure traceability, including in relation to (…) decisions made during the AI system lifecycle”. | The license ensures that any redistribution or derivatives will include, at a minimum, the same behavioural-use restrictions as the original license. In this way, the licensor ensures a default decentralized control via copyleft-style downstream restriction clauses. |
| Principle 1.5: Accountability. “AI actors should be accountable for the proper functioning of AI systems and for the respect of the above principles, based on their roles, the context, and consistent with the state of art.” | The licensor must stand for respectful and responsible use of the licensed AI feature. The license states that the output generated belongs to the user who is accountable for its use. The licensor has the right to restrict the model’s use in case of violation of the license. |

RAILs as effective AI policy tools

This assessment against the OECD AI Principles suggests that policy makers could do more to integrate RAILs and OpenRAILs into their AI policy strategies and public procurement initiatives.

| OECD.AI Policy Recommendations | Responsible AI Licenses |
| --- | --- |
| Principle 2.1: Investing in AI research and development. “Governments should consider long-term public investment, and encourage private investment, in research and development, including inter-disciplinary efforts, to spur innovation in trustworthy AI that focuses on challenging technical issues and on AI-related social, legal and ethical implications and policy issues.” | The presence of RAILs in public research frameworks raises government awareness about their potential. BigScience could be used as a proxy. International cooperation aimed at proposing solutions for the open sharing and responsible use of AI has the potential to be used in the near term for things like public procurement. |
| Principle 2.2: Fostering a digital ecosystem for AI. “(…) governments should consider promoting mechanisms (…) to support the safe, fair, legal and ethical sharing of data.” | RAILs have the potential to promote ethical data sharing by specifying permitted uses aligned with the data steward’s values. |
| Principle 2.3: Shaping an enabling policy environment for AI. “Governments should promote a policy environment that supports an agile transition from the research and development stage to the deployment and operation stage for trustworthy AI systems. (…) Governments should review and adapt, as appropriate, their policy and regulatory frameworks and assessment mechanisms as they apply to AI systems to encourage innovation and competition for trustworthy AI.” | OpenRAILs promote open access and responsible innovation while fostering competition via quality, innovation, and product differentiation. |
| Principle 2.4: Building human capacity and preparing for labour market transformation. “Governments should also work closely with stakeholders to promote the responsible use of AI (…) and aim to ensure that the benefits from AI are broadly and fairly shared.” | Governments could explore the use of new licensing frameworks via policy reports and guidelines to protect the responsible use of specific AI systems, especially in light of upcoming AI regulations (EU AI Act). |
| Principle 2.5: International co-operation for trustworthy AI. “Governments (…) should actively cooperate to advance these principles and to progress on responsible stewardship of trustworthy AI; Governments should work together in the OECD and other global and regional fora to foster the sharing of AI knowledge, as appropriate. They should encourage international, cross-sectoral and open multi-stakeholder initiatives to garner long-term expertise on AI.” | Both BigScience and the RAIL Initiative are international, cross-sectoral, and open multi-stakeholder initiatives focused on garnering long-term expertise in AI. Governments are welcome to engage with them, but also to promote them. |

RAILs are designed as a complementary tool for the ongoing challenges that AI policy and regulatory efforts tackle on the international stage. They can be a practical way to put the OECD AI Principles and policy recommendations into practice. The OECD report documenting ongoing national AI policy strategies also suggests that Responsible AI Licenses are a pertinent and efficient governance instrument for any national AI policy strategy. RAILs have the potential to become a basic instrument for governing economic interactions in the AI value chain. They could also promote AI innovation, help to establish a responsible sharing culture in the near term, and benefit society at large.