Six crucial policy considerations for AI, data governance and privacy: Insights from the OECD

The rapid evolution of artificial intelligence (AI) presents unprecedented opportunities and significant challenges, particularly in data governance and privacy.

A new report by the OECD delves into these complexities, including the implications of generative AI, which is driven by vast amounts of training data that often include personal data. Written with contributions from two working groups with representatives from OECD and partner economies, and from an expert group of representatives from industry, regulators, civil society, and academia, the report highlights the need for global coordination to address these challenges.

Here are six crucial policy considerations for harmonising AI's advancements with privacy principles, and how they relate to the report's main takeaways.

1. Privacy and AI

While privacy frameworks have always applied to AI, they play an increasingly important role in the age of generative AI. A growing number of actions by regulators and courts remind us that privacy must be considered from the moment an AI system is conceived and throughout its development and deployment.

Challenges abound. For instance, informing people in advance about how their data might be used can be impossible. AI systems trained on unbalanced datasets may also produce inaccurate or biased outputs. Given the nature of AI systems, individuals may struggle to access, correct or delete their personal data, and organisations may find such requests difficult to fulfil. As a result, people may suffer privacy harms, and AI developers or users may breach privacy laws.

Given these challenges, ensuring that AI developments align with privacy rules from the outset and by design requires proactive efforts to identify common ground and bridge gaps. The report therefore emphasises the need to build bridges between the AI and privacy policy communities. This integration should ensure that privacy safeguards are embedded into AI systems throughout their lifecycle, promoting privacy-respecting innovation.

2. Mutual understanding and collaboration between policy communities

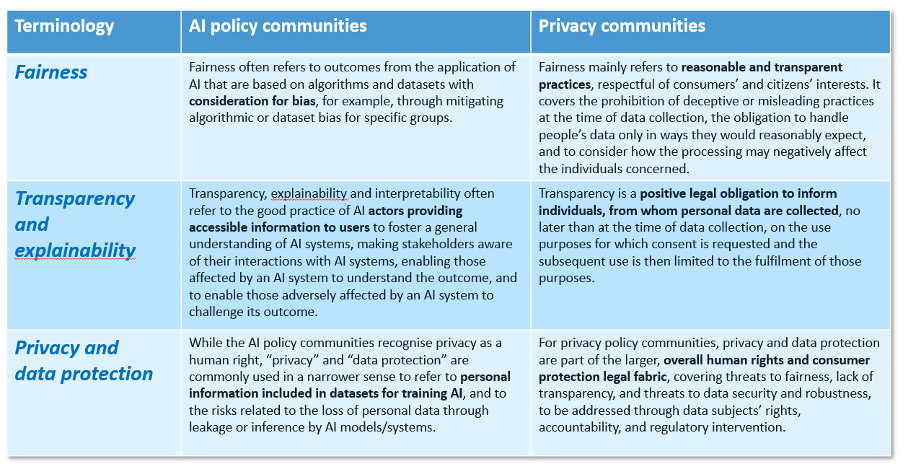

Terminology and conceptual differences between the privacy and AI policy communities can cause misunderstandings and leave AI developers and deployers uncertain about how to comply with existing privacy and AI regulations.

For instance, key concepts such as fairness, transparency, and explainability may carry different meanings in the AI and privacy policy communities, depending on the context. A key contribution of the report is that it not only acknowledges these differing interpretations of essential concepts but also analyses the differences and discusses how the concepts are interrelated.

The report highlights the importance of sustained interaction between these communities to foster awareness and improve mutual understanding. Such exchanges can help align their approaches and support the development of AI that embeds privacy considerations from the outset.

In practice, however, the AI and privacy policy communities still often operate in silos, which can lead to divergent approaches across policy streams, jurisdictions, and legal systems. The report thus calls for collaboration that draws on each community's knowledge and experience to align policy responses.

3. Fairness

Ensuring that AI systems process personal data fairly and produce fair outcomes is central to both AI and privacy principles. The report discusses principles such as collection limitation, purpose specification, use limitation, openness, and data quality as fundamental to achieving fairness.

4. Transparency and explainability

Transparency is essential to inform individuals about how their personal data is used in AI systems, and it is also a prerequisite for processing personal data on the basis of informed consent. Transparency builds trust and should enable users to make informed decisions about their data. The report points to practical initiatives, such as model cards, that enhance AI interpretability by helping ensure that the information provided is meaningful and that AI outcomes are understandable.

5. Accountability

Integrating AI and privacy risk management frameworks is crucial for addressing the risks that arise when designing or adopting AI systems. The accountability mechanisms developed in each of the two communities can help ensure that AI systems comply with ethical standards and with data protection laws across jurisdictions. In addition, well-established privacy management programmes can help identify, prevent and mitigate potential discrimination by AI systems.

Note: These tables represent preliminary analysis and are not exhaustive.

6. Global synergies and cooperation

AI’s global reach necessitates international regulatory cooperation to address privacy challenges effectively. The report underscores the need for global synchronisation, clear guidance, and cooperative efforts to tackle AI’s impact on privacy. Indeed, cooperation can help prevent regulatory fragmentation and ensure consistent application of privacy principles across jurisdictions.

To that end, the report provides a snapshot of national and regional developments in AI and privacy, indicating that while significant steps have been taken, more coordination is needed. Among other domains, the report identifies Privacy Enhancing Technologies (PETs) as a promising area of cooperation for addressing data governance and privacy concerns in AI. While not foolproof, PETs can help bridge the gap between developing safe AI models and protecting individuals' privacy rights.

The OECD can play a pivotal role in fostering coordination, drawing on its extensive substantive expertise and established policy work in AI, data protection, and privacy.

Integrating AI and privacy

The challenges at the intersection of AI, data governance and privacy are significant, and the strong interest in the new Expert Group on AI, Data and Privacy shows that balancing AI innovation with privacy is a global concern.

With its extensive experience in the areas of privacy and AI, the OECD is ready to help stakeholders develop AI systems that respect and protect privacy, thus ensuring that technological advancements benefit society while protecting individual rights.

The authors would like to acknowledge the contribution of Sergi Gálvez Duran, Celine Caira, Sarah Berube, Andras Molnar, Limor Shmerling Magazanik and other members of the OECD Secretariat, in the writing of this blog post.