What are the tools for implementing trustworthy AI? A comparative framework and database

The OECD Network of Experts Working Group on Implementing Trustworthy AI is helping move “from principle to practice” through the development of a framework to evaluate different approaches and a database of use cases. The framework helps AI practitioners determine which tool fits their use case and how well it supports the OECD AI Principles for trustworthy AI. As users apply the framework, their submissions will form a live database with interactive features and information on the latest tools.

>> READ THE REPORT: Tools for trustworthy AI: A framework to compare implementation tools for trustworthy AI systems

The OECD’s work to establish sound principles for trustworthy AI

With the proliferation of AI systems in so many aspects of our lives, bringing both great promise and many valid concerns, it is easy to understand why trust in AI is such a topical issue. It is widely accepted that trust in AI depends on AI systems being trustworthy. This raises the following question: what characteristics should an AI system have in order to be trustworthy? That, in effect, is what the OECD AI Principles provide.

At the OECD, policy makers and other stakeholders have been working hard on defining trustworthy AI since 2017. The resulting OECD AI Principles, adopted in 2019, consider an AI system “trustworthy” if it promotes inclusive growth, human-centred values, transparency, safety and security, and accountability.

The next challenge lies in putting these principles into practice, that is, in determining whether an AI system actually promotes inclusive growth and the other values listed above. The OECD Network of Experts on AI (ONE AI) Working Group on Implementing Trustworthy AI has been developing a framework of tools for trustworthy AI to serve as guidance for AI developers and other stakeholders. What follows is a look at our process and where things currently stand.

From principles to practice: Tools to achieve trustworthy AI

Tools already exist to help AI actors rise to the challenge of building and deploying trustworthy AI. These tools vary significantly: they include software programmes that detect bias, procedural guidelines for ensuring an AI system is used properly, and educational initiatives for AI implementers.

However, these tools can be hard to find and are often absent from the ongoing discussions about international policy. A space where AI actors can share their experiences, along with approaches, mechanisms and practices for implementing trustworthy AI, would help close that gap. This information should be brought together under a common framework to ensure that the collected data are comparable and easily accessible. The Working Group set out to create such a space.

First, we asked questions

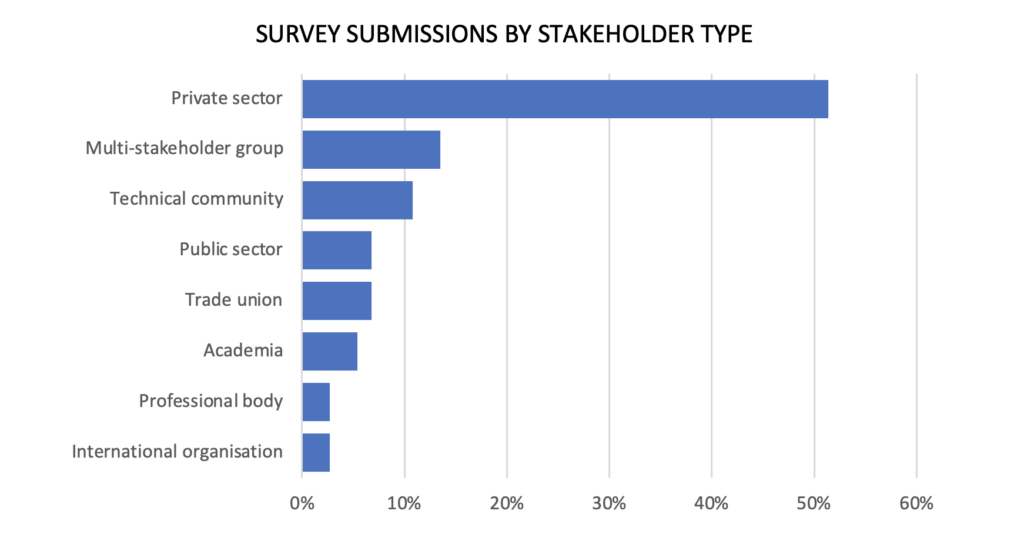

We started by doing research. The first step was to conduct a survey of relevant AI actors to see what they are doing to design, build and operate trustworthy AI systems, as embodied by the OECD AI Principles. In conducting this survey, we hoped to create a snapshot of who is doing what, what’s working, and perhaps more importantly, where the gaps lie.

Zooming in on technical, procedural and educational tools

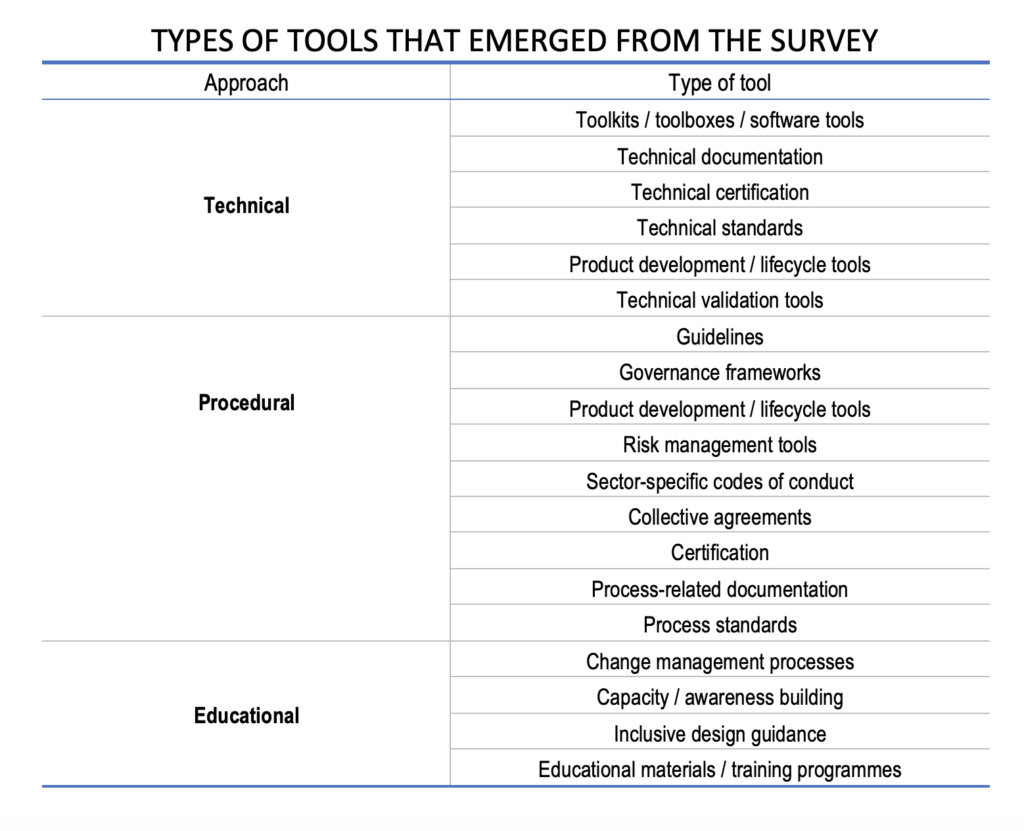

The experts in our working group analysed over 75 survey responses. Rather quickly we noticed that the variety of AI applications among respondents made it difficult to establish valid comparisons. Some initiatives were made up of tools or frameworks that we could share with others to help them implement the AI Principles, such as toolkits to check for biases or robustness in an AI system, risk management guidelines or educational material. Others focused on use case scenarios for achieving specific goals like fraud prevention or disease detection. Still other submissions focused on raising awareness about documents or reports on AI-related issues, with little information pertaining to practical solutions.

Equipped with the results, we decided to focus our efforts on analysing the tools that we could share with other actors to help them make their own AI systems trustworthy. First, we organised these tools into three categories:

- Technical: These aim to address the technical aspects of issues such as bias detection, transparency, explainability, performance, robustness, safety and cybersecurity.

- Procedural: These provide operational or implementational guidance for AI actors, in areas such as governance of AI systems, risk management frameworks, product development and lifecycle management.

- Educational: These encompass mechanisms to build awareness, inform, prepare or upskill stakeholders involved in or affected by the implementation of an AI system.

A framework for comparing trustworthy AI tools

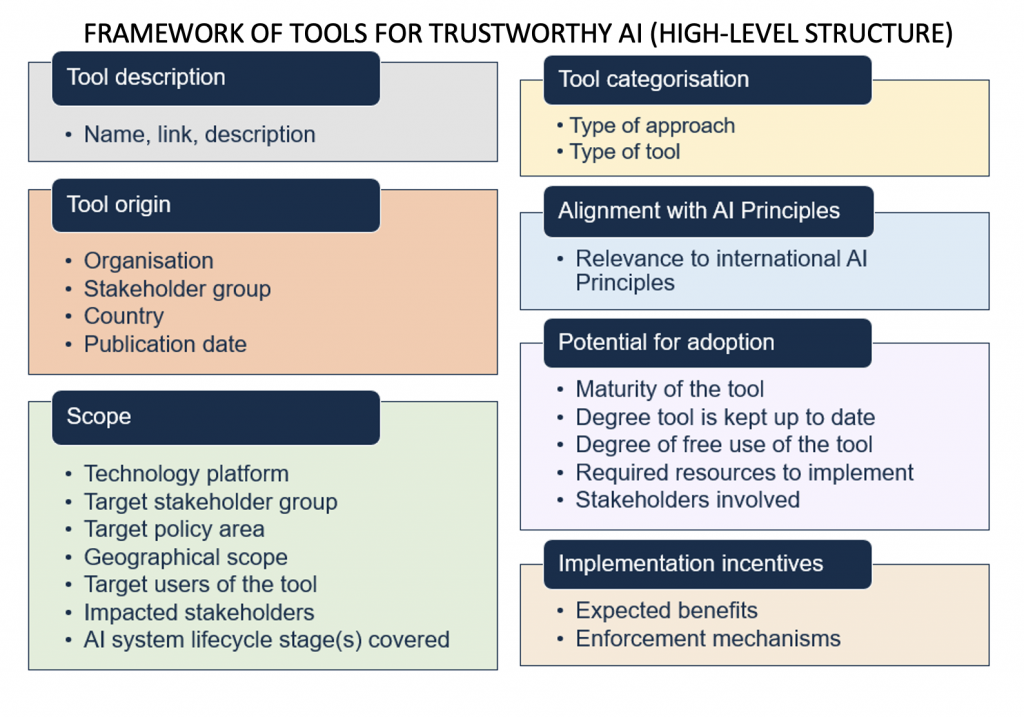

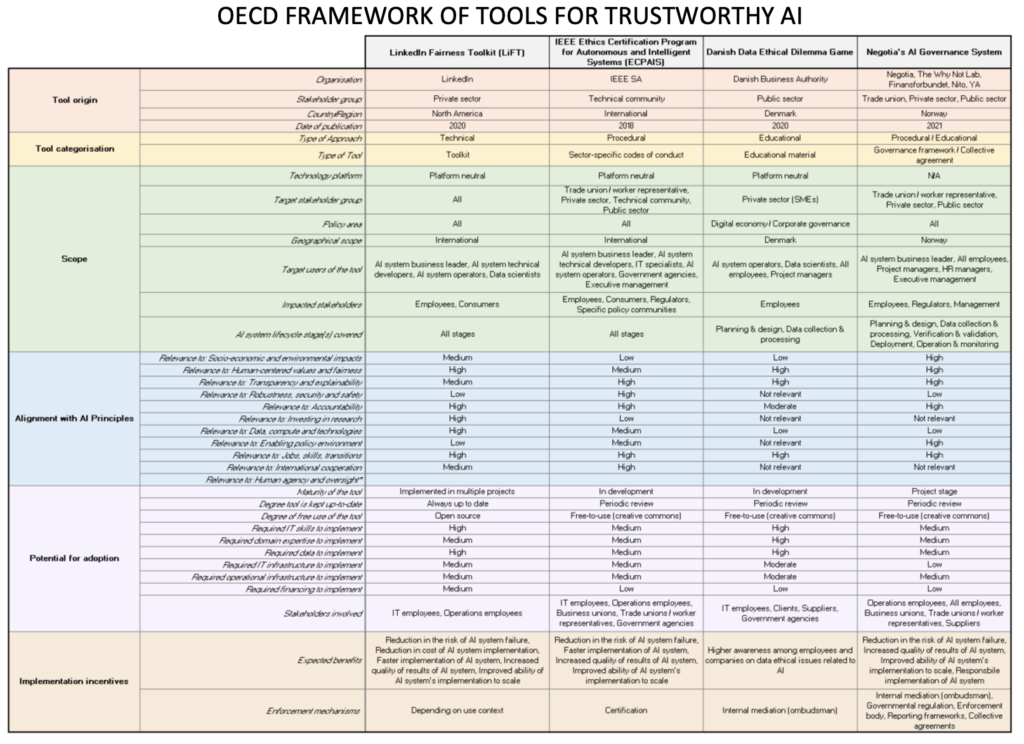

To facilitate comparison, we put together a framework for structuring information about the tools. It lists basic details and underlying characteristics, organised around seven key dimensions:

- Description: This includes the name, background information and hyperlinks to additional information.

- Origin: This covers the organisation, stakeholder group and geographical region from which the tool originates, as well as the first date of publication.

- Categorisation: This information covers the type of approach and the type of tool.

- Scope: This covers platform specificity; target users, policy areas and stakeholder groups; geographical scope; impacted stakeholders; and the AI system lifecycle stage(s) covered.

- Alignment with international AI Principles: This covers the tool’s relevance to the OECD AI Principles and the European Commission’s key requirements for trustworthy AI.

- Adoption potential: Here we list the maturity of the tool/approach, the degree to which it is kept up to date, the resources and legal conditions required to use it, and the stakeholders who need to be involved in using it.

- Implementation incentives: Here, we enumerate the benefits users can expect to achieve from using the tool/approach and possible enforcement mechanisms that may facilitate the implementation.
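For readers who think in data structures, the seven dimensions above can be pictured as the fields of a single record per tool. The following is only a minimal sketch under stated assumptions: the class names, field names, sample entries and the `find_tools` helper are all hypothetical illustrations, not the schema of the OECD framework or of the database, and each dimension is reduced to a few representative fields.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Set


class ToolType(Enum):
    """The three tool categories described in this post."""
    TECHNICAL = "technical"
    PROCEDURAL = "procedural"
    EDUCATIONAL = "educational"


@dataclass
class ToolRecord:
    """One tool entry; all field names are hypothetical, grouped by dimension."""
    # 1. Description
    name: str
    background: str = ""
    links: List[str] = field(default_factory=list)
    # 2. Origin
    organisation: str = ""
    stakeholder_group: str = ""
    region: str = ""
    first_published: Optional[str] = None
    # 3. Categorisation
    approach: str = ""
    tool_types: Set[ToolType] = field(default_factory=set)
    # 4. Scope
    target_users: List[str] = field(default_factory=list)
    lifecycle_stages: List[str] = field(default_factory=list)
    # 5. Alignment with international AI Principles
    oecd_principles: List[str] = field(default_factory=list)
    # 6. Adoption potential
    maturity: str = ""
    required_resources: str = ""
    # 7. Implementation incentives
    expected_benefits: List[str] = field(default_factory=list)


def find_tools(records, tool_type=None, principle=None):
    """Return records matching every filter that is given (None means no filter)."""
    return [
        r for r in records
        if (tool_type is None or tool_type in r.tool_types)
        and (principle is None or principle in r.oecd_principles)
    ]


# Made-up sample entries, for illustration only.
records = [
    ToolRecord(
        name="Hypothetical bias checker",
        tool_types={ToolType.TECHNICAL},
        oecd_principles=["transparency"],
    ),
    ToolRecord(
        name="Hypothetical workplace governance scheme",
        tool_types={ToolType.PROCEDURAL, ToolType.EDUCATIONAL},
        oecd_principles=["accountability"],
    ),
]

educational = find_tools(records, tool_type=ToolType.EDUCATIONAL)
print([r.name for r in educational])
```

Because every entry shares the same fields, tools of very different kinds can be filtered and compared along the same dimensions, which is the property the framework aims for. A tool may belong to more than one category, so `tool_types` is modelled here as a set.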

At this stage, the framework has undergone several iterations of expert testing and validation. It has been complemented with relevant research from OECD partner organisations like the Global Partnership on AI (GPAI), the Open Community for Ethics in Autonomous and Intelligent Systems (OCEANIS) and Arizona State University (ASU).

The framework should be especially useful for identifying tools according to AI system characteristics and contexts. While it is not designed to assess the quality or completeness of an individual tool, the framework does provide a means of identifying the types of tools that are best suited for specific contexts.

Applying the framework to real-life tools

To test the framework, we applied it to four tools. The test has helped to illustrate its effectiveness and comparative value. The tools were chosen to represent a variety of characteristics, objectives, stakeholder groups, and contexts. The first tool – the LinkedIn Fairness toolkit – is a technical tool from the private sector. It aims to minimise biases in AI systems, is open source and has been implemented in several projects.

The second tool is the Ethics Certification Programme for Autonomous and Intelligent Systems. It is procedural and comes from the Institute of Electrical and Electronics Engineers (IEEE), an actor in the technical community. The tool provides sector-specific codes of conduct to help mitigate the ethical risks of AI systems.

The third example is an educational tool that comes from the public sector via the Danish government. With a national focus, it uses gamification to create awareness amongst businesses of the challenges involved with developing responsible and ethical AI solutions.

The fourth and final example is a governance system with both procedural and educational elements, led by trade unions and backed by a multi-stakeholder coalition in Norway. Still in the project stage, this tool will address issues related to implementing AI systems in the workplace.

The examples illustrate how the framework can help gather and share tools, practices and approaches to implement trustworthy AI in a comparable and accessible manner.

Access the Excel file with a sample of the OECD Framework of tools for Trustworthy AI

An online database

As the framework is applied, the resulting submissions will form a live database, freely available on OECD.AI. It will include interactive features and provide information on the latest tools to help ensure that AI systems in different contexts abide by the OECD AI Principles.

We sincerely hope and believe that this database will help operationalise the principles and practices of trustworthy AI across the globe. We look forward to your feedback to make this database a practical and useful tool in your implementation of trustworthy AI. Please email us at ai@oecd.org.