Dewey Murdick's publications

Dewey Murdick's videos

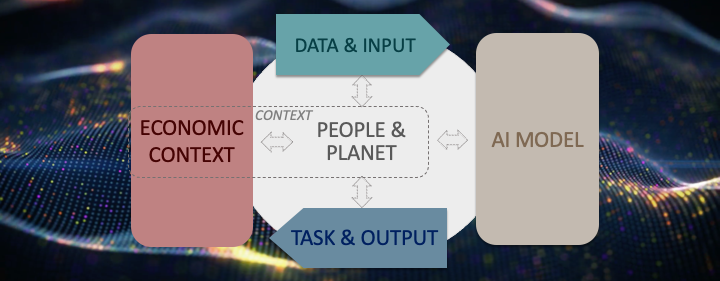

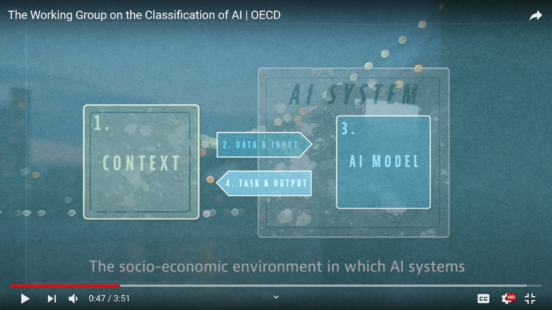

The OECD Framework for the Classification of AI Systems

Different types of AI systems present very different policy opportunities and challenges. As part of the AI-WIPS project, the OECD has developed a user-friendly framework to classify AI systems. The framework provides a structure for assessing and classifying AI systems according to their impact on public policy in areas covered by the OECD AI Principles.

The OECD AI Systems Classification Framework

The OECD’s Network of Experts on AI developed a user-friendly framework to classify AI systems. It provides a structure for assessing and classifying AI systems according to their impact on public policy, following the OECD AI Principles. This session discusses the four dimensions of the draft OECD AI Systems Classification Framework, illustrates the framework's usefulness with concrete AI systems as examples, and seeks feedback and comments to support finalisation of the framework. A classification framework for understanding labour market impacts will also be introduced.