Artificial intelligence in health: big opportunities, big risks

“How would you feel about your health provider relying on artificial intelligence for your medical care?”

This question was put to Americans in December 2022, just as ChatGPT was taking off; three in five said they would be uncomfortable. In the same study, around one-third of people believed that artificial intelligence would lead to better outcomes, slightly more than those who said it would lead to worse outcomes, while the rest said it would not make much difference.

The American public is right to be cautiously optimistic about using artificial intelligence in healthcare. Looking ahead, artificial intelligence holds significant opportunities and worrying risks. AI could improve health outcomes for individuals, free health professionals to focus on care and prevention, accelerate research and innovation, and provide better protection against future pandemics. However, AI may also widen inequities, cause harm, and jeopardise individual privacy.

To understand where we are and where we are going, it is often best to first look at the past.

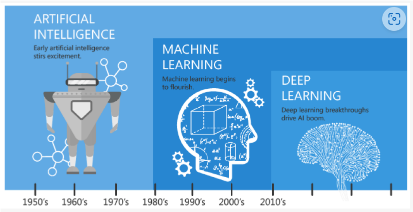

A brief history of artificial intelligence

The world is waking up to the opportunities and risks that come from using artificial intelligence. But artificial intelligence has been incubating for 80 years, starting with McCulloch and Pitts’s mathematical model of a neuron in 1943.

Over the next 40 years, progress was slow, until microprocessors turned computing power into a commodity and ‘machine learning’ took off. This paved the way for the first widely public display of artificial intelligence in 1997, when IBM’s Deep Blue defeated world chess champion Garry Kasparov. The next big shifts came when IBM’s Watson defeated Ken Jennings and Brad Rutter at Jeopardy! in 2011, and when DeepMind’s AlphaGo defeated Lee Sedol, one of the world’s best Go players, in 2016. These advances proved that machines could beat humans at well-defined tasks.

The last 10 years have seen advances in which artificial intelligence systems expand their own knowledge through deep learning. In 2017, AlphaGo Zero defeated AlphaGo and, in doing so, revealed successful Go strategies that humans had never devised in the thousands of years they have been playing the game. In 2021, Boston Dynamics taught a robot to do a backflip, demonstrating how deep learning can be used to teach a task that lacks well-defined rules.

Source: Lee D, Yoon SN. Application of Artificial Intelligence-Based Technologies in the Healthcare Industry: Opportunities and Challenges. International Journal of Environmental Research and Public Health. 2021; 18(1):271. https://doi.org/10.3390/ijerph18010271

While each of these advances was impressive and well covered in the media, it was the release of generative artificial intelligence technologies, specifically large language models such as OpenAI’s ChatGPT, Google’s PaLM, and Meta’s LLaMA, that caught the public’s attention. Like smartphones in the late 2000s, these tools make the promise and opportunity of AI tangible by putting the technology into everyone’s hands.

The public now realises that the promise of artificial intelligence is not science fiction. It is a modern reality. As that reality comes into focus, the public wants to ensure that this technology behaves like a benevolent companion, such as Jane from Orson Scott Card’s Ender’s Game series, rather than a malevolent villain, such as HAL 9000 from 2001: A Space Odyssey. Health is one area where the public is both concerned about the risks of artificial intelligence and optimistic about its benefits.

Artificial intelligence opportunities in health

Anecdotally, the number of news stories and publications about using artificial intelligence in health appears to be growing exponentially. In academia, this growth reflects both the many opportunities to use artificial intelligence in health and a growing capability to design, develop, deploy, and measure its impact.

There are many examples that can be placed into three groups:

- Health services management;

- Clinical decision-making; and

- Predictive medicine.

Health services management covers uses in which artificial intelligence helps health workers perform routine duties. AI may help capture and code clinical information for health records, scan the literature for the most important articles, or translate medical terminology for patients.

For example, two reports released this year, More Time to Care and Patients before Paperwork, demonstrate significant potential for improving healthcare efficiency. More Time to Care found that approximately 10% of health providers’ time is spent on administrative tasks that could be automated, in part by artificial intelligence. Patients before Paperwork found that rationalising business processes and automating data entry could improve efficiency by about 10%. In Canada, where the report originated, a 10% improvement would be equivalent to 5.5 million patient visits per year for a population of 40 million people.

In clinical decision-making, artificial intelligence helps health providers by examining images, health records, or medical histories and supplying information relevant to a patient’s diagnosis. This gives providers access to more information and speeds up their ability to provide care, which improves the delivery of health care and, ultimately, health outcomes. In addition, artificial intelligence can draw on academic literature across languages, broadening the science available to improve health outcomes.

A recent study shows how a regional health system in Canada used a robust data and artificial intelligence platform to set up a breast cancer learning health system. The solution supported treating physicians by scanning unstructured notes and summarising key facts to aid diagnosis. These notes came from all health providers in the system and spanned patients’ histories over time. The records of 2.5 million people were scanned, and several hundred people were identified as being at risk of breast cancer who would otherwise have been missed. In other words, the AI found people who might otherwise have fallen through the cracks.

Predictive medicine shifts the focus from treatment to prevention. Here, artificial intelligence can analyse health records across a population to find patterns associated with particular health outcomes. AI can then give providers information that helps them offer advice today that leads to better outcomes in the future.

Researchers used AI to find a new antibiotic against a multidrug-resistant superbug, Acinetobacter baumannii. During the study, the researchers trained their neural network to screen 7,500 potential molecules. The network identified the molecules most likely to inhibit the superbug’s growth, and the researchers narrowed these down to the 240 most promising candidates for laboratory testing. Of these, nine were the most effective at inhibiting the bacteria. Further work identified one, RS102895, that was both effective against the bug and safe. This was possible because the AI could screen the candidate molecules and dramatically narrow the researchers’ focus, in a matter of hours rather than the months or years it would normally have taken.

Novel AI approaches to drug development can evaluate millions of possibilities, identify the most promising, and then pass them on to traditional methods of clinical testing. Harnessing the predictive power of AI in this way can accelerate advances in health care.

New examples of artificial intelligence in health are being discovered and reported every day. Alongside these opportunities, it is important to be aware of AI’s risks and to mitigate them.

Risks of artificial intelligence in health

Alongside the opportunities, artificial intelligence in healthcare brings potential risks:

- Privacy breaches: Artificial intelligence is trained on large datasets that may include personal information. Further, the more effective AI solutions are those that use linked datasets, which allow them to find deeper patterns. Given that health data are personal and may be extensively linked, there are privacy risks should the linked data be made available or breached. OpenAI, the creator of ChatGPT, confirmed a data leak in March 2023. It also said that some conversations with the service could be seen by other users who were on ChatGPT at the same time.

- Social injustice: Artificial intelligence is trained on large datasets, many of which may have been built from subsets of the population that leave out marginalised groups or people who are not predominantly English-speaking. Further, care must be taken to ensure that out-of-date social norms are not used for training. Otherwise, the resulting algorithms can be biased and discriminatory, and can cause harm, particularly when the underlying biases are unknown.

- Lack of informed consent: Principles of informed consent generally rely on a linear approach to data collection, access, and use, in which there is a transparent mapping between how the data are used and where and how they were collected. Given the rapid expansion of AI into unforeseen uses, informed consent is nearly impossible to obtain.

- De-humanising healthcare: If artificial intelligence starts taking health actions without a professional in the loop, there is a risk of de-humanising healthcare and generating patient distrust of its recommendations. As noted earlier, three in five Americans would be uncomfortable with their health provider relying on AI for their medical care. There are also concerns that automation could disrupt the health workforce.

- Accountability for harm: As with any uncertain process, there is a risk of harm from using artificial intelligence. Anecdotally, the public’s tolerance for errors made by an algorithm is likely to be far lower than for errors made by a health professional. There are also open questions about where to place accountability and liability should something go wrong.

These risks must be considered when launching artificial intelligence initiatives. The OECD AI Principles and the OECD Framework for the Classification of AI Systems provide good tools for assessing the risks of artificial intelligence so that they can be understood and effectively mitigated.

To AI or not to AI. Is that the question?

There are big opportunities for using artificial intelligence in health; however, there are also big risks. Harm can come both from using AI algorithms and from the opportunities lost by NOT using them. The question is not whether to use AI but how to use it responsibly and transparently. Defining the criteria that deliver the greatest benefits while ensuring strong protections is a challenge that must be taken up urgently.