Canada and the UK compare AI governance efforts and reflect on regulating AI

As Artificial Intelligence (AI) becomes mainstream, policy makers in jurisdictions across the world are considering their role in ensuring that these technologies are overseen safely and responsibly, both to maximise their beneficial use in society and to protect the public from potential harms.

Since 2020, the OECD’s AI Policy Observatory has tracked national AI governance efforts. While international trends in AI oversight objectives are emerging around the OECD’s AI Principles, these efforts remain nascent in most jurisdictions.

With these technologies transcending borders and operating in regions other than the ones in which they were designed and built, it has become urgent for national governments to work together to develop common regulatory capabilities, tools, and competencies as well as a shared understanding of the potential risks and benefits of AI.

To address this pressing challenge, the Responsible AI Institute (RAI Institute) recently convened policy leaders, regulators, standards developers, industry experts, and researchers from the United Kingdom and Canada to compare AI governance efforts in both jurisdictions. Held as a regulatory roundtable, the event was hosted by the UK’s Financial Conduct Authority (FCA), with support from the UK’s Science and Innovation Network in Canada.

With common governance systems, significant investments in AI proposed by both the British and Canadian governments, and an emphasis on sectoral regulation in both nations, the roundtable presented a valuable opportunity to compare approaches and discuss a harmonised strategy.

Appreciating the need to focus on one sector to advance a more practical and actionable dialogue, the roundtable opted to centre discussions around AI in financial services and invited stakeholders and experts from that field. Attendees delivered presentations to guide the dialogue between policy makers, regulators, standards bodies, and researchers from each country, including representatives of the OECD AI Policy Observatory and the AI Incident Database.

A financial services case study

Taking a comparative approach, UK and Canadian regulators presented their emerging policy positions. The Bank of England and the FCA presented their joint work, drawing on the empirical findings of the AI Public-Private Forum (AIPPF), a 15-month joint initiative with AI practitioners to better understand the challenges of adopting AI safely and responsibly in UK financial services. This work led to the joint Bank of England and FCA Discussion Paper on AI, as well as an industry survey. In Canada, upcoming Model Risk Guidance from the Office of the Superintendent of Financial Institutions (OSFI) will address issues such as data governance and model robustness. OSFI also recently published the report Financial Industry Forum on Artificial Intelligence: A Canadian Perspective on Responsible AI.

Applying a sectoral lens to AI governance highlighted that financial services regulation has a framework that can be adapted to meet the novel or amplified risks that new technologies bring. Yet this also raises questions: how can a sectoral approach to AI governance best be integrated into a cross-sectoral approach that is effective yet proportionate? Most importantly, given financial services regulation’s strong focus on financial stability and consumer protection, how can it be aligned with a cross-sectoral technology approach? The roundtable discussions emphasised that the debate over whether AI needs a sectoral or a cross-sectoral regulatory approach has only just begun.

The role of industry standards

After hearing the approaches presented by sectoral experts, participants were asked to break out into discussion groups to give feedback on what they had heard and to identify opportunities for these experts to consider as they move forward, individually and together.

An interesting takeaway from these discussions was that, while participants shared the common sentiment in the responsible AI community “to move from principles to practice,” they recognised the pivotal role industry standards will play in shaping both principles and practice alongside national legislative and sectoral regulatory efforts.

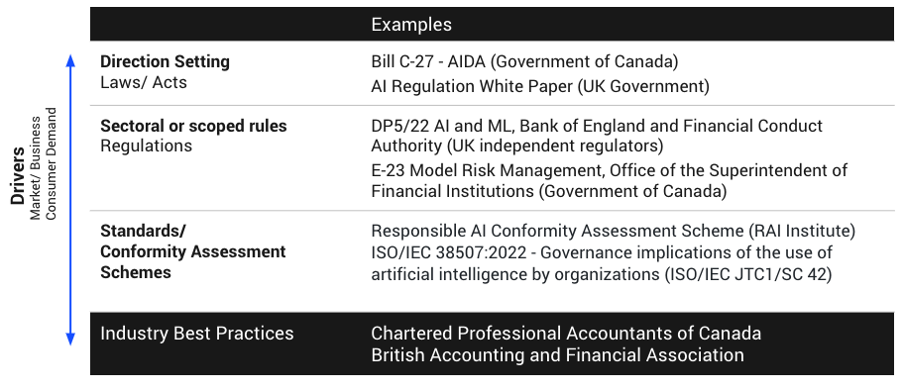

The table above provides examples of how national policy efforts can work together. While the table depicts a linear relationship between these different oversight mechanisms, the roundtable dialogue made clear that they do not need to be developed in this specific sequence.

Having recognised the role of each oversight tool, participants turned to the question: “What can each participant do to expand and advance these efforts in their sector or domain?” Answers included (i) continuing to support broader legislative agendas, such as the adoption of Canada’s proposed Artificial Intelligence and Data Act (AIDA); (ii) working with colleagues in the UK, Canada, and other countries to develop common and interoperable regulatory frameworks; and (iii) supporting the research and development of measurable standards that meet the needs of both the sector and the economy at large.

What roles for standards and regulation?

Presentations from the Standards Council of Canada and the British Standards Institution distinguished between different types of standards, including voluntary and mandatory standards, and covered the history of how standards have supported major legislative agendas. While many are familiar with current efforts to create broad standards such as ISO/IEC 42001, a management system standard for AI, roundtable participants noted that standards need to complement regulation, particularly in highly regulated sectors of the economy such as financial services. Indeed, a main takeaway of the discussions was that financial services-specific use cases could complement the general, cross-cutting AI governance frameworks supported by industry standards. Organisations would, however, still need to meet the requirements of the relevant sectoral frameworks.

Participants recognised that this roundtable was just the start. The discussion will need to advance over the months and years to come. To this end, participants expressed a desire to foster ongoing collaborations between policy makers, standards developers, and researchers in both countries and to continue harmonisation efforts at a future roundtable.

With an eye to applying these lessons to current regulatory efforts, participants agreed that there is value in continuing the bilateral discussion, both to test key concepts from the global AI debate and to explore shared opportunities. Ideas raised included joint standards pilots in both countries, research reports, and mutual advocacy.

Organisers will continue sharing the discussion outcomes with participants in the OECD AI Policy Observatory and other key international organisations to advance shared goals.

AI is already profoundly changing our lives, but we are only beginning to grasp its full potential and the range of policy, regulatory, political, social, ethical, and security risks it poses. AI is increasingly prominent in the UK-Canada relationship, and we are now at a pivotal moment. With similar approaches and values focused on ethics and responsible AI, the UK and Canada are at similar stages of policy and standards development and have launched comparable governmental and regulatory initiatives. Both countries are well placed to show global AI leadership by maximising economic and societal benefits and addressing challenges while advancing the development of global norms, ethics, and governance.