A framework to navigate the emerging regulatory landscape for AI

Global organizations seeking to deploy AI systems responsibly face a complex and quickly evolving legal landscape. Agencies are increasingly issuing guidance on how existing laws apply to AI systems. Meanwhile, lawmakers in the EU, the US, and around the world are considering major new AI-related legislative proposals. Beyond national legal and regulatory frameworks, international governmental and standards organizations are coordinating global efforts to align views on ethical and trustworthy AI and to bolster cross-border interoperability.

So how should organizations deploying AI systems navigate this evolving landscape? Before answering that question, let’s look at some of the major governance efforts on the horizon.

The EU and US are ramping up regulatory efforts

The initial draft of the EU’s Artificial Intelligence Act (AI Act), proposed in April 2021, would prohibit certain uses of AI, such as social scoring by public authorities. It would also require conformity assessments and auditing for AI used in high-risk areas like migration and border control. However, because of interactions with existing laws and differing opinions on appropriate enforcement, the AI Act may not be finalized until 2023.

In the US, efforts to enact AI-related legislation at the federal level are gaining momentum. The draft Algorithmic Accountability Act (AAA) of 2022 was proposed by Senator Ron Wyden, Senator Cory Booker, and Representative Yvette Clarke. It would require developers and users of certain AI systems to conduct algorithmic impact assessments, and it would build regulatory capacity at the Federal Trade Commission (FTC). The draft AAA may need to evolve significantly to gain the bipartisan support required for enactment, possibly as part of a broader legislative package.

At the same time, the executive branch and federal agencies are acting under their existing authorities. The White House’s Office of Science and Technology Policy (OSTP) is formulating an AI Bill of Rights. The Equal Employment Opportunity Commission (EEOC) has launched an Initiative on AI and Algorithmic Fairness. The FTC enforces key consumer protection laws like the Fair Credit Reporting Act (FCRA) and the Equal Credit Opportunity Act (ECOA), and it is expected to significantly increase its oversight and enforcement of AI system use.

International efforts to set standards for AI

Policymakers around the world are working to harmonize policy and regulatory approaches to AI systems and to promote interoperability at the organizational and technical levels. Interoperability, in turn, would bolster trade and allow smaller organizations to scale internationally.

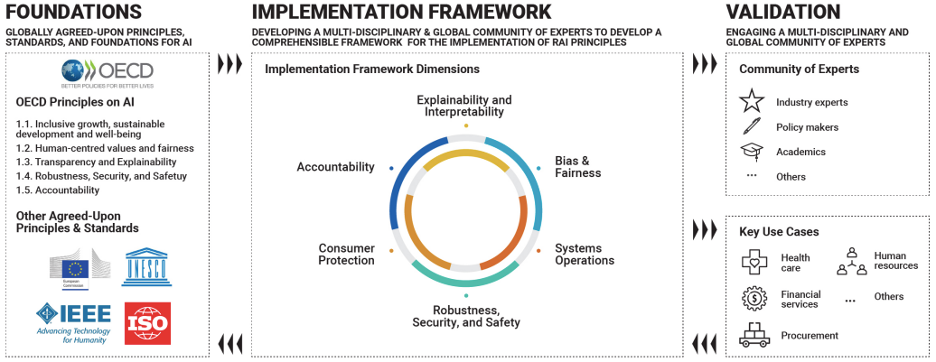

Since the Organisation for Economic Co-operation and Development (OECD) put forth its AI Principles in 2019, it has worked to help member countries put them into practice. To guide this effort, the OECD established the OECD.AI Network of Experts. This network brings together policy, industry, and technical experts to discuss policy approaches, classification, risk, tools, accountability, and AI compute. OECD.AI, the organization’s AI Policy Observatory, is a platform that tracks and shares global developments in AI policy and data. The OECD Working Party on AI Governance (AIGO) reviews national AI policies.

Beyond the OECD, the Global Partnership on Artificial Intelligence (GPAI) is a multistakeholder initiative that brings together experts to support “cutting-edge research and applied activities on AI-related priorities”. Its membership has expanded from 15 members at its launch in 2020 to 25 members today.

Standards bodies are also active. The International Organization for Standardization (ISO) and other organizations are developing AI-related standards. In November 2021, the United Nations Educational, Scientific and Cultural Organization (UNESCO) adopted its Recommendation on the Ethics of Artificial Intelligence, a global standard-setting instrument.

Aligning AI standards to AI systems

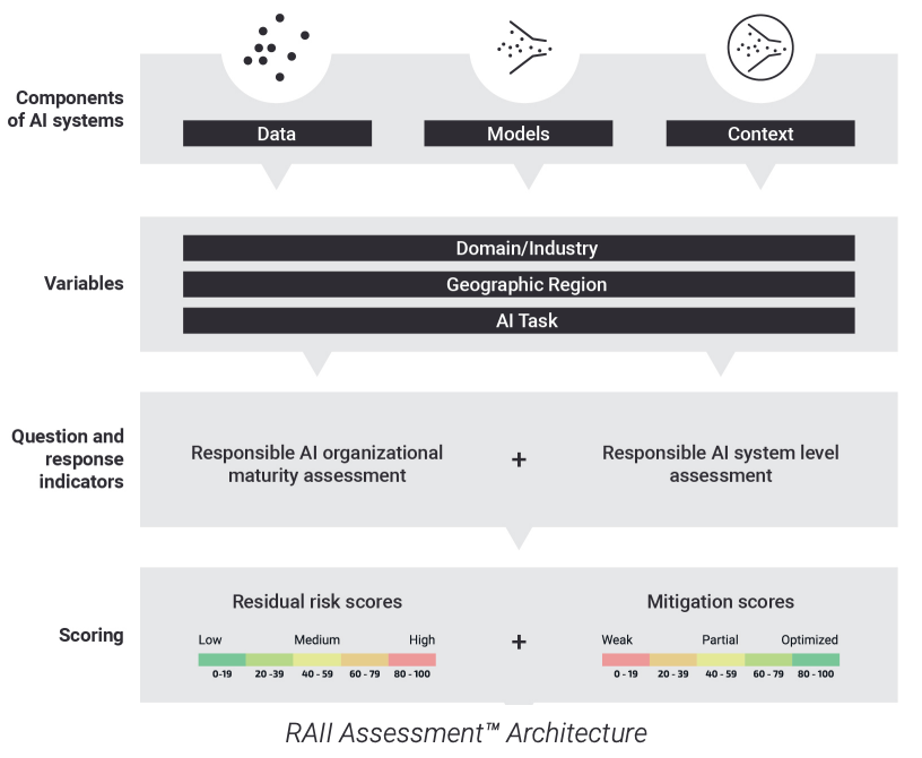

To guide organizations as they navigate the evolving landscape of proposed laws, regulations, and standards, the Responsible AI Institute (RAII) has mapped over 200 AI-related international principles and policy documents. Many of these are tracked by country on the OECD AI Policy Observatory. This mapping has informed RAII’s Implementation Framework, which sets out requirements and a suite of questions for evaluating AI systems.

To cover the wide variety of AI systems, RAII works with experts to configure its evaluations for specific use cases, from automated lending to automated diagnosis and treatment. Through its Community of Experts, RAII also convenes working groups of experienced practitioners, engineers, designers, and policymakers. These working groups discuss how to apply RAII’s Implementation Framework to real-world AI systems in specific contexts.

The proposed EU AI Act, the US AAA, and industry-specific AI laws and guidance would require organizations to conduct algorithmic impact assessments, conformity assessment procedures, or algorithmic audits on certain AI systems. These would apply both before deployment and on an ongoing basis. RAII’s Implementation Framework serves as an assessment and audit tool that can guide corporate boards in each of these areas. It interprets existing laws and accounts for the approaches and requirements proposed in laws and standards still under development. It also incorporates industry best practices for internal policies, procedures, testing, and monitoring. In addition, it covers use-case- and context-specific considerations.

RAII’s Implementation Framework can guide organizations as they navigate enterprise-level concerns, like developing governance for AI initiatives and integrating Machine Learning Operations (MLOps), Model Risk Management (MRM), and Environmental, Social, and Governance (ESG) considerations. It can also inform more granular requirements, such as AI system documentation that demonstrates regulatory compliance.

A standards certification program for AI systems

RAII is also developing a certification program that will enable organizations to certify that their AI systems conform to the appropriate ISO standard. This approach aligns with recent research suggesting that international AI standards may provide “a path towards effective global solutions where national rules may fall short”. Similarly, the UK Centre for Data Ethics and Innovation (CDEI) emphasizes the importance of trustworthy information about whether organizations are following the rules.

RAII’s Implementation Framework is based on globally agreed-upon principles, incorporates requirements from enacted and proposed regulations, and is calibrated for specific industries, use cases, and contexts. It can provide valuable guidance to organizational boards as they align their corporate governance with emerging AI-related laws and standards.