Singapore’s A.I. Verify builds trust through transparency

At the World Economic Forum’s Annual Meeting in Davos on 25 May 2022, Singapore’s Minister for Communications and Information, Mrs Josephine Teo, announced the launch of A.I. Verify, a voluntary Artificial Intelligence (AI) governance testing framework and toolkit that verifies the performance of an AI system against the developer’s claims and with respect to internationally accepted AI ethics principles. A.I. Verify helps companies to be more transparent about what their AI systems can and cannot do. This keeps stakeholders better informed about the capabilities of the AI systems they interact with, and so helps to build trust in AI through transparency.

The need for AI governance testing

As more products and services use AI to provide greater personalisation or to make autonomous predictions, the public needs to be assured that AI systems are fair, explainable, and safe and that the companies deploying the systems do so with transparency and accountability. Voluntary AI governance frameworks and guidelines have been published to help system owners and developers implement trustworthy AI products and services. Singapore has been at the forefront of international discourse on AI ethics and governance, and has been guiding industry in the responsible development and deployment of AI since 2018. The Infocomm Media Development Authority Singapore (IMDA) and Personal Data Protection Commission (PDPC) published the Model AI Governance Framework (now in its 2nd edition), a companion Implementation and Self-Assessment Guide for Organisations, and a two-volume Compendium of Use Cases that provide practical and implementable measures for industry’s voluntary adoption.

Voluntary self-assessment is a start. With greater maturity and more pervasive adoption of AI, the AI industry needs to demonstrate to its stakeholders that it is using responsible AI objectively and that this claim is verifiable. Thus, IMDA and PDPC took the first step to develop an AI Governance Testing Framework and Toolkit – A.I. Verify – to allow industry actors to show that they deploy responsible AI. This is done through a combination of technical tests and process checks. A.I. Verify is currently available as a Minimum Viable Product (MVP) and pilot for system developers and owners who want to be more transparent about the performance of their AI systems.

With this MVP, Singapore hopes to achieve the following objectives:

- Enable trust-building between businesses and their stakeholders. The MVP allows businesses to enhance stakeholders’ trust. Businesses determine their own benchmarks and demonstrate that their AI systems perform as they claim.

- Facilitate the interoperability of AI governance frameworks. The MVP addresses common principles of trustworthy AI and can potentially help businesses bridge different AI governance frameworks and regulations. IMDA is working with regulators and standards organisations to map the MVP to established AI frameworks. This helps businesses that offer AI-enabled products and services in multiple markets.

- Contribute to the development of international AI standards. Singapore participates as a member in ISO/IEC JTC1/SC 42 on Artificial Intelligence. Through industry adoption of A.I. Verify, Singapore aims to work with AI system owners and developers globally to collate industry practices and build benchmarks that can help develop international standards on AI governance.

What is the A.I. Verify MVP and why an international pilot?

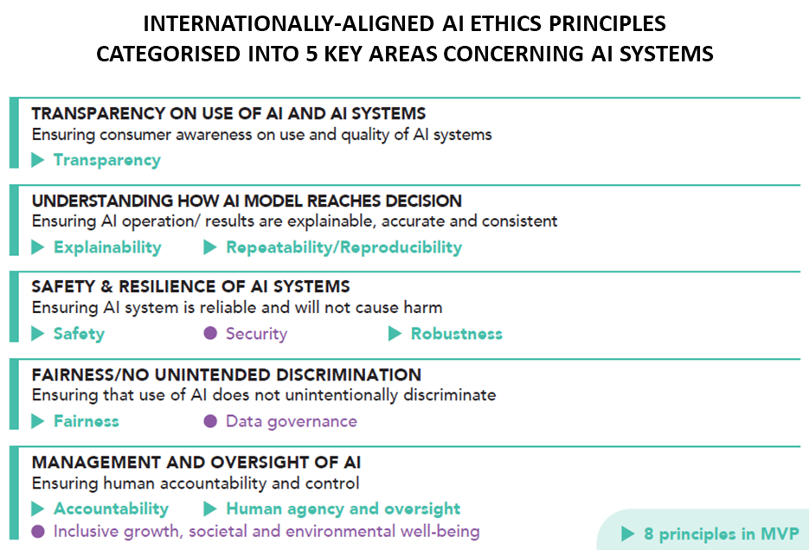

The A.I. Verify MVP has two components: a governance testing framework and a toolkit for testing AI systems against it. The framework is aligned with internationally accepted AI ethics principles, guidelines, and frameworks, such as those from the EU and OECD.

The toolkit is a software package that can be downloaded and executed locally in business environments to generate testing reports for engineers and management. Companies have full control over their AI models and data when using the A.I. Verify toolkit to conduct self-testing.

A combination of technical tests and process checks allows us to assess AI systems against the 8 AI ethics principles. Technical tests are conducted on AI models for explainability, robustness and fairness, while process checks are conducted on all 8 principles. The table below shows an overview of the scope of A.I. Verify tests.

| Principle | Technical Test | Process Checks |
|---|---|---|
| Transparency | | Evidence (e.g., policy, communications collateral) of providing appropriate information to individuals who may be impacted by the AI system: intended use, limitations, risk assessment (without compromising IP, safety, system integrity) |
| Explainability | Factors contributing to AI model’s output | Evidence of considerations given to choice of AI models |
| Repeatability/reproducibility | | Evidence of AI model provenance and data provenance |
| Safety | | Evidence of materiality/risk assessments, identification/mitigation of known risks, evaluation of acceptable residual risks |
| Robustness | Model performs as expected even when encountering unexpected input | Evidence of review of factors that may affect the performance of AI model, including adversarial attack |
| Fairness | Protected/sensitive attributes specified by AI system owner do not contribute to algorithmic bias of model, checked by comparing model output against ground truth | Evidence of strategy for selecting fairness metrics; definitions of sensitive attributes are consistent with legislation and corporate values |
| Accountability | | Evidence of clear internal governance mechanisms for proper management oversight of AI system’s development/deployment |
| Human agency & oversight | | Evidence that AI system is designed in a way that will not reduce humans’ ability to make decisions or to take control of the system (e.g., human-in-the-loop) |
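
To make the fairness technical test in the table above more concrete, the sketch below compares model output against ground truth separately for each value of a sensitive attribute and reports the largest gap between groups. It is an illustration only and does not represent the A.I. Verify toolkit or its API: the function names `group_rates` and `max_gap`, the choice of accuracy and true positive rate as metrics, and the toy data are all assumptions made for this example.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group accuracy and true positive rate for binary labels (0/1).

    Hypothetical helper for illustration; not part of any real toolkit.
    """
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "pos": 0, "true_pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        if truth == 1:
            c["pos"] += 1
            c["true_pos"] += int(pred == 1)

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "accuracy": c["correct"] / c["n"],
            # TPR is undefined when a group has no positive ground-truth cases.
            "tpr": c["true_pos"] / c["pos"] if c["pos"] else None,
        }
    return rates


def max_gap(rates, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in rates.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0


# Toy data (assumed): ground truth, model output, and a sensitive attribute.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = group_rates(y_true, y_pred, groups)
print(round(max_gap(rates, "accuracy"), 3))  # 0.0
print(round(max_gap(rates, "tpr"), 3))       # 0.167
```

In this toy case both groups have the same accuracy, yet the true positive rate differs between them. This is one reason the corresponding process check asks for evidence of a strategy for selecting fairness metrics: the system owner, not the test, decides which metric and which size of gap are acceptable.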

We would like to emphasise a few points. The A.I. Verify MVP:

- does not define ethical standards. It aims to provide a way for AI system developers and owners to demonstrate their claims about the performance of their AI systems vis-à-vis the 8 selected AI ethics principles;

- is not a guarantee that AI systems tested with this Framework will be free from risks or biases or completely safe;

- is used by AI system developers and owners to conduct self-testing so that data and models remain in the company’s operating environment.

An invitation to participate in the international pilot

The A.I. Verify MVP went through early-stage testing with a small group of industry partners to obtain their feedback. We are now inviting companies from the broader industry to join the international pilot phase of the MVP. Participants will have the unique opportunity to:

- have early and full access to an internationally-aligned AI Governance Testing Framework and Toolkit MVP and use it to conduct self-testing on their AI systems/models;

- produce reports to demonstrate transparency and build trust with their stakeholders;

- provide feedback to IMDA to help shape the MVP so that it can reflect the industry’s needs and bring it benefits; and

- join the AI testing community to network, share and collaborate with other participating companies to build industry benchmarks and contribute to international standards development.

Learning together as an AI testing community

As AI governance testing is still nascent, we need to collaborate with all stakeholders to develop standards and methods of verifying trustworthy AI. To this end, IMDA is creating an AI Testing Community, which will initially comprise policy makers, regulators, AI system developers, owners and anyone who provides technology solutions.

It is envisaged that as more AI system developers and owners use A.I. Verify to validate the performance of their AI models, we can crowd-source and co-develop benchmarks for acceptable levels of adherence to each principle for different industries and use cases. We can also work with technology solution providers to identify gaps and development opportunities in current methods of testing AI models and devise better ways to verify whether AI models have met the desired standards for trustworthy AI. Once released, A.I. Verify will be part of the OECD’s Catalogue of Tools for Trustworthy AI.

A key element in building trust is communicating effectively to all stakeholders about the behaviour of AI applications through testing reports. These stakeholders include regulators, board members, senior management, business partners, auditors, customers and consumers. They need different information at varying levels of detail to facilitate their decision-making. Hence, customised report templates addressing specific stakeholders’ information needs will need to be developed.

These goals cannot be achieved without the collective wisdom and efforts of the AI community. As part of community building, IMDA/PDPC will organise roundtables for the industry to engage regulators in early thinking about policy, as well as industry-specific workshops to develop consensus on industry benchmarks for trustworthy AI. These benchmarks can then be shared with international standards bodies as Singapore’s contribution to the global discourse on standards building.

Developing a sustainable ecosystem

Companies will need a sustainable AI testing and certification ecosystem if they are going to reap value and gain trust from AI governance testing. This ecosystem will need the participation not only of AI system developers, owners and technology solution providers, but also of:

- Third-party testing service providers to conduct independent testing;

- Advisory or consultancy service providers to help companies who do not have the know-how, expertise or resources to conduct governance testing themselves;

- Certification bodies to approve third-party testing reports and certify performance claims about AI models; and

- The research community to conduct research and develop new testing technologies to keep up with the rapid pace of AI development.

This is how Singapore will engage AI ecosystem players to explore viable modes of operations to create value for everyone concerned.

Towards international industry benchmarks for AI principles?

AI ethics principles may be universal, but their interpretation and implementation are often influenced by cultural and geographic variations. These variations could lead to fragmented AI governance frameworks, which, in turn, may raise barriers to implementing trustworthy AI for companies and hinder their capacity to provide AI-enabled products and services across borders.

A.I. Verify is Singapore’s first step to identifying and defining an objective and verifiable way to validate the performance of AI systems. The international pilot will facilitate the collation and development of industry benchmarks for AI ethics principles. We look forward to working with like-minded players in this learning journey.