A socio-technical approach to AI literacy: a quick guide

AI literacy is a cornerstone of organisational AI readiness. It develops the knowledge, skills, and competencies needed to use AI technologies effectively and to assess them critically. In essence, AI literacy equips individuals and organisations to approach AI tools both effectively and ethically. This includes evaluating outputs and exercising human oversight, adapting to an evolving technological landscape, aligning with EU digital priorities, and building robust policy-by-design.

AI skills in the age of AI

As AI’s presence in the workplace grows, so does the demand for professionals with AI competencies. A recent Resume Genius survey of 1 000 hiring managers found that 81% now consider AI-related skills a hiring priority, placing them among the most sought-after capabilities in 2025. The report also notes that 7 out of 10 executives say the pace of change at work is accelerating.

Yet while some people use AI tools to improve efficiency and productivity in their studies or work, most users remain uncertain about, or lack confidence in, their AI competencies. The supply of AI-literate individuals has not kept pace with demand.

Beyond technical skills

Traditional approaches to AI literacy mostly fall into two camps. One focuses on highly technical training in programming and machine learning, while the other concentrates on discussions of ethics, AI’s societal implications, and related principles such as privacy, security, fairness, and transparency. The latter is crucial because AI systems are embedded within complex webs of human organisations, cultural contexts and institutional frameworks.

Both are valuable, but neither alone captures the full picture. Bringing them together creates a socio-technical approach, which recognises that AI’s risks and benefits arise not only from the technical design and deployment of systems but also from who operates them and the social contexts in which they operate. The same AI tool can empower workers in one setting and displace them in another, depending on organisational decisions, management practices, and workplace culture. In short, an AI system’s effectiveness and impact depend as much on its operators and on the organisational norms and cultural contexts in which it functions as on its algorithmic design and deployment context.

Bridging the divide

One of the most compelling aspects of a socio-technical approach is that it appeals to technical and non-technical stakeholders alike. When AI literacy covers both what systems can do and what they mean for the organisation, it enables meaningful cross-functional collaboration. This integration is essential.

As the World Economic Forum’s Future of Jobs Report outlines, 40% of employers anticipate reducing their workforces by 2030 as AI automates tasks. Organisations therefore need employees who can not only use AI tools but also understand how those tools reshape work processes, decision-making structures and human roles.

With a deeper understanding of AI, users can develop a critical perspective on the capabilities and limitations of different AI technologies. They can also make use of advanced features, tools and prompting techniques to generate better outputs.

For example, AI chatbots analyse patterns in their training data to predict the most likely responses to prompts. But that data tends to reflect the language conventions and assumptions of dominant perspectives, flaws and biases included. As a result, outputs typically echo common, mainstream viewpoints found on the internet. These are useful for general explanations or for brainstorming broad ideas, but less helpful where specialised expertise, original thinking, or culturally nuanced responses are needed. AI-literate employees know how to use AI to augment their roles, so they can focus their efforts where they make the most difference.

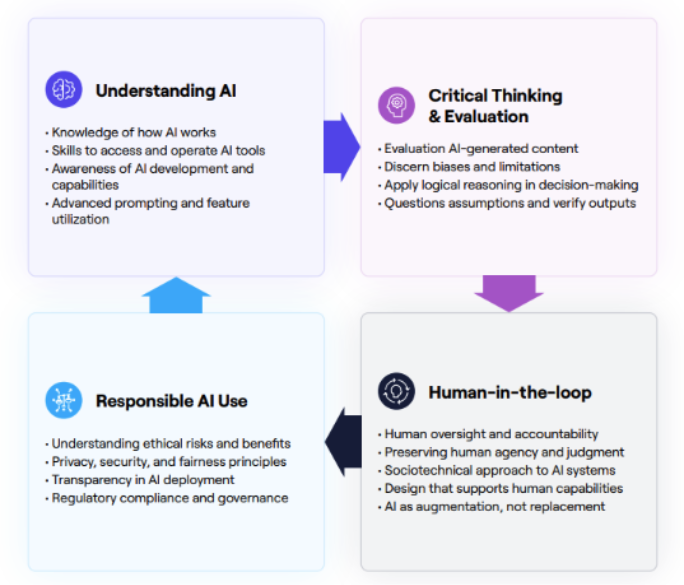

The four core dimensions of AI literacy

The four dimensions of AI literacy provide a comprehensive framework for engaging with AI technologies:

- Understanding AI covers knowledge of how AI works, the skills needed to access and operate common tools, and awareness of AI’s continuous development.

- Critical thinking and evaluation involve the ability to assess AI-generated content, detect biases and apply logical reasoning.

- Responsible AI use means understanding the ethical risks and benefits of AI deployment, as well as the principles that guide responsible use, including privacy, security, fairness, transparency, and regulatory compliance.

- Human-in-the-loop covers AI’s influence on human behaviour and the importance of human-centric practices that preserve human agency, oversight, and accountability. A socio-technical approach is crucial here: it highlights the need to design AI systems and workflows that support rather than supplant human capabilities and that keep human judgment central to AI-assisted processes.

Maintaining a human-centred approach

Humans must lead all AI endeavours. The individual choices we make about when, how, and why we use AI tools determine whether those tools are beneficial or detrimental. By placing human agency at the core of AI, we recognise our individual and collective responsibility and accountability for using AI technology appropriately, including mitigating its negative impacts and ensuring its benefits are shared equitably.

This approach underscores that safety outcomes depend on both technical design and the broader human context, including power dynamics, labour considerations, and institutional practices.

It is time to reshape and spread comprehensive AI literacy

AI is fundamentally reshaping how we learn, work, and make decisions across industries and sectors. As the technology changes rapidly and adoption spreads, the challenge for organisations is not simply to implement these tools but to align them strategically with organisational goals while understanding their broader social and ethical implications.

Meeting this challenge requires more than technical training. It demands a comprehensive approach to AI literacy that prepares individuals to navigate both the capabilities and consequences of AI systems.

A socio-technical framework for AI literacy underscores that neither technical skills nor ethical awareness alone can prepare a future-ready workforce. The pace of AI development requires iterative, collaborative education models in which shared understanding drives innovation, cross-functional learning reduces competency gaps, and institutional frameworks promote responsible governance practices.

By combining disciplines and perspectives, a socio-technical partnership between technologists, regulators and AI innovators can ensure that AI development and deployment prioritise societal well-being alongside innovation.

When combined with a socio-technical model, the four-dimension framework enables a more comprehensive approach to developing AI literacy. It bridges the gap between technical understanding and practical application, allowing for literacy programmes that are contextually informed, socially aware, and effective in equipping individuals to deploy and govern AI responsibly.