What is AI? Can you make a clear distinction between AI and non-AI systems?

How does one define AI? And how does one clearly distinguish between AI and non-AI machine-based systems? To date, the answer is as elusive as the question is simple. Many of the world’s smartest people have tried to come up with a solid definition that everyone agrees upon, but consensus has remained out of reach. There is no clear red line, only a continuum of features characterising what we think of as artificial intelligence and where the “magic” happens. As AI becomes more sophisticated and diverse, some technologies, such as optical character recognition (OCR), which were once widely considered AI, no longer are, at least by the general public, even though they employ textbook AI methods. This goes to show that AI technologies are developing at a rapid pace, and additional techniques and applications will likely emerge in the future.

OECD countries started working on a definition six years ago, in 2018, and the discussions have been lengthy and detailed. While they have not found the holy grail of definitions, they have reached a consensus on one. And that is a first. From the outset, we decided not to define “AI” but rather an “AI system,” which is a more tangible and actionable concept, especially in a policymaking context. For the sake of clarity, here are some explanations about what constitutes AI and the thoughts that went into the OECD definition of an AI system.

Countries revised the definition in 2023. For reference, here are the revisions to the definition of an “AI system” in detail, with additions set out in bold and deletions in strikethrough:

An AI system is a machine-based system that ~~can~~, for ~~a given set of human-defined~~ **explicit or implicit** objectives, **infers, from the input it receives, how to generate outputs such as** ~~makes~~ predictions, **content,** recommendations, or decisions **that can influence** ~~influencing~~ **physical** ~~real~~ or virtual environments. **Different** AI systems ~~are designed to operate with varying~~ **vary in their** levels of autonomy **and adaptiveness after deployment**.

The revised definition:

An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.

Topics typically encompassed by the term “AI” and in the definition of an AI system include categories of techniques such as machine learning and knowledge-based approaches; application areas such as computer vision, natural language processing, speech recognition, intelligent decision support systems, and intelligent robotic systems; and specific applications of these tools in different domains.

The OECD just released an explanatory memorandum on its updated definition of an AI system. In this blog post, we explain the main points.

Input, including data

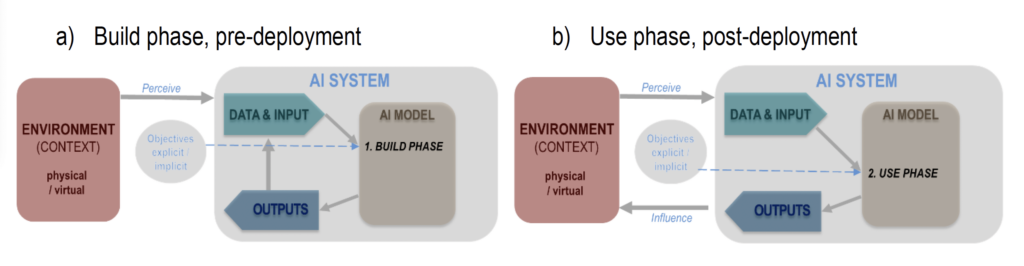

Input is used both during development and after deployment. Input can take the form of knowledge, rules, and code that humans put into the system during development or data. Humans and machines can provide input. During development, input is leveraged to build AI systems, e.g., with machine learning that produces a model from training data and/or human input. Input is also used by a system in operation, for instance, to infer how to generate outputs. Input can include data relevant to the task to be performed or take the form of, for example, a user prompt or a search query.

Illustrative, simplified overview of an AI system

Note: This figure presents only one possible relationship between the development and deployment phases. In many cases, the design and training of the system may continue in downstream uses. For example, deployers of AI systems may fine-tune or continuously train models during operation, which can significantly impact the system’s performance and behaviour.

Types of AI systems and how they are built

The definition that OECD countries agreed to use describes the characteristics of machines considered to be AI, including what they do and how they are used, but also how they are built.

Although different interpretations of the word “model” exist, in this document, an AI model is a core component of an AI system used to make inferences from inputs to produce outputs. Prior to deployment, an AI system is typically built by combining one or more “models” developed manually or automatically (e.g., with reasoning and decision-making algorithms) based on machine and/or human inputs/data.

Explanatory memorandum on the updated OECD definition of an AI system

- Machine learning is a set of techniques that allows machines to improve their performance and usually generate models in an automated manner through exposure to training data, which can help identify patterns and regularities, rather than through explicit instructions from a human. The process of improving a system’s performance using machine learning techniques is known as “training”.

- Symbolic or knowledge-based AI systems typically use logic-based and/or probabilistic representations, which may be human-generated or machine-generated. These representations rely on explicit descriptions of variables and of their interrelations. For example, a system that reasons about manufacturing processes might have variables representing factories, goods, workers, vehicles, machines, and so on.

- Symbolic AI may also use machine learning. For example, inductive logic programming learns symbolic logical representations from data, and decision-tree learning learns symbolic rules in the form of a tree of logical conditions.
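To make the last point concrete, here is a minimal Python sketch contrasting a hand-written rule with a rule learned from data by a one-level decision tree (a "decision stump"). The temperature-alarm scenario, the sample data, and the names `handwritten_rule` and `learn_stump` are hypothetical illustrations, not part of the OECD text; real decision-tree learners handle many features and deeper trees.

```python
from typing import Callable, List, Tuple

Sample = Tuple[float, str]  # (temperature reading, label); hypothetical scenario

def handwritten_rule(temperature: float) -> str:
    """Knowledge-based: a human engineer chose the 80.0 threshold."""
    return "alarm" if temperature > 80.0 else "ok"

def learn_stump(samples: List[Sample]) -> Tuple[float, Callable[[float], str]]:
    """Machine learning: derive a one-condition symbolic rule from labelled data.

    Candidate thresholds lie midway between adjacent distinct readings; the one
    that misclassifies the fewest training samples is kept.
    """
    values = sorted({x for x, _ in samples})
    if len(values) < 2:
        raise ValueError("need at least two distinct readings")
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def training_errors(threshold: float) -> int:
        return sum(("alarm" if x > threshold else "ok") != y for x, y in samples)

    best = min(candidates, key=training_errors)
    return best, lambda x: "alarm" if x > best else "ok"

# Hypothetical labelled readings.
data = [(60.0, "ok"), (70.0, "ok"), (75.0, "ok"),
        (85.0, "alarm"), (90.0, "alarm"), (95.0, "alarm")]
threshold, learned_rule = learn_stump(data)
print(f"IF temperature > {threshold} THEN alarm ELSE ok")  # IF temperature > 80.0 THEN alarm ELSE ok
```

Both functions end up expressing the same symbolic rule; the difference lies in where the threshold came from, a human in one case and the training data in the other.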

People tend to consider that AI systems built using machine learning exhibit some intelligence because, during training, they figure out relationships between model parameters without precise instructions. For example, language models are given large amounts of language resources and the objective to “predict the next word”, producing a trained model that appears to respond intelligently to prompts. So, what looks like intelligence to AI researchers occurs in the building phase, whereas what looks like “intelligence” to end users occurs at runtime, after construction.
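The split between the building phase and runtime can be illustrated with a drastically simplified next-word predictor. This Python sketch counts word pairs (a bigram model) rather than training a neural network, so it is only an analogy for how language models work; the toy corpus and the names `train_bigram` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

Model = Dict[str, Counter]

def train_bigram(corpus: str) -> Model:
    """Build phase: record which word follows which in the training text."""
    counts: Model = defaultdict(Counter)
    words = corpus.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(model: Model, word: str) -> Optional[str]:
    """Runtime: return the follower seen most often in training, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    # Ties go to the follower encountered first during training.
    return followers.most_common(1)[0][0]

model = train_bigram("the cat sat on the mat the cat ate the fish")
print(predict_next(model, "the"))  # cat
print(predict_next(model, "dog"))  # None
```

No one wrote the rule "after *the*, say *cat*"; it was extracted from the data during the build phase, and at runtime the model only applies it.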

But when building a symbolic AI system manually, humans supply the knowledge and the vocabulary in which it is expressed. Here, we consider the knowledge engineer to be the source of part of the intelligence – i.e., the AI system does not discover the knowledge it uses from its own experience. On the other hand, the AI system may perform very complex reasoning that contributes to the overall intelligence of the system. For example, Deep Blue, like AlphaZero, is given the rules of the game by human engineers; those rules allow it to play legal moves, but not good moves. The good moves come largely from its prodigious ability to reason about future trajectories in the game.
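The point that rules give legal moves while reasoning gives good ones can be sketched with a much smaller game. The Python below uses a hypothetical single-pile Nim variant (take 1 to 3 stones; whoever takes the last stone wins) and exhaustive minimax search written in negamax form. It is a simplified stand-in: Deep Blue used far more elaborate alpha-beta search with a human-designed evaluation function, and AlphaZero combines tree search with a learned neural network.

```python
from functools import lru_cache
from typing import List

MAX_TAKE = 3  # Human-supplied rule: a turn removes 1 to MAX_TAKE stones.

def legal_moves(stones: int) -> List[int]:
    """The rules: they say which moves are allowed, not which are good."""
    return [n for n in range(1, MAX_TAKE + 1) if n <= stones]

@lru_cache(maxsize=None)
def can_force_win(stones: int) -> bool:
    """Search: can the player to move force a win from this position?

    A position is winning if some legal move leaves the opponent in a losing one.
    With no stones left, the player to move has already lost.
    """
    return any(not can_force_win(stones - n) for n in legal_moves(stones))

def best_move(stones: int) -> int:
    """Choose a move leaving the opponent a losing position; otherwise any legal move."""
    moves = legal_moves(stones)
    if not moves:
        raise ValueError("game over: no legal moves")
    for n in moves:
        if not can_force_win(stones - n):
            return n
    return moves[0]

print(legal_moves(10))  # [1, 2, 3]
print(best_move(10))    # 2 (leaves 8 stones, a losing position for the opponent)
```

The search discovers by itself that leaving a multiple of four stones wins, a strategy that appears nowhere in the rules it was given.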

Autonomy and adaptiveness

An AI system’s objective setting and development can always be traced back to a human who originates the AI system development process, even when the objectives are implicit. However, some AI systems can “adapt” or develop implicit sub-objectives and sometimes set objectives for other systems. Human agency, autonomy, and oversight vis-à-vis AI systems are critical values in the OECD AI Principles that depend on the context of AI use.

- AI system autonomy means the degree to which a system can learn or act without human involvement following the delegation of autonomy and process automation by humans. Human supervision can occur at any stage of an AI system’s lifecycle, such as during AI system design, data collection and processing, development, verification, validation, deployment, or operation and monitoring. Some AI systems can generate outputs without specific instructions from a human.

- Adaptiveness, contained in the revised definition of an AI system, is usually related to AI systems based on machine learning that can continue to evolve their models after initial development. Examples include a speech recognition system that adapts to an individual’s voice or a personalised music recommender system. AI systems can be trained once, periodically, or continually. Through such training, some AI systems may develop the ability to perform new forms of inference not initially envisioned by their developers. The concept of post-deployment adaptation is highly significant for the regulation of AI because it means that assurances concerning the performance and safety of the system at the time of deployment may be invalidated by subsequent adaptation. Thus, assurances must be obtained for all future versions of the system under all possible future data trajectories, which is a considerably more difficult problem than assuring a static system. This difficulty may be alleviated to some extent by incorporating automated testing as part of the adaptation process so that, for example, no bias creeps into a system that is initially certified as fair.
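The idea of building automated testing into the adaptation process can be sketched as a gate: the system proposes an updated model from new data, but the update is deployed only if it still passes a fixed check. In this Python sketch everything is a hypothetical illustration: the threshold classifier, the median-based update, the audit set, the 0.3 tolerance, and the choice of demographic parity (the gap in approval rates between groups) as the fairness test, which is only one of several competing fairness metrics.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Tuple

@dataclass(frozen=True)
class ThresholdModel:
    threshold: float

    def approve(self, score: float) -> bool:
        return score >= self.threshold

# Hypothetical fixed audit set of (score, group) pairs used by the automated test.
AUDIT: List[Tuple[float, str]] = [
    (0.1, "A"), (0.3, "A"), (0.5, "A"), (0.7, "A"),
    (0.4, "B"), (0.6, "B"), (0.8, "B"), (0.9, "B"),
]
TOLERANCE = 0.3  # hypothetical maximum acceptable approval-rate gap

def parity_gap(model: ThresholdModel, audit: List[Tuple[float, str]]) -> float:
    """Difference between the highest and lowest per-group approval rates."""
    rates = []
    for group in sorted({g for _, g in audit}):
        scores = [s for s, g in audit if g == group]
        rates.append(sum(model.approve(s) for s in scores) / len(scores))
    return max(rates) - min(rates)

def adapt(current: ThresholdModel, recent_scores: List[float]) -> ThresholdModel:
    """Propose a new threshold from recent data; keep the old model if the test fails."""
    candidate = ThresholdModel(median(recent_scores))
    if parity_gap(candidate, AUDIT) <= TOLERANCE:
        return candidate
    return current

model = ThresholdModel(0.5)                # initially certified: gap is 0.25
model = adapt(model, [0.40, 0.45, 0.50])   # gap 0.25, update accepted
model = adapt(model, [0.70, 0.75, 0.80])   # gap 0.5, update rejected
print(model.threshold)  # 0.45
```

A single check like this cannot cover all possible future data trajectories; it only catches violations of the specific property being tested, on the specific audit data.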

AI system objectives

AI system objectives can be explicit or implicit. For example, they can belong to the following categories that may overlap in some systems:

- Explicit and human-defined. In these cases, the developer encodes the objective directly into the system, e.g., through an objective function. Examples of systems with explicit objectives include simple classifiers, game-playing systems, reinforcement learning systems, combinatorial problem-solving systems, planning algorithms, and dynamic programming algorithms.

- Implicit in rules and policies. Rules, typically human-specified, dictate the action to be taken by the AI system according to the current circumstance. For example, a driving system might have a rule, “If the traffic light is red, stop.” However, these systems’ underlying objectives, such as compliance with the law or avoiding accidents, are not explicit in the system, even if they are apparent to and intended by the human designer.

- Implicit in training data. This is where the ultimate objective is not explicitly programmed but incorporated through training data and a system architecture that learns to emulate those data, e.g., training large language models to imitate human linguistic behaviour. In such cases, the human engineer may not know what objectives are implicit in the data.

- Not fully known in advance. Some systems may help humans by learning more about their objectives through interactions. Examples include recommender systems that use “reinforcement learning from human feedback” to gradually narrow down a model of individual users’ preferences.
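The first two categories can be contrasted in a short Python sketch. In the first part, the objective is written down as a function that the system minimises; in the second, a rule dictates the action and the goals behind it never appear in the code. The route data, the risk weight of 10, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Route:
    name: str
    minutes: float
    risk: float  # hypothetical accident-risk score between 0 and 1

RISK_WEIGHT = 10.0  # hypothetical trade-off chosen by the developer

def objective(route: Route) -> float:
    """Explicit, human-defined objective: lower is better."""
    return route.minutes + RISK_WEIGHT * route.risk

def choose_route(routes: List[Route]) -> Route:
    """The system searches for the option that optimises the stated objective."""
    return min(routes, key=objective)

def traffic_light_rule(light: str) -> str:
    """Rule-based: the objectives (obey the law, avoid accidents) stay implicit."""
    return "stop" if light == "red" else "proceed"

routes = [Route("A", 20, 0.5), Route("B", 22, 0.1), Route("C", 18, 1.0)]
print(choose_route(routes).name)  # B (score 23.0 versus 25.0 and 28.0)
print(traffic_light_rule("red"))  # stop
```

Changing `RISK_WEIGHT` changes the system's behaviour through its stated objective, whereas the traffic-light rule can only be changed by rewriting the rule itself.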

“Inferring how to” generate outputs

The concept of “inference” generally refers to the step in which a system generates an output from its inputs, typically after deployment. When performed during the build phase, inference, in this sense, is often used to evaluate a version of a model, particularly in the machine learning context. In the context of this explanatory memorandum, “infer how to generate outputs” should be understood as also referring to the build phase of the AI system, in which a model is derived from inputs/data.
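The senses of "inference" described above can be separated in a small Python sketch that uses an ordinary least-squares line fit as the simplest possible model. Deriving the slope and intercept from data corresponds to the build-phase sense used in the memorandum; running the fitted model on held-out validation points is inference used to evaluate a version of the model; and running it on a new input is inference after deployment. The data and function names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def fit_line(points: List[Point]) -> Tuple[float, float]:
    """Build phase: derive slope and intercept by ordinary least squares."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def predict(model: Tuple[float, float], x: float) -> float:
    """Inference in the usual sense: generate an output from an input."""
    slope, intercept = model
    return slope * x + intercept

def mean_absolute_error(model: Tuple[float, float], points: List[Point]) -> float:
    """Average size of the prediction errors on the given points."""
    return sum(abs(predict(model, x) - y) for x, y in points) / len(points)

model = fit_line([(1, 2), (2, 4), (3, 6), (4, 8)])         # training data
print(model)                                                # (2.0, 0.0)
print(mean_absolute_error(model, [(5, 10.5), (6, 11.5)]))   # 0.5, build-phase evaluation
print(predict(model, 7))                                    # 14.0, after deployment
```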

Applying the updated definition

The OECD definition of an AI system intentionally does not address liability and responsibility for AI systems and their potentially harmful effects, which ultimately rest with humans. Nor does it in any way predetermine or pre-empt the regulatory choices that individual jurisdictions make in that regard.

The updated definition of an AI system is inclusive and encompasses systems ranging from simple to complex. The fact that a system is “simple” does not mean that it carries no risk or that its safety need not be assured, but it does mean that providing such assurances may be simpler than it would be for a complex system. AI represents a set of technologies and techniques applicable to many different situations. Specific sets of techniques, such as machine learning, may raise particular considerations for policymakers, such as bias, transparency, and explainability, and some contexts of use (e.g., decisions about public benefits) may raise more significant concerns than others. Therefore, when applied in practice, additional criteria may be needed to narrow or otherwise tailor the definition when used in a specific context, and additional regulations may apply to certain types of AI systems, even in the same context of use.